Deploying and Pressure Testing a Markov Chains Model

To ensure that the deployed model is valid and versatile, we designed and applied a testing methodology. This results in closing the narrative and institutionalising our data-driven attribution model as the single source of truth within the company.

Key takeaways:

In part 3 of this 3-part series on fractional attribution models, Baptiste Amar, senior data scientist, explains how he deploys and pressure tests the Markov Chains model. Deployment implies replicating the global findings from the design phase at the channels’ sequence level: every time a transaction fires, we need to distribute it across the marketing channels that triggered it.

Then, to ensure that the deployed model is valid and versatile, we designed and applied a testing methodology. This results in closing the narrative and institutionalising our data-driven attribution model as the single source of truth within the company.

Read part 2 to learn how Baptiste chose and designed the Markov chains model. Read part 1, Understanding data-driven attribution models, for an introduction to the topic and its business case.

{{divider}}

Here’s a recap of the year-long project from choosing a model to pressure testing it:

Q1: Selecting and designing the model. In the first phase:

- We built a solid dataset with paths to conversion for every customer

- Tweaked Markov Chains model to estimate overall channel importance

Q2: Deploying chosen model

Q3: Validation / pressure testing the model on real marketing campaigns

After Q1, the project's outcome was super insightful, but wasn’t yet the backbone of a daily marketing strategy that we were trying to provide. To go one step further and extract the highest value possible from the project, we dedicated the second quarter to deploying the model. Every time a transaction fires, the goal is to credit all involved channels for the right portion of revenue, depending on their impact.

One constraint for the deployment is that we did not want to retrain the model every day because:

- it would have been computationally expensive

- we wanted the channel's revenue evolution to be model neutral so it's only impacted by marketing interventions.

Hitting the rock: from global importances to individual estimates

This is where we faced an issue we did not foresee: How could we translate overall channel importances into individual-level estimations? In other words: for a given sequence of channels, how much should we credit each of them?

Theoretically, it was an unsolvable problem: getting channels credits at the transaction-level implies that paths are independent of one another. However, Markov Chains' logic is built upon dependency across all the sequences in the graph. To translate global outcome from the model into individual-level, we needed to tweak (once again) Markov Chains logic and adapt it to our needs.

Asking for help: tapping into the community

At GetYourGuide, when we run into a problem, we’re always encouraged to ask our peers and share knowledge. In this case, we didn’t have an expert in this domain. So to solve the problem, we reached out to external sources: We contacted the author of the ChannelAttribution package, whose contact we found on CRAN.

We explained to him the operational problem we were facing. He agreed to help as it was also an opportunity for him to enhance the package’s outcomes from the deployment perspective. This was a fantastic learning experience, as we were both super committed to finding a solution in a timely manner. This required strong collaboration based on each of us, leveraging the best of our different skill sets.

This is where we faced an issue we did not foresee: How could we translate overall channel importances into individual-level estimations? In other words: for a given sequence of channels, how much should we credit each of them?

Together, we tested several different approaches, and after an incredible amount of trial and error — and a few tears —we came up with an original method. From the global credit allocated to every channel, we built an algorithm based on the transition matrix that estimates credit to allocate for each channel in every path, so the aggregation replicates global results.

You might also be interested in: 15 data science principles we live by

The underlying idea is that global results (channel importances) make sense: they come from Markov Chains logic. When applying weights to channels at the path-level – i.e. depending on the other channels in the paths and its position – and aggregating every path, we need to approximate the initial results to the best extent possible.

To do so, we designed an algorithmic approach that takes the actual sequences of events throughout the entire graph and applies weight. After normalizing the weights and aggregating all conversions, we compare the results to the channel importance and apply a corrective factor. Then we do the process again, apply another corrective factor, and so on and so forth. Once we reach the desired convergence level, the process stops and outputs channels path-specific weights for every sequence that we observed.

The last piece of the algorithm we needed to build relates to the fact that there is an infinite number of combinations that can lead a customer to conversion. Hence, many have not observed. Then, we built an algorithm that split it into triples of channels for every new path and replicated the path-level channel weights estimates that we calculated before.

The new approach is now embedded within the ChannelAttribution package.

You may also be interested in: How this display marketer and his small team make a big impact

Building the pipeline

By applying the above methodology, the outcome is that for any sequence of events towards conversion, we can credit the channels depending on their impact. To deploy the model and have every new transaction’s revenue distributed, we built the following pipeline:

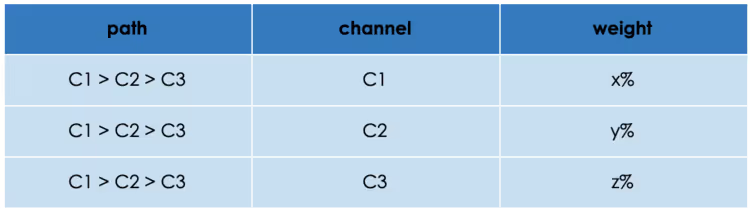

1. We stored the results of the model at the path level: it is basically a three-columns csv containing the sequence of channels, a specific channel, and its weight for the specific sequence.

2. Everyday, we gather transactions and their paths and apply the weights accordingly. If the path has never been observed before, we impute it.

Phase 3: pressure testing

At the end of phase 2, we refined a Markov Chains model distributing every transaction's revenue across involved channels. A lot of sanity checks and backtesting ensured the model's validity on the past interactions between our customers and our marketing interventions.

To ensure our model’s versatility, e.g. its ability to adapt to new situations, we dedicated a quarter to aggressively pressure testing the model on actual marketing campaigns. The hypothesis we want to verify is that, unlike heuristic approaches (e.g. our U-shape model), Markov Chains attribution captures incrementality signals.

What is pressure testing?

Pressure testing consists of designing marketing campaigns and measuring two elements:

- Incremental revenue generated by the campaign

- Channel weights’ evolution in the exposed group against an unexposed group

If a specific campaign is proven to be very incremental, the Markov Chains model should credit the involved channel with more weight than where/when there is no campaign.

Example of pressure test

We wanted to perform as many pressure tests as possible to ensure model sanity, but also respect a relevant timeframe so that the project is closed at some point – avoiding analysis paralysis. To do so, we planned all the tests happening over a quarter, from campaign design and launch and outcome analysis.

Examples of pressure tests in the pipeline are:

- blacking out a specific segment from paid search

- navigational searches uplift from Video campaigns

- repeat purchase incentives CRM campaigns

In all those cases, we measure both the incremental revenue generated (or lost) and compare it against models’ weight to ensure that the incrementality signals were captured correctly.

You might also be interested in: How we built our modern ETL Pipeline part 1 and part 2.

Conclusion

Taking these three steps, we designed, deployed, and tested our Markov Chains based attribution model. The last remaining step is then to socialize widely throughout the company to make sure it is instituted as the single source of truth regarding revenue attribution. For this matter, thoroughly understanding every stakeholder’s needs and expectations is key, and so is providing the model’s outcome in the right format (datasets, dashboards, presentations, specific analyzes, and so on).

Next steps towards measurement

Deploying a data-driven attribution model is a significant additional step towards making marketing decision-processes more data-driven and eventually more profitable. To go further and beyond into marketing measurement, though, it can't solve every question standalone.

To ensure our model’s versatility, e.g. its ability to adapt to new situations, we dedicated a quarter to aggressively pressure testing the model on actual marketing campaigns. The hypothesis we want to verify is that, unlike heuristic approaches (e.g. our U-shape model), Markov Chains attribution captures incrementality signals.

Firstly, it is blind to some specific marketing interventions (offline) and interactions (impressions). This means that some channels, like display, will not get credited for the right value they are bringing to the company. To understand the value of those channels that are not trackable or those whose value does not only lie on click, we should refer to other marketing measurement standards such as Media Mix Model or lift studies.

You might also be interested in: How we scaled up A/B testing at GetYourGuide

Secondly, the attribution model provides a short-term vision of channel performance. Our model is based on the last 90 days before the conversion. To set the correct ROI targets for the channels, however, one needs to understand that the quality of captured traffic is important: some channels can participate in building longer-term relationships with clients, which results in their customer lifetime value is higher. In this case, attribution needs to be connected with a solid CLV framework and hacked with long-term ROI multipliers.

In general, each implementation of an advanced analytics tool makes the organization smarter and marketing interventions more efficient. But at the end of the day, blending all the tools to create a unified framework helps optimize budget allocation, steer channels efficiently, and set up the right targets and forecast outcomes.The more you build and blend them together, the higher the value your marketing analytics will generate — way beyond the sum of each value model.

If you are interested in business intelligence, data analysis or data science, check out our open positions in engineering.

.JPG)

.JPG)