How We Extended our Open Source ML Platform to Support Real-Time Inference

Our machine learning (ML) platform helps data scientists at GetYourGuide build data products and iterate on them faster.

Key takeaways:

Theodore Meynard is a senior data scientist at GetYourGuide on the Recommendation and Relevance team. Our recommender system helps customers find the best activities for their needs and complement the ones they’ve already booked. In this follow-up post to Part I, he explains how together with Jean Machado, they supported real-time inferences by extending their machine learning (ML) platform.

- Introduction

- Motivations and challenges of real-time inference

- The workflow adopted for the ML platform

- How do we build a web service for our models?

- Conclusion

{{divider}}

Introduction

Our machine learning (ML) platform helps data scientists at GetYourGuide build data products and iterate on them faster.

We’ve leveraged open-source tools to build the platform efficiently. We only introduce new technology when necessary, and adapt company tools to fit our projects when possible. In the first phase of this project, we created the framework to allow data scientists to train and predict in batch, the most common technique to make predictions in production.

In batch inference, you predict the outputs of all the feasible inputs at once, typically saving the predictions and taking action on the observations later. The second most common technique to make predictions in production is real-time inference. In real-time inference, you immediately predict your output only for the requested parameters

In this blog post, I will explain how Jean Machado and I extended it to support real-time inference. I’ll first cover why we need to go into real-time inference, and the additional challenges it brings. Then, I’ll describe the framework we developed to allow live inference use cases. Finally, I’ll dive into the tools we use to build a web service.

We also talked about our ML platform at the Data+AI 2021 conference. You can watch the recording here.

Motivations and challenges of real-time inference

Batch inference is usually great for the first version of a model, or when a use-case requires it. It’s usually simpler and faster to set up.

As we iterate on the project, its logic becomes more complex and requires more sophisticated features. It becomes trickier to predict in batch for two reasons:

- The feature values we use for predictions change very quickly. For example, the history of activities a user views is a very dynamic feature, and we want to use the latest value for better predictions. It is used to personalize the recommendations we serve to our customers.

- The input space gets too big. As the number of features increases, we need to generate more predictions for each possibility, and it can quickly explode. For example, ranking activities in different orders depending on whether a user is on desktop or mobile, or the language they use on our website. Each time we introduce a feature like this, we multiply the input space by the number of segments the dimension has. It’s used to improve the relevance of activities on our search result pages.

To solve these problems, we can instead do the predictions in real-time. In this case, we just need to predict the values of interest for the requested parameters, not all the possible combinations, and we can predict using the latest feature values. That means we need a web service that can do predictions in a reasonable amount of time, handles multiple requests concurrently, and can be monitored and scaled according to the traffic.

In addition to those classical engineering problems, we have challenges specific to the deployment of an online ML model. Following the same principles as during the first phase of the project, such as following software engineering best practices and making our work reproducible, we aim to automate the integration and deployment steps.

The workflow adopted for the ML platform

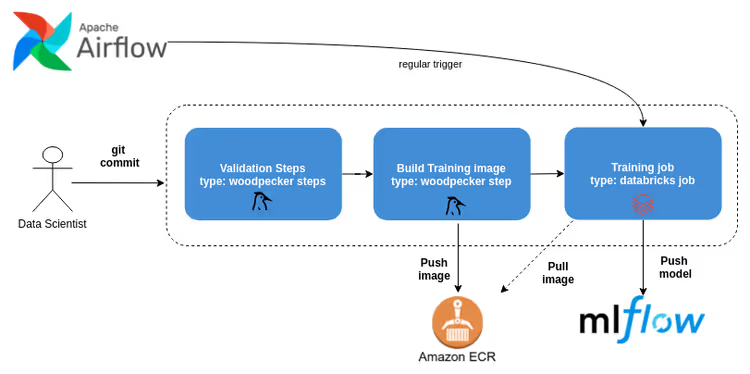

Our training workflow is almost identical to the training for batch inference and online inference.

Once the training is done, we need to deploy our model. But it can’t be deployed within the same environment that it was trained in. At GetYourGuide, we have a clear separation between data infrastructure and production infrastructure. We mainly use Databricks as our data infrastructure, but services are deployed on a Kubernetes cluster in the production environment.

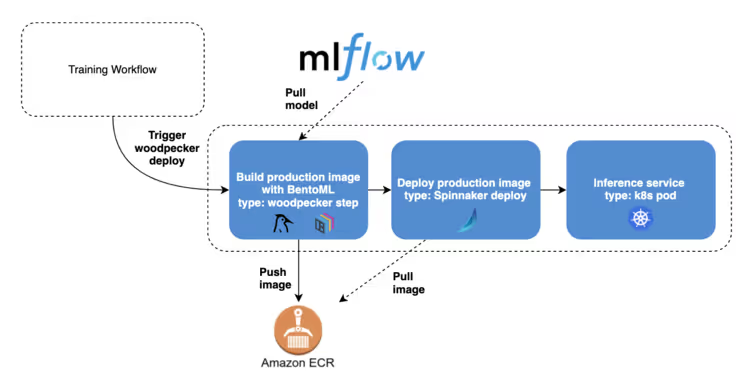

Once a training workflow is done, it triggers a deployment pipeline. The deployment will first load the model from the MLflow registry. It then uses BentoML to package the model into a web service, and serve the result as a Docker image. The Docker image is then pushed to the Amazon container registry. We use Spinnaker to perform a canary release. If it’s successful, it’s rolled out in our production environment.

This approach has additional advantages:

- Identify versions: Separating the tracking of code on GitHub and the tracking of our model on MLflow gives us additional flexibility. We frequently use the same code to train different models at different times. Providing a unique identifier of the web service which can direct to the MLflow model version, and a separate code version makes sure we always know what’s currently being used.

- Easily handle rollbacks: Once the Docker image with the model and code have been built, the web service can be reused anytime. This is particularly handy in case we have an incident. If we have a model that’s not behaving as expected, we can retrigger a deployment of a previous image in Spinnaker.

- Speed up hotfixes: We also need to apply some changes which are not related to the ML model. For example, the service is not covering an edge case when validating the input data. In this situation, we’d want to deploy the new code version with the latest model as quickly as possible, and not have to wait a few hours before a training job is completed. Instead, we can push the change to the repository, not trigger the training pipeline, and directly trigger a deployment pipeline. It can be automated by specifying some flags in the commit message. A new production image will be created using the latest code and the previous model.

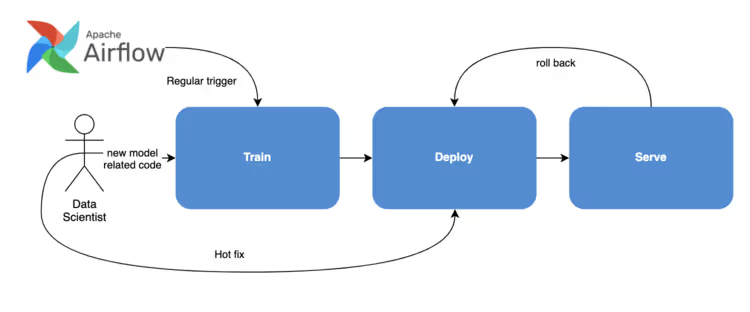

Below is a graph summarizing all the possible workflows our platform supports.

How do we build a web service for our models?

I’ll now dive deeper into the library we used to create our web service. Following our engineering principles, we tried to leverage open-source projects as much as possible, and contributed to them to include the new functionalities we need. We experimented with many solutions and spent time trying to make them work with the rest of our infrastructure.

We specifically considered the following solutions:

- MLflow to build a production image, then push it to our Kubernetes cluster

- MLflow to build a production image, then push it to Sagemaker

- seldon-core with MLflow as the model registry

- Wrapping our model ourselves using a popular web framework (like FastAPI or Flask)

- BentoML to build a production image, then push it to our Kubernetes cluster

After weighing the pros and cons of each approach, we decided to use BenotML. It benefits the prediction speed and the flexibility we have when defining the service.

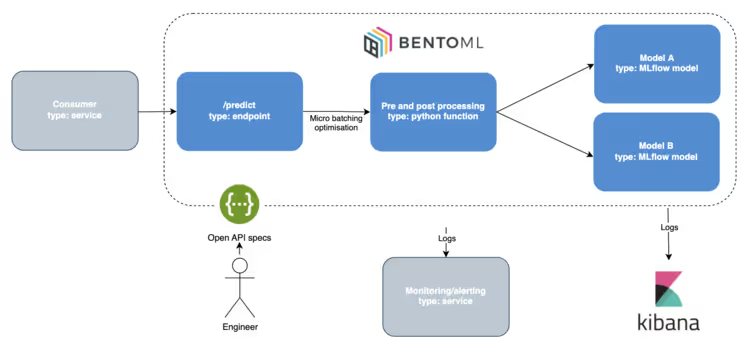

These particular aspects were very attractive to us:

- It automatically provides OpenAPI specifications (it’s widely used inside our company and is very useful when consumers need to query our service)

- It provides a simple and elegant API to pre and post-process the predictions

- It comes with micro-batching which permits higher throughput than other alternatives

- It accepts multiple models wrapped in the same service

In particular, if we want to run an A/B test, we only want to use one service with the control and treatment models. We use the pre-processing functionality of BentoML to select which model to employ for each user.

Below is a graph summarizing the main features of BentoML:

We also helped give back to the BentoML community by adding some documentation of how to integrate BentoML with MLflow, helped with a security patch, and made the default entry point as a non-root user in Docker.

Conclusion

By now you’ve learned how we extended our ML platform capabilities to real-time inference. We’re now building real-time inference services by using our platform and are continuously improving it with what we learn along the way.

We’re also investigating other topics including:

- Feature store to easily access features for inference and training

- Data test to validate our data set

- Monitoring and alerting of model drift

With the platform, we can own the full cycle of model development and ship data products faster to help people find incredible experiences.

If you’re interested in joining our Engineering team, check out our open roles.

.JPG)

.JPG)