Leveling Up GetYourGuide’s Data Platform

Discover how GetYourGuide's Data Engineers, Robert Bemmann and Shaurya Sood, executed a seamless migration to Databricks' E2 platform, ensuring uninterrupted data operations with minimal disruption.

Key takeaways:

Robert Bemmann, and Shaurya Sood, Data Engineers at GetYourGuide in Berlin, share the migration approach their team undertook to transition to Databricks' E2 platform. They detail the carefully planned steps taken to achieve a smooth and accurate migration process, guaranteeing minimal disruption to both the Data Platform's functionality and the continuity of day-to-day operations.

{{divider}}

At GetYourGuide, the Core Data Platform team’s mission is crucial: enabling the company to leverage data autonomously to build innovative data products. Our entire spectrum of data operations - ranging from processing, exploration, and transformation, to the creation of machine learning and AI systems - is meticulously executed on Databricks.

Until Q1 2023, our Databricks infrastructure operated on a legacy workspace architecture, known as the single tenant (ST) workspace, established in May 2015. However, with the evolution of technology, this architecture is making way for the advanced next-generation Databricks platform - E2, as it approaches its end of life by the conclusion of 2023. E2 brings enhanced functionalities, including Unity Catalog, Delta Live Tables, Databricks SQL, and multi-workspace support, alongside the capability to operate LLMs.

In light of this shift, a substantial migration was necessary, involving the migration of over 2200 data pipelines, predominantly spark jobs, accommodating 10+ teams and in excess of 550 users.

This migration wasn’t limited to data pipelines alone; a spectrum of essential assets like notebooks, clusters, data, and machine learning models also made the journey.

A priority throughout this transition was to ensure continuity, aiming for no or minimal interruption in operations. This blog post will discuss the details of the migration process. We'll cover what was needed for a smooth transition, our planning for the new Databricks workspace, and the challenges we faced and how we solved them.

Planning the Migration

Before starting the planning process, we first collected the necessary requirements. Conversations were held with Databricks users from different areas of the platform to understand their crucial needs. Databricks at GetYourGuide is utilized by a wide range of teams, not limited to engineering. These include analytics, marketing, business, and product teams.

Requirements

Our main goal was to have a smooth migration with little to no disruption in data operations. In the Data Platform team at GetYourGuide, all data transformation and processing are done using Spark on Databricks. We have strict SLAs to deliver business reports based on these processes, and it was important to meet these SLAs without any interruptions. Other important teams, like Data Products and Marketing Platform, also depend a lot on Databricks. So, it was necessary to make sure their operations continued without any problems during the migration.

A flexible migration strategy was crucial, allowing individual teams to migrate at their own pace. This meant facilitating a migration process where one team could transition immediately, while another could take several weeks to move their workloads and jobs comprehensively.

Every component of Databricks, such as notebooks, jobs, clusters, MLFlows, teams, users, secrets, and instance profiles, needs to be transferred and function as usual. The only noticeable modification for end-users will be the updated URL for the new workspace, ensuring the platform maintains its familiar operation and reliability.

Workspace Architecture Design

In terms of desired workspace design, we had to come up with a design that serves our potential future needs and get the buy-in from all the teams and their respective point of contact.

The new E2 architecture inherently supports multi-workspace functionality. Hence, initially, for the workspace design we wanted to come up with a design that allows a future separation in terms of workflows, costs and ACLs. Our initial design was to have one workspace per team (LOB=line of business) as we planned for a clear division of users by groups to improve the overall governance of the Lakehouse. Each team would have its own catalog (with separate write access), but with read access to other catalogs. Fine-grained table access rights (such as blocking read access or masking for PII data) would be managed by Unity Catalog governance rules. The following visual outlines what we had in mind.

However, the above design was too complicated to migrate into immediately. Therefore, we decided to go with one single E2 workspace that would serve as a bridge workspace, so that we are able to migrate all assets to the E2 environment in a simplified manner. Our strategy was to apply a straightforward 'lift and shift' approach. We decided to replicate the existing workspace in its entirety, maintaining all of its established characteristics, into the new E2 account.

In reality, as of today, our workloads are still running on this single bridge workspace (but on E2 architecture) as we currently do not see a real benefit in overcomplicating things:

- Multiple workspaces may require additional management overhead as we would also have to configure and maintain separate catalogs

- Additionally, further splitting up the workspace would call for another migration - the migration of all ETL jobs. The jobs potentially would need to connect to another workspace, which comes with additional complexity and maintenance.

- Cost tracking does also work with proper tagging of jobs and clusters using team cluster policies

However, one challenge we still face is having unified ACLs on schemas and tables, for which we might plug in Unity Catalog in the future.

Looking ahead, we might consider further subdividing the workspace among various teams and perhaps creating distinct workspaces for different environments such as production and staging. But for now, the goal was to migrate the unified legacy workspace as it is, preserving its existing structure and functionality.

Challenges

Since our goal was a phased migration, some spark jobs could run in the legacy workspace while others could operate in the E2 workspace. This approach posed a significant challenge: ensuring all datasets remain synchronized across both environments at all times.

Everything, from all S3 buckets and their respective files to all Managed and External Tables of the Hive metastore, needed to be consistently available and updated in both the old and new environment, ensuring jobs could read the same data irrespective of their operational workspace.

Based on our requirements, here are some of the questions that we asked ourselves:

- How do we maintain synchronized table metadata across workspaces? For instance, if a table was updated from the E2 workspace, how could updates be reflected and accessed in the legacy workspace? These updates could be various modifications like the addition of new data or partitions to the table.

- What about the managed tables whose files are exclusively available locally in the dbfs file system of the legacy workspace? How can the E2 workspace access those files? Is there a requirement to transfer those files preliminarily?

- How can we determine and identify the priority tables for migration? What is the approach to ensuring that focus and resources are allocated to migrating the most critical tables? Is it possible to utilize spark metadata to facilitate this identification?

- What’s the process for migrating other essential assets such as notebooks, users, clusters, and machine learning models?

- How do we establish and maintain a single source of truth for all Databricks infrastructure components and configurations throughout the migration process and also for the future?

Solutions

Synchronising Metadata across Workspaces

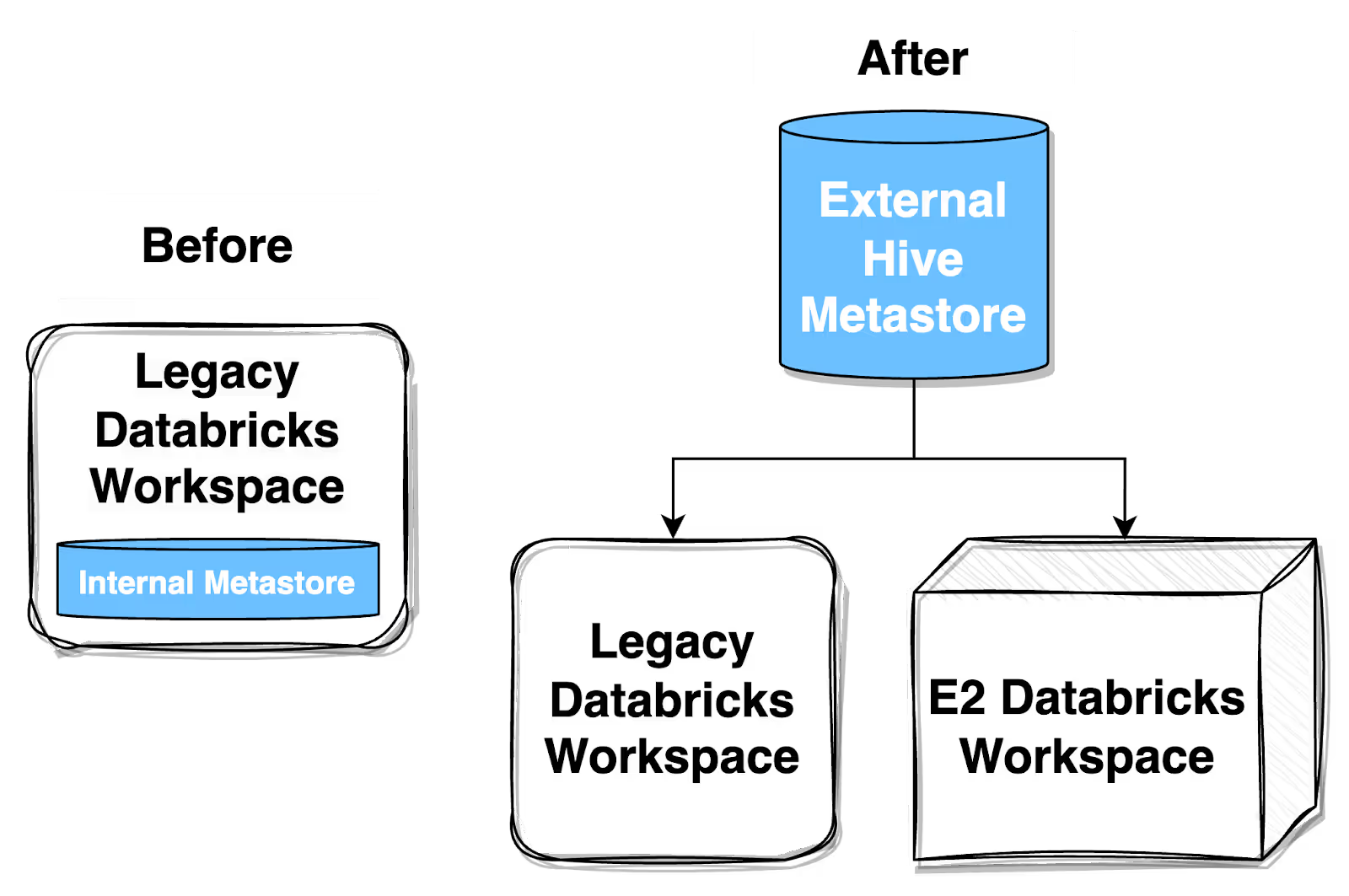

Leveraging an External Hive Metastore as a common Satellite

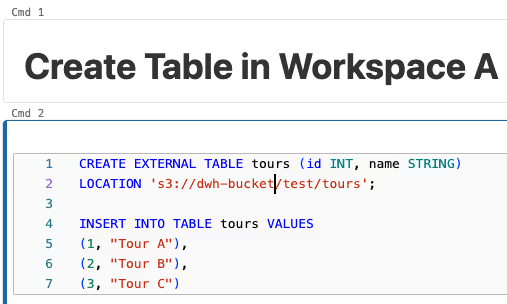

Using an External Hive Metastore (EHM) helped us manage metadata and table structures across different workspaces more effectively. Let’s go through a practical example to understand its benefits better.

Imagine adding a new partition to a table in Workspace A. Without an EHM, if you try to access this table from Workspace B, the new partition won’t be visible. This happens because, without a shared metastore, updates made in one workspace don’t show up in another.

One way to handle this without an EHM is to create a custom application to keep the internal metastores of the two workspaces in sync. But this method would need a lot of maintenance overhead and constant near real-time updates, taking resources away from other important tasks.

With an EHM, the metadata updates are centralized. So, when a new partition is added to a table in Workspace A, the EHM gets updated. Then, when you access the table from Workspace B, the EHM ensures that the new partition is visible, keeping data consistent across workspaces.

In conclusion, using an EHM made the data management process more efficient, eliminating the need for a separate application to sync metadata across different workspaces.

How was the migration to an External Hive metastore done?

We employed a 4-hour maintenance window to pause all activities on Databricks to be able to facilitate this migration. Although the process is fairly quick and did not take longer than 30 minutes.

- All activities in Databricks were paused as a prerequisite to prevent any changes during the migration process, which included stopping all scheduled jobs and disabling user logins.

- A backup of the internal Databricks Hive metastore was created and imported into a new database hosted on an RDS instance.

- Next, all clusters across both legacy and E2 workspaces were configured via a global init script to always connect to the external metastore on startup.

- Validations were carried out to ensure that everything was functioning as expected.

- Normal operations were resumed, re-enabling user logins and scheduled jobs.

Migrating Managed Tables

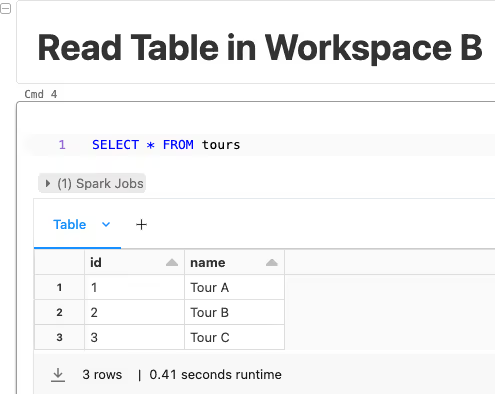

Moving on to our next challenge after migrating to EHM, we were tasked with the migration of over 21,000 managed tables, which amounted to about ~10TB of data. Even though the External Hive Metastore (EHM) solved our problem of keeping tables and partitions in sync, serving as a central source of truth, the migration of the managed tables presented its own unique set of challenges.

Why did we need to migrate the Managed tables?

For the managed tables, the files were localized to the legacy workspace, and consequently the data, were inaccessible from the E2 workspace. Although the EHM provided metadata access, querying the managed tables in E2 was futile as they appeared empty; the actual files resided in the DBFS of the legacy workspace.

To elaborate with an example, if a managed table in the legacy workspace was queried from the E2 workspace, it would show up as empty with zero records because the actual files containing the data were located in the local DBFS of the legacy workspace, making them inaccessible from the E2 workspace.

Thus, finding an efficient strategy to handle the migration of these substantial managed tables was crucial in our migration process.

Given the substantial volume of data to be migrated - 21,000 tables equating to around 10 TB - a strategic, prioritized approach was essential. We identified tables that were accessed most frequently to migrate them as a priority.

To determine this, we utilized various pieces of table metadata, such as creation dates and partition addition dates, available in the metastore. Additionally, we implemented a Spark query listener, which further informed us about the usage patterns of the tables, ensuring that our migration strategy was both efficient and focused on the most immediately necessary data. More on Spark Query Listener if you keep reading!

How did we migrate the data?

The migration of data was meticulously carried out in multiple phases and iterations. Here's a systematic breakdown of how it was executed:

- Selection of Tables: Tables were selected for migration in batches, prioritized based on immediate necessity and usage.

- Pausing Updates: Any update activities, such as writing or appending to the chosen tables, were temporarily halted. This meant pausing jobs that were actively interacting with these tables.

- Data Transfer: The actual data, stored in parquet files, was copied from the Databricks File System (DBFS) to a designated S3 bucket.

- Updating Metadata in Hive Metastore: This step is notably intricate. After transferring the files, the metadata in the Hive Metastore needed updating to reflect the new S3 locations of the tables.

- The crucial realization was that the table storage locations were documented in two specific metastore tables: SDS and SERDE_PARAMS.

- Upon transferring the files to the new S3 location, the metadata entries in these tables were meticulously updated. This involved changing the referenced locations to point to the new S3 paths.

- Additionally, the default location of the databases was modified.

Consequently, this universal approach ensured that both the legacy and E2 workspaces pointed to the same synchronized location in S3, and also had access to the same data - promoting consistency and alignment across the two workspaces.

Let’s talk about the Spark Query listener a bit more.

Using a Spark Query Listener to identify frequently used tables

We needed a solution that listens to all metadata I/O (files, partitions, tables) of our Spark jobs and stores the info permanently. This metadata could be joined with the external Hive metastore data and we would know which tables (which is only a small subset of all 21K managed tables) we have to move ourselves or convert to external tables to speed things up.

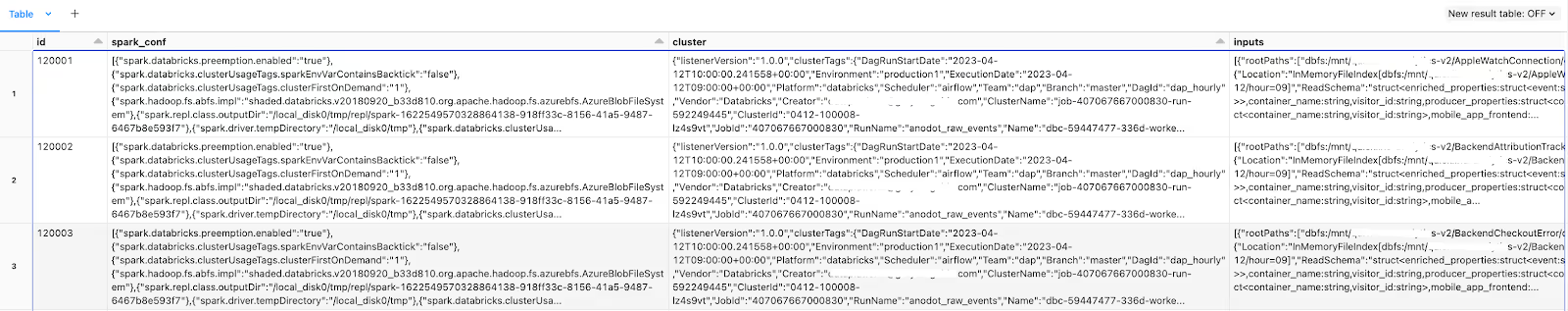

For the custom Spark Listener we extended the default QueryExecutionListener class and overwrote the queryExecutionListeners for each spark job via init script ("spark.sql.queryExecutionListeners" = "com.getyourguide.spark.listener.ClusterListener"). The application then listens to the Spark Query execution plan and parses relevant info as JSON. This JSON is then simply dumped to a MySQL database, so that we can do the querying and transformations later via SQL. A sample JSON payload looks like this:

As a next step, we just needed to dump the MySQL data to S3, so that we can analyze it easily in a notebook. It was much easier to write analytical queries using Spark SQL. We were interested in the inputs and outputs primarily, but the cluster and spark_conf data was very useful as well.

The final step is a simple join of the Spark listener input and output data with the metadata information stored in the Hive metastore database (to retrieve table_type and table location). That way each team could check their respective airflow tasks for managed tables that need to be converted into external tables.

Databricks Migration Tooling

For the final stage of the migration, we turned to Databricks Labs' migration tooling, which provided critical scripts for a one-time, clean switch from our standard workspace to the E2 workspace. These scripts were effectively utilized for migrating users, clusters, notebooks, jobs, secrets and ML models. This step was taken only after setting up the external hive metastore, and finishing up the Managed Tables migration, thus ensuring a smooth and final transition to the E2 environment.

Databricks Infra-as-Code

When we decided to set up our Databricks and AWS infrastructure, Terraform immediately stood out as the right tool for the job. Here's why:

- Quick Setups are Now a Reality: Thanks to Terraform modules, we can create new Databricks workspaces or AWS configurations in no time. This also means that adding new elements to our existing setup will be straightforward in the future.

- A Reliable Source of Truth: All our Terraform code is securely stored in a version-controlled repository. This becomes our go-to reference for how we built our infrastructure, which is incredibly helpful for future reference or troubleshooting.

- Reduced Risk of Errors: Terraform minimizes manual errors by translating our infrastructure requirements into code. This makes the setup process more reliable and consistent.

- A Unified Guide for Everything: One of the best parts is having a single point of reference for both Databricks and AWS. This simplifies management and ensures that we can understand our entire architecture easily.

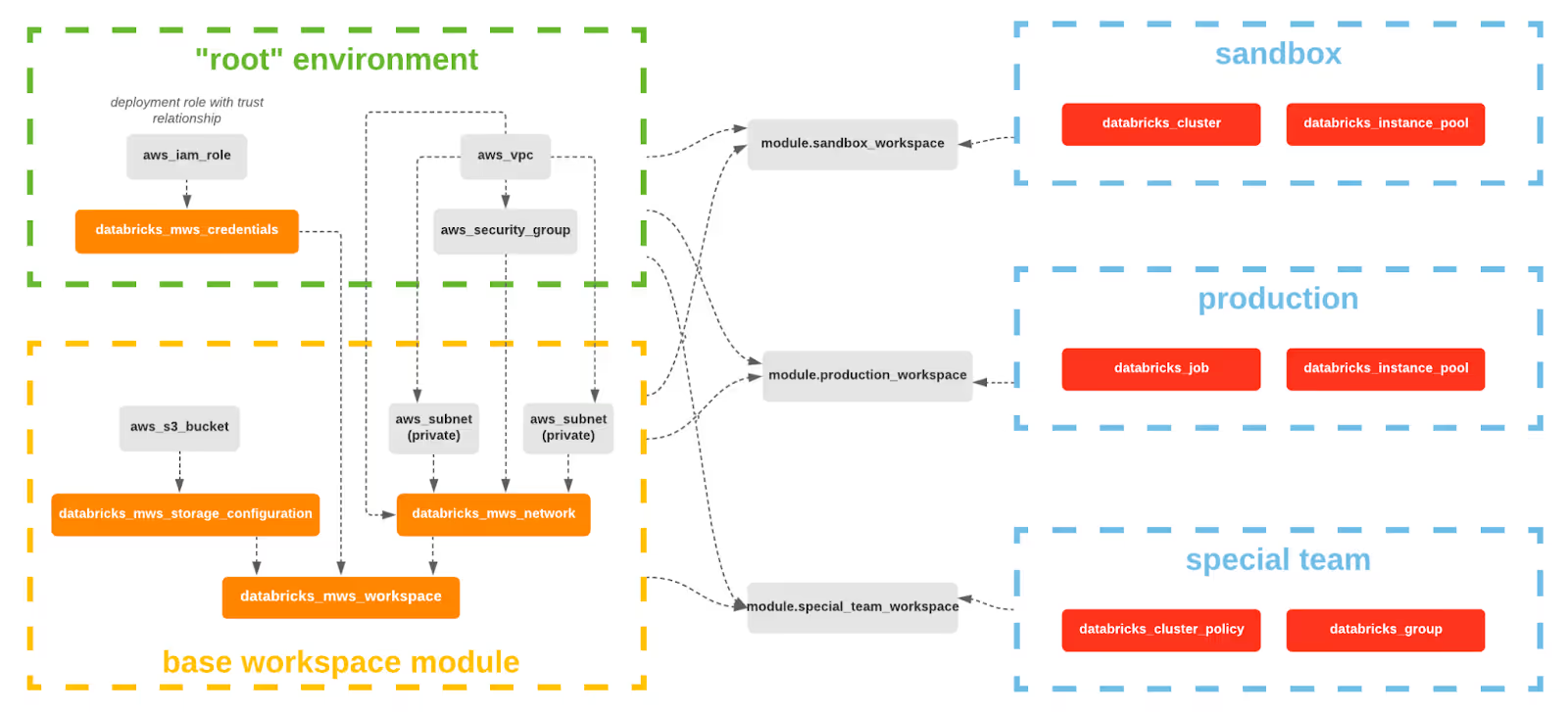

All relevant assets needed for a successful migration were created via the Databrick and AWS Terraform provider, amongst others:

- MySQL RDS DB for the EHM

- central AWS VPC (incl. subnets) that is connected into our main company network via transit gateway

- workspace (as reusable module) incl. it's S3 root bucket, mws_credentials, mws_storage_configuration and mws_network

- all required IAM roles for instance profiles and S3 bucket ACLs

The following architecture diagram taken from the official databricks provider docs illustrates our Terraform setup. In our case, it is slightly modified as we are using one single VPC (IAM role and databricks network) for all workspaces.

In short, Terraform has simplified our Databricks Platform journey and set us up for success with future cloud infrastructure projects.

Learnings

We were able to migrate our whole Databricks infrastructure with 2FTEs within 6 months (start end of November 2022, roll out mid-May 2023).

As a wrap, these were our main learnings:

1. Basics of project management

- Each big and complex project such as this migration needs thorough planning, coordination, and proper communication within the whole company, especially as that many other teams and stakeholders were involved. Define one main goal and a few milestones in between.

- Get regular buy-in from other teams via RFCs (Request for Comments) to define requirements and expected outcomes and if they are actually met. When in doubt, always over-communicate in the relevant channels, in our case Slack (a dedicated channel with regular updates) and emails to global GetYourGuide recipients. Furthermore, we tried to be as responsive as possible whenever we launched or rolled out a new feature. This increases trust in you for your stakeholders.

2. Define a clear north star goal as well as milestones

- Our north star goal was that all users use the new E2 workspace in May 2023 and all of our data pipelines run there as well. Always keeping this in mind helped us tremendously in prioritizing all the tasks we could potentially do. Basically, it makes the decision easier for what all NOT to do right now. Things that sound nice to have in theory, but did not contribute to the main goal at all are: Cleaning up all kinds of assets like unused tables, policies, users and groups, having cluster policies right away, having proper ACLs for tables and datasets, SSO from the beginning, and API token rotation.

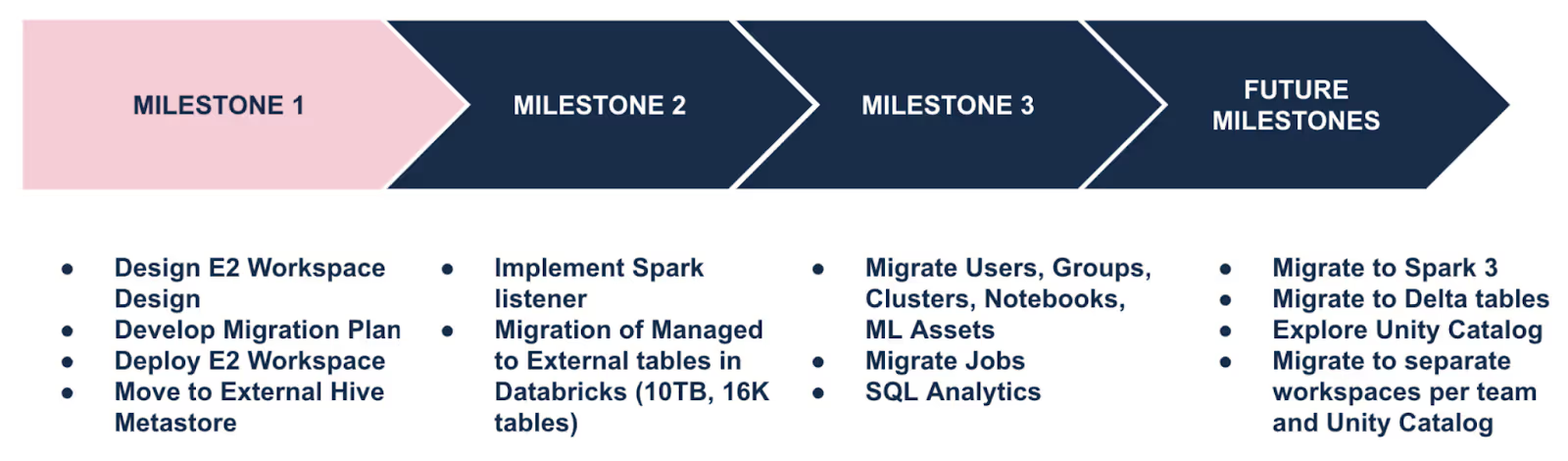

3. Another learning for projects that exceed 1 month of work was to use milestones. With milestones you have something to present in between the project. They also give a good feeling how much you are aligned with keeping the timeline in terms of your initial planning. We defined 3 milestones, with a few subgoals were:

- Software migrations should be incremental in most cases, especially if the scope is big. For our project, incremental especially meant that the single teams could migrate their scheduled data pipelines (spark jobs) individually and that there are zero implications with regard to data consistency. Using an external Hive metastore instead of the Databricks internal one was the unblocking solution for this problem. No matter if a pipeline writes from the old or new environment, we can always ensure that the data is in sync.

- For us, it also paid off to ask peer engineers from other companies that went through a similar migration process. Although preconditions and workloads may differ, some best practice patterns turn out to be similar (as outlined before).

A big shout-out to Marketing, Data Products, Paid Search and all other teams involved for all the help. Your support and cooperation played a crucial role in the success of our migration.

.JPG)

.JPG)