Leveraging an Event-Driven Architecture to Build Meaningful Customer Relationships

Key takeaways:

The Customer Engagement team’s mission is to accelerate engagement across all stages of the customer lifecycle. One way the team does this is through communication channels such as email and push notifications. These messages can provide customers with inspirational content about destinations they’re planning to visit, and recommend things to do based on their upcoming trips so they don’t miss out on anything.

To establish this targeted level of interaction with our customers, the team employs an event-driven marketing strategy: relevant events in the customer lifecycle are fed into a Customer Relationship Management (CRM) tool, from which we orchestrate all communications sent to customers.

Senior backend engineer Vitor Santos shares his team's process for conceptualizing and building this integration, which helps us keep our customers engaged and aware of our inventory.

Event-driven marketing: what it is and how to enable this strategy

Before diving into the details, it’s important to have a shared understanding of event-driven marketing (EDM). In the book “Follow That Customer! The Event-Driven Marketing Handbook,” one of the authors, Egbert Jan van Bel, describes EDM as “a discipline within marketing, where commercial and communication activities are based upon relevant and identified changes in a customer’s individual needs.”

This means we are reaching out to customers as a result of some relevant action they took while using our product. For instance, a customer is going on an Eiffel Tower tour they booked on GetYourGuide. This is an opportunity for us to share with them a few other popular activities in Paris that might make their trip more incredible, like a tour at the Louvre museum or a sightseeing tour on the Seine River.

There are many event-based CRM tools in the market that help enable this strategy. These tools work by offering a platform that allows clients to:

- Import customer data and events through methods such as REST API requests and CSV upload

- Configure event-triggered campaigns through a user-friendly interface

- Leverage out-of-the-box communication delivery mechanisms to dispatch emails, SMS, and push notifications

To employ an event-driven marketing strategy ourselves, we thoroughly evaluated several CRM tools that offer this capability and chose Braze. We picked it for its ease of integration, its onboarding support, and, most importantly, an extensive feature set that suited our needs and lets our marketing teams operate efficiently.

Leveraging an event-driven architecture

Our marketplace architecture is heavily event-based. As a data-driven organization, we emit domain events for every relevant interaction between customers and our product, however small. These events carry analytical value that helps shape our decisions and drive improvements. Events are also produced to enable asynchronous communication between distributed systems.

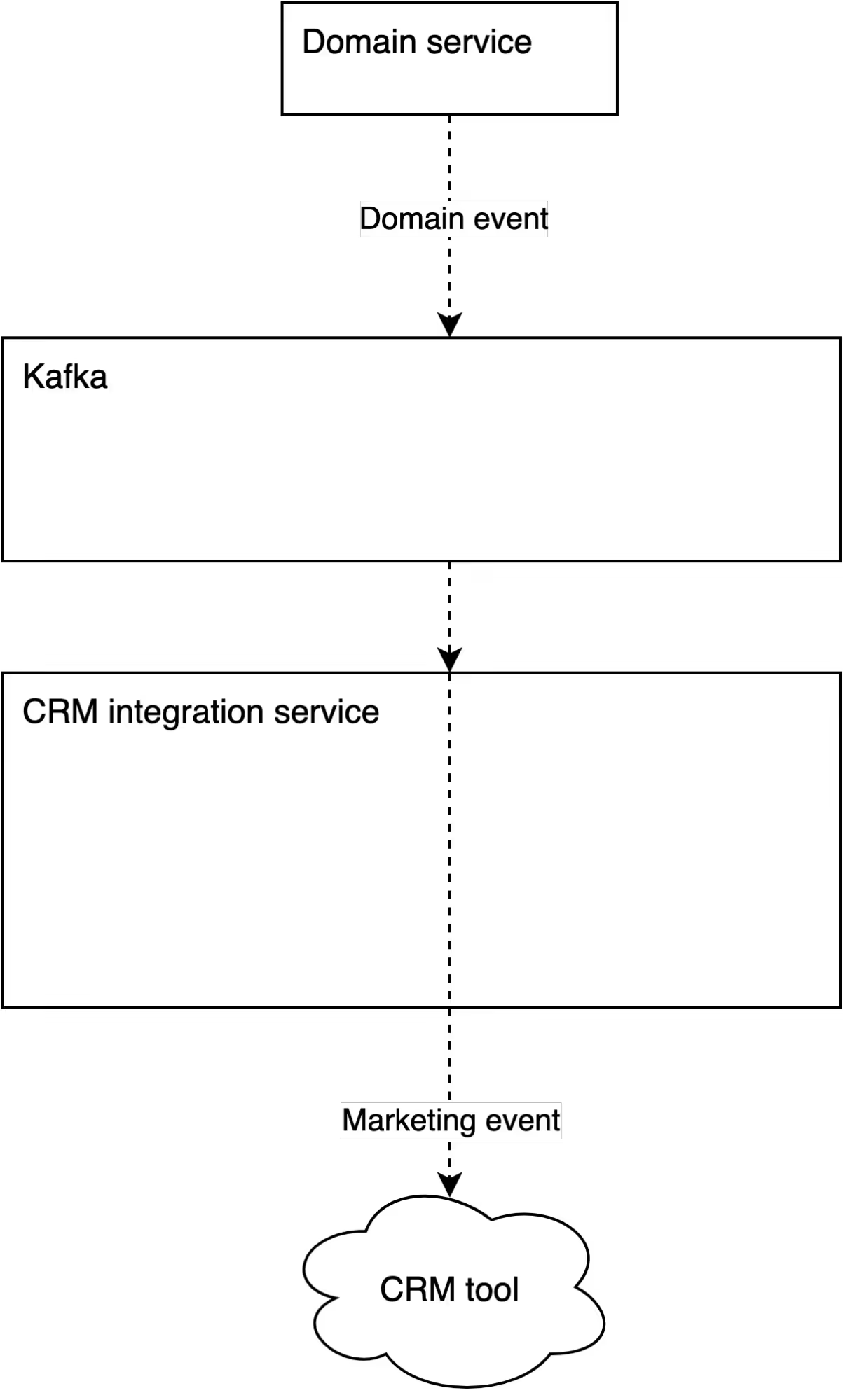

We use Apache Kafka as the event streaming platform through which domain events are transmitted. This means that our domain services connect to Kafka to publish business facts in the form of events, and they can also use it to listen to events produced by other services.

With these domain events available and the tooling necessary to consume them in real-time, we were equipped to achieve our mission: engineer a solution that plugs marketing-relevant events into our CRM tool. To achieve this, we decided to build a new system to perform this integration.

An overview of our CRM integration service

The application operates in a stateless fashion by listening to Kafka topics that contain relevant domain events produced by other services, consuming and transforming them into marketing events, which are then pushed into our CRM tool.

A marketing event differs from a domain event because it may use different naming conventions or contain additional data. To achieve that, our event processing pipeline contains an intermediate step to allow for transformation and enrichment.
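The transformation step described above can be sketched roughly as follows. This is a minimal illustration, not the actual service code: the event names, the `MarketingEvent` class, the `to_marketing_event` function, and the shape of the domain event dictionary are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Any, Dict, Optional

# Hypothetical mapping from domain event types to marketing event names.
MARKETING_EVENT_NAMES = {
    "booking.completed": "Booking Completed",
    "wishlist.activity_added": "Activity Wishlisted",
}


@dataclass(frozen=True)
class MarketingEvent:
    """Illustrative shape of an event as it would be pushed to a CRM tool."""
    customer_id: str
    name: str
    properties: Dict[str, Any]


def to_marketing_event(domain_event: Dict[str, Any]) -> Optional[MarketingEvent]:
    """Translate a domain event into a marketing event.

    Returns None for domain events that are not relevant for marketing.
    """
    name = MARKETING_EVENT_NAMES.get(domain_event["type"])
    if name is None:
        return None
    payload = domain_event["payload"]
    # Everything except the customer identifier becomes an event property.
    properties = {k: v for k, v in payload.items() if k != "customer_id"}
    return MarketingEvent(
        customer_id=payload["customer_id"],
        name=name,
        properties=properties,
    )
```

Keeping the name mapping in one place means that renaming a marketing event does not require any change on the producer side.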

To illustrate, let’s think about a booking completed event. This is a crucial part of the customer journey that offers us an excellent opportunity to establish an interaction. Apart from having this event in our CRM tool as a trigger for campaigns, we also want it to hold additional relevant information for marketing purposes. This could include whether the user has other upcoming activities, when they are taking place, and where they are located. This information helps us offer tailored recommendations to the customer based on their future plans.

Since this extra information may not come as part of the domain event, our service has a built-in capability to perform event enrichment by calling other APIs or querying extra information from a persistence layer.
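As a rough sketch of what enrichment for a booking completed event could look like, the example below adds the customer’s other upcoming activities to the event. The field names, the `enrich_booking_completed` function, and the `lookup_bookings` callable (standing in for an API call or a database query) are hypothetical and chosen only for illustration.

```python
from datetime import date
from typing import Any, Callable, Dict, List

# Hypothetical lookup: given a customer ID, return that customer's bookings.
# In a real service this could wrap an API call or a database query.
BookingLookup = Callable[[str], List[Dict[str, Any]]]


def enrich_booking_completed(
    event: Dict[str, Any],
    lookup_bookings: BookingLookup,
    today: date,
) -> Dict[str, Any]:
    """Return a copy of the event with upcoming-activity details added."""
    bookings = lookup_bookings(event["customer_id"])
    # Keep future bookings other than the one that triggered this event.
    upcoming = sorted(
        (
            b for b in bookings
            if b["date"] >= today and b["booking_id"] != event["booking_id"]
        ),
        key=lambda b: b["date"],
    )
    return {
        **event,
        "has_other_upcoming_activities": bool(upcoming),
        "upcoming_activities": [
            {"date": b["date"].isoformat(), "location": b["location"]}
            for b in upcoming
        ],
    }
```

Passing the lookup in as a parameter keeps the enrichment logic independent of where the extra data comes from, which also makes it easy to test with a stub.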

Once these marketing events reach our CRM tool, it’s up to our marketing and content teams to do their magic. From a user-friendly interface, they can set up targeted, data-rich communications across an array of channels (such as email, push notifications, and in-app messages), which are triggered by these events. Some events that are relevant in our customers’ journey include, but are not limited to:

- Account created

- Activity wishlisted

- Activity added to the shopping cart

- Booking completed

- Activity preparation started

- Activity conducted

Monitoring and resilience strategy for an event processing service

As a customer-centric company, offering a seamless experience is always a primary concern. Before rolling out any service to production, we carefully plan a strategy to ensure high availability, performance, and resilience. Establishing this plan for an event processing service brought a few exciting challenges:

Performance monitoring

We use service-level objectives (SLOs) as a framework for defining targets around application performance.

In the realm of an event processing service, our main performance guiding principle is to have event processing happen in (near) real-time. This helps ensure no bottlenecks are created due to slow event consumption, allowing us to promptly reach our customers.

We use Burrow, an open-source Apache Kafka consumer lag checking tool, to monitor the performance of our event consumer. By connecting it to Datadog, our monitoring platform, we can set up SLOs over metrics produced by Burrow and ultimately check whether our Kafka consumer is lagging (i.e. consuming events at a lower rate than they are produced) or not. As an organization, we’ve standardized all our SLO targets to three and a half nines, which in this case means that we aim for the consumer not to lag at least 99.95% of the time.
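To make the 99.95% target concrete, the sketch below works out how much lagging time such an SLO tolerates over a window, and how compliance could be computed from a series of lag samples. It is a simplified illustration: the function names and the fixed lag threshold are assumptions, and Burrow’s own lag evaluation works differently from this simple per-sample check.

```python
from typing import List


def allowed_lag_minutes(slo_target: float, window_days: int) -> float:
    """Minutes within the window during which the consumer may lag."""
    total_minutes = window_days * 24 * 60
    return total_minutes * (1 - slo_target)


def slo_compliance(lag_samples: List[int], max_lag: int = 0) -> float:
    """Fraction of samples in which the consumer lag was within max_lag.

    Each sample is assumed to be the number of unconsumed messages
    observed at a regular interval.
    """
    if not lag_samples:
        raise ValueError("at least one lag sample is required")
    within_target = sum(1 for lag in lag_samples if lag <= max_lag)
    return within_target / len(lag_samples)


def slo_met(lag_samples: List[int], slo_target: float = 0.9995) -> bool:
    """True if the observed compliance reaches the SLO target."""
    return slo_compliance(lag_samples) >= slo_target
```

Over a 30-day window, a 99.95% target leaves roughly 21.6 minutes in which the consumer is allowed to lag.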

Besides displaying application SLOs in monitoring dashboards that we check from time to time, we use Datadog notifications to get alerted whenever we’re not meeting these objectives. This helps us identify issues early and maintain high availability and performance.

Resilience

The characteristics of an event processing service introduce two main points of failure that we have to guard against:

1. Consumption of malformed events

The action taken to prevent failures in event consumption or deserialization is actually part of our broader event lifecycle best practices: We require events to be strictly backward compatible so that once a consumer is set up, it will never fail to consume them as a result of a change (intentional or not) in the producer. Additionally, we perform event schema validation on the producer end to ensure that an event is only produced if it conforms with its schema definition.
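Both safeguards can be illustrated with a small, library-free sketch. The schema format used here (field name mapped to an expected type and a required flag), the example schemas, and the compatibility rule are assumptions made for the example and only one possible interpretation of backward compatibility, not a description of the actual tooling.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical schema format: field name -> (expected type, is required).
Schema = Dict[str, Tuple[type, bool]]

BOOKING_COMPLETED_V1: Schema = {
    "booking_id": (str, True),
    "customer_id": (str, True),
}
# A later version that only adds an optional field.
BOOKING_COMPLETED_V2: Schema = {
    **BOOKING_COMPLETED_V1,
    "currency": (str, False),
}


def validation_errors(event: Dict[str, Any], schema: Schema) -> List[str]:
    """Producer-side check: list every way the event violates the schema."""
    errors = []
    for field, (expected_type, required) in schema.items():
        if field not in event:
            if required:
                errors.append(f"missing required field: {field}")
        elif not isinstance(event[field], expected_type):
            errors.append(f"wrong type for field: {field}")
    return errors


def is_backward_compatible(old: Schema, new: Schema) -> bool:
    """True if consumers written against `old` can still read `new` events.

    Rule assumed here: required fields must stay present and required,
    and no existing field may change its type. New fields are allowed.
    """
    for field, (old_type, old_required) in old.items():
        if field not in new:
            if old_required:
                return False
            continue
        new_type, new_required = new[field]
        if new_type is not old_type:
            return False
        if old_required and not new_required:
            return False
    return True
```

A producer would publish an event only when `validation_errors` returns an empty list, and a schema change would be accepted only when `is_backward_compatible` holds against the previous version.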

2. Event processing failures

Suppose there’s a failure in the event processing (for instance, caused by an outage in the external API being called by the event processor). The event cannot simply be discarded; instead, the operation must be rolled back and retried. We leverage Kafka’s ability to set up retriable consumers to repeat the event processing until it is successful. We also apply an exponential backoff to our retry configuration to give any external services or failing components we depend upon enough time to recover.
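The retry behaviour can be sketched in plain Python, independent of any Kafka client. The function names and default values below are illustrative assumptions; in practice the retry and backoff settings would live in the consumer configuration rather than in hand-written code.

```python
import time
from typing import Callable, Iterator, TypeVar

T = TypeVar("T")


def backoff_delays(
    base_seconds: float, factor: float, max_seconds: float
) -> Iterator[float]:
    """Yield exponentially growing delays, capped at max_seconds."""
    delay = base_seconds
    while True:
        yield min(delay, max_seconds)
        delay *= factor


def process_with_retry(
    process: Callable[[], T],
    base_seconds: float = 1.0,
    factor: float = 2.0,
    max_seconds: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `process` until it succeeds, waiting longer after each failure.

    Catching every exception is a simplification; a real consumer would
    usually retry only errors that are known to be transient.
    """
    for delay in backoff_delays(base_seconds, factor, max_seconds):
        try:
            return process()
        except Exception:
            sleep(delay)
    raise RuntimeError("unreachable: backoff_delays never ends")
```

With the defaults above, the waits between attempts would be 1, 2, 4, 8 seconds and so on, up to the 60-second cap. The `sleep` parameter exists so the waiting can be replaced in tests.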

Final thoughts

Leveraging an event-driven architecture to build a CRM integration turned out to be a non-invasive addition to our marketplace architecture. By consuming existing domain events, we were able to unlock event-driven marketing through a completely independent system. We did so without integrating our CRM tool into every single domain service that owns relevant business logic, which avoided spreading the work across multiple teams. This naturally brought us the fundamental benefits of a distributed architecture, such as greater scalability, flexibility, and separation of concerns.

Deploying an event-driven marketing strategy in collaboration with marketing and content teams helped us significantly grow key metrics such as conversion rate and revenue. Most importantly, it is helping our customers on their journey to discover incredible experiences.

If you're interested in joining our engineering team, check out our open roles.
