How to Use Kafka to Integrate with an External System

Based in Zurich, Umberto D’Ovidio is a Software Engineer on our Fulfillment Team. In keeping with the team’s remit of building systems that support user experience, a recent project saw the introduction of Kafka as a buffer between GetYourGuide’s system and an external Customer Service System. From error handling to high-traffic tolerance, Umberto explains the what, why, and how of implementation.

Key takeaways:

Based in Zurich, Umberto D’Ovidio is a Software Engineer on our Fulfillment Team. In keeping with the team’s remit of building systems that support user experience, a recent project saw the introduction of Kafka as a buffer between the GetYourGuide application and an external Customer Service System. From error handling to high-traffic tolerance, Umberto explains the what, why, and how of implementation.

At GetYourGuide we want to change how people experience travel. We want to create unforgettable experiences, and when something doesn’t go as planned, we want to be there to solve these problems as quickly as possible. To help keep customers on the move, we dedicate extra care to making sure that their requests reach us, no matter what. One of our engineering principles is to use the best tools available for the job, and for this reason, we are using an external customer service solution to process customers’ contacts.

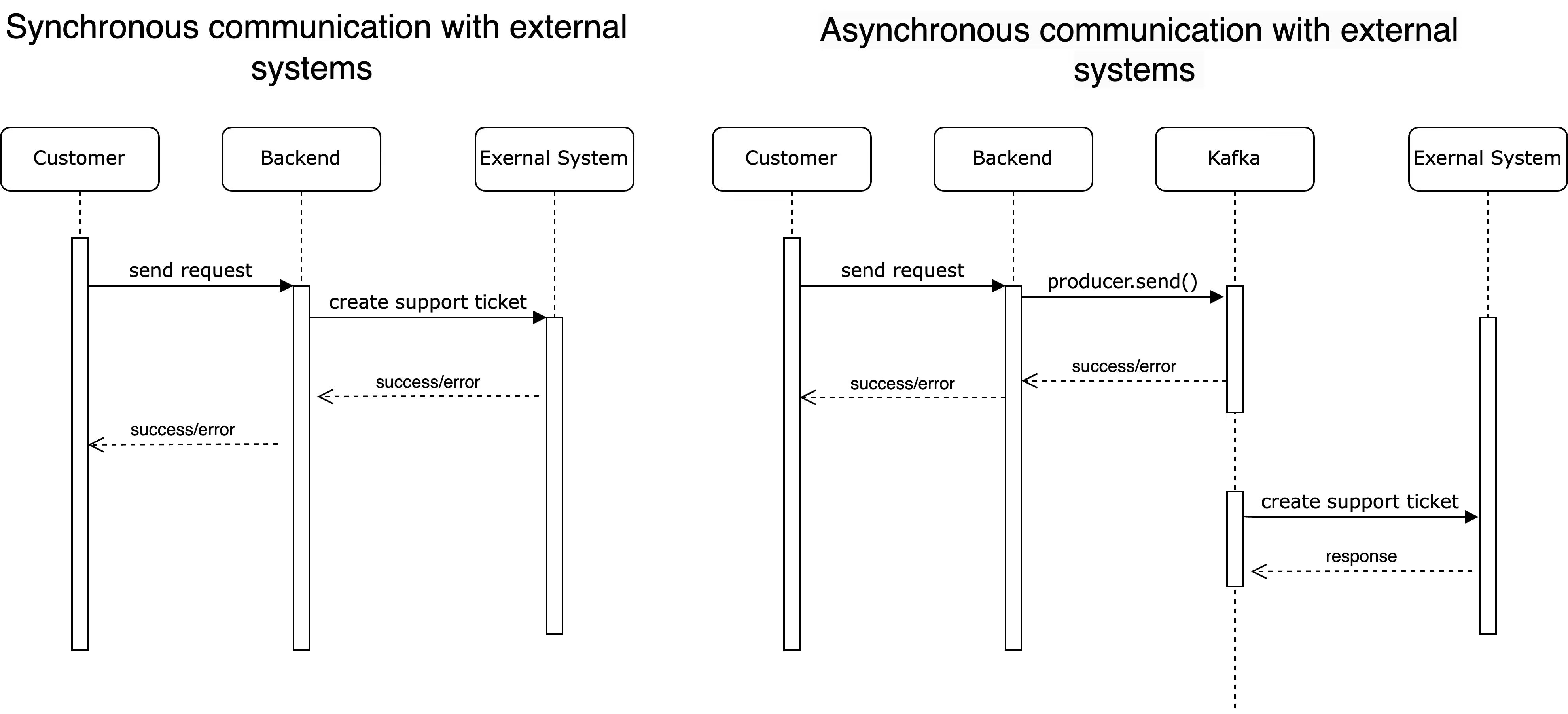

To prevent external system downtime from affecting our customers' experience, we are using Kafka as a buffer between our application and the external system. This ensures that any issues with the external system do not impact our customers' experience.

Why Kafka?

There are several benefits to using Kafka as a buffer between an application and an external system:

- Better performance: Writing to Kafka is usually faster than sending the request to an external service. Once we have successfully written to Kafka, we know that the message will eventually reach the external system, so we respond to the user request with a success message.

- Stores messages for later processing: If the external system is unavailable, Kafka can store messages for later processing once the system becomes available again. This prevents data loss and ensures that the external system is not overwhelmed with a large backlog of requests when it becomes available.

- Absorbs bursts of traffic: Kafka can act as a buffer that absorbs bursts of traffic, allowing the external system to process requests at a steady rate rather than being overwhelmed by sudden spikes in traffic. This is especially useful since it reduces the chances of running into rate limits.

- Provides a record of interactions: Kafka stores a (temporary) record of all messages that pass through it, which can be useful for debugging and monitoring purposes.

Details of the Implementation

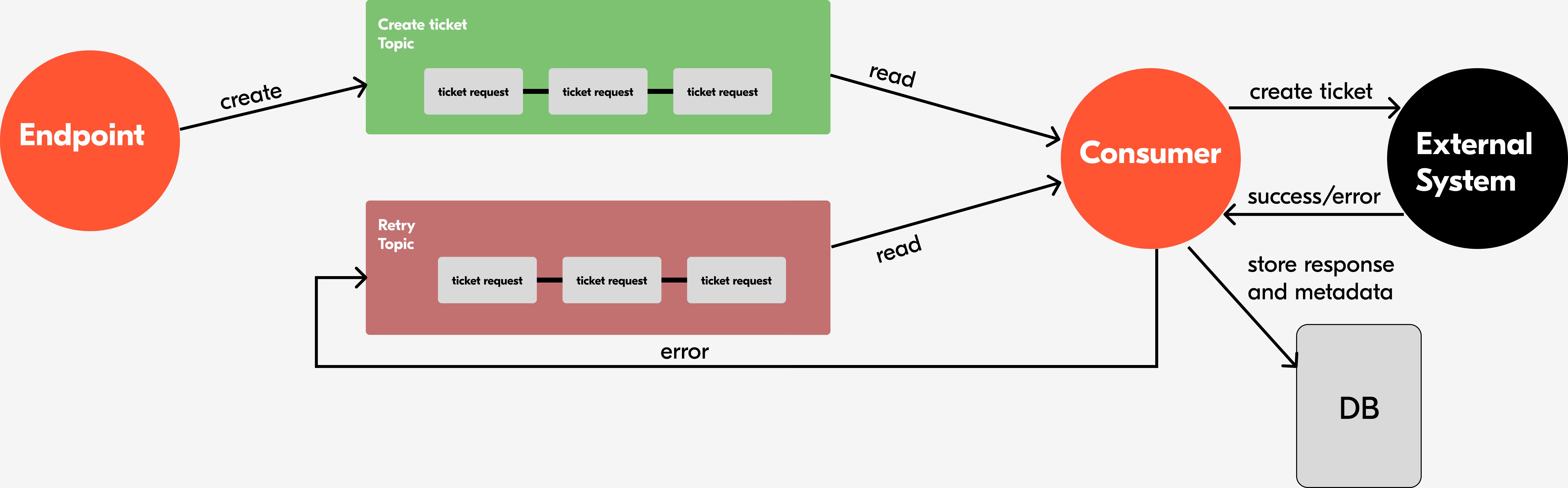

Our implementation uses Kafka Spring to communicate with Kafka, and comprises two important components.

The first validates users' requests and ensures they are saved correctly to Kafka. The second reads requests from the Kafka topic and submits them to the external system, performing retries in case requests are unsuccessful, and tracking the responses in a database table.

Sending Messages to a Kafka Topic

When it comes to saving messages to Kafka, there are a few considerations to take into account. As Kafka is a distributed system, it’s possible to configure the amount of acknowledgments required. This configuration has trade offs.

When acknowledgments are configured to one, the producer will wait for an acknowledgment message from the leader. If the leader acknowledges and suddenly crashes, the message will be lost.

A more conservative approach is to set ACKs to all. In this case, the producer will wait until all replicas have sent an acknowledgment message.

Another important configuration parameter is max.block.ms. This controls how long the producer.send() method can block. Its default setting is 60,000 ms, although for our use case, we have set it to a much shorter value. If producer.send() times out, we can still send the request synchronously to the external system, bypassing Kafka and safeguarding against a Kafka outage.

Another important consideration is that Kafka is not designed to handle large payloads. Since our customers’ requests can include attachments, we upload these to AWS S3 and store a reference to them in the payload, so that it can be downloaded again by the consumer.

Consuming Messages from a Kafka Topic

When consuming messages, we have a few constraints that influence our implementation.

First of all, we must avoid creating duplicate requests for our care agents. Secondly, a particular request may fail to be created because of validation errors. When this happens, we make sure to retry the request without blocking other requests in the queue, using a separate retry topic. Meanwhile, we get notified on Slack about the issue, and can investigate why the validation fails. If it’s a programming error (i.e., we are missing a parameter in the request), we can fix it. After a fix is deployed, the request will succeed.

We had very few instances of validation failures. Those that occurred were caused by a mismatch between our email validation logic and the external system’s validation logic. This is one of the trade-offs that we have to make when we move from a synchronous to an asynchronous flow.

Speaking of retries, we must avoid overloading the external system. Imagine what happens if the validation keeps failing for a particular message. The consumer will try to send the message over and over, which can possibly result in rate-limiting, thus preventing other requests from being sent.

Moreover, if a request fails, we must have visibility on why this is the case. These considerations led to the following design: We record every response from the external system in our database, including the created and updated timestamp. Before sending the requests, we check that it has not been sent before. This is because Kafka follows an “at least once delivery” semantic.

Before sending the request, we also check the last time it was sent. If this is a retry, we follow an exponential backoff policy. This ensures that bad requests won’t be retried too often and that legitimate requests that failed for a random issue will be retried quickly. We also check the number of retries of a particular request. If the request exceeds this configured amount of retries, we add it to a dead letter queue topic and give up trying.

Integration Testing for Asynchronous Systems

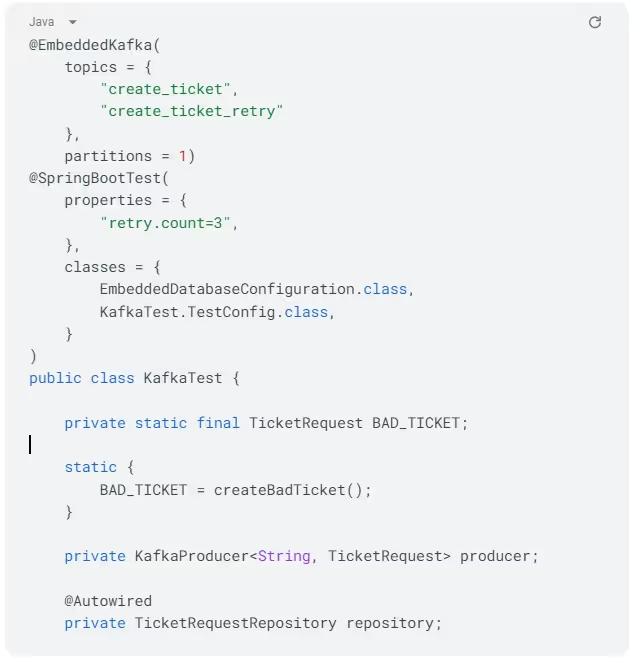

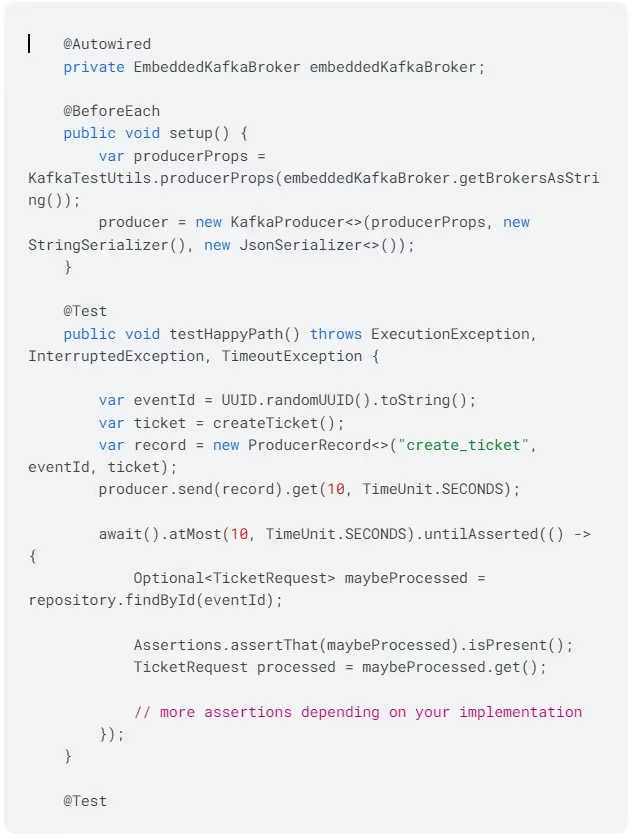

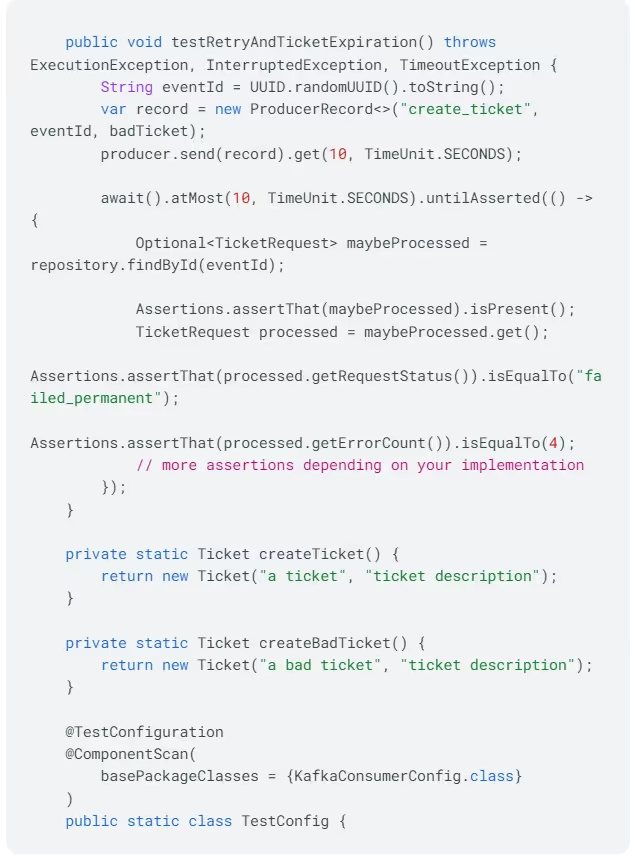

As we use Spring Boot, we heavily rely upon dependency injection. This aids testing by making it easy to mock parts of the system you are not interested in testing. We are using EmbeddedKafka to simulate Kafka behavior in our tests. Another approach would be to run Kafka in test containers, but the test would require more resources and be slower. Another very useful library that we use when testing asynchronous systems is Awaitility. The following is an example of a possible integration test on the consumer side:

We are setting up a producer in the setup function, which is run before each test. The happy path test sends a ticket to Kafka and expects that a ticket entry is created in our database within 10 seconds.

In the test retry test, we send a hardcoded ticket which will make our mock client throw an exception. This is testing our error-handling logic. We expect a ticket in status failed permanently to be created within 10 seconds, with an error count of four. This is because we have overridden the retry.count property to three. For illustration purposes, we can pretend that this property is used to evaluate how many times a ticket creation should be retried.

Observability

We are running a periodic query that fetches the ticket count per status of the last 24 hours. This information is published periodically to our observability system, where we have defined a monitor that will alert us on Slack if we have more than a predefined number of tickets in failed statuses. We can then check our database to understand the reason for the failure and intervene manually if this is required.

Rollout Strategy

To ensure that the rollout of the new flow worked as expected, we duplicated the original endpoint to send contact requests and implemented the asynchronous flow.

We initially sent only 1% of the traffic to the new solution. Once we fixed a few minor issues, we increased the traffic percentage gradually, until 100% of the traffic was going through the asynchronous flow.

Results

The following is a comparison of the median duration of contact requests between the synchronous and asynchronous flow. The asynchronous workflow is on average 72% faster than the synchronous one.

Conclusion

This implementation allows us to scale and improves the user experience. Since introducing it, we have improved the robustness of our system by being resilient over external system temporary failures. As a nice bonus, request time decreased significantly, and we now have more visibility on customer requests.

.JPG)

.JPG)