DBT & Databricks: Using job clusters for data pipelines

Explore how GetYourGuide's data engineering team leverages dbt and Databricks job clusters to optimize data pipelines in our latest blog post. Discover the step-by-step guide by Giovanni Corsetti and Shaurya Sood, detailing the integration of dbt with Databricks for cost-effective and resource-efficient solutions. Learn about the challenges, innovative workarounds, and the ultimate shift to a serverless warehouse for a smoother developer experience. Dive into the technical insights and practical benefits of using Docker images and the Databricks job run API to enhance your data transformation processes.

Key takeaways:

In this series, Giovanni Corsetti and Shaurya Sood, Data Engineers at Core Data Platform, delve into the integration of dbt with Databricks. This first part outlines a step-by-step guide to running dbt jobs on Databricks job clusters- a method the team initially explored to optimize data pipelines. While the approach was ultimately replaced, it remains a cost-effective and resource-efficient solution worth sharing.

{{divider}}

The beginning of the journey

At GetYourGuide we rely on a proprietary tool called Rivulus for SQL transformations in databricks job clusters. However, proprietary tools often come with challenges like maintenance complexity and steeper onboarding curves. That’s where dbt (data build tool) stood out. With its strong community support, built-in features like unit tests, pre-and post-hooks, and community-maintained packages, dbt offers significant advantages over Rivulus.

A common issue for those using Databricks with dbt is the lack of support for job clusters, which can push users towards more expensive alternatives. As mentioned on the official dbt website,

When you run a dbt (databricks) project, it generates SQL, which can run on All Purpose Clusters or SQL warehouses

The only support that Databricks provides in that regard is to submit dbt tasks in job clusters, however, job clusters are only used to trigger a dbt CLI command that calls a SQL warehouse under the hood.

Only a SQL warehouse or all-purpose compute can be used as the target for a dbt task. You cannot use job compute as a target for dbt.

Possible solution

In May 2024, Leo Schick proposed a workaround to run dbt on Databricks job clusters. I highly recommend reading it since most of his struggles and findings align with my experience trying to solve the same problem. His method utilized custom scripts and Databricks notebooks to trigger dbt-spark jobs, bypassing the dbt-databricks adapter.

Although this solution is functional, it did not meet the requirements we were looking for at GetYourGuide, such as

- Avoiding notebooks in production: notebooks are not version-controlled by default, thus making it difficult to follow more mature development practices such as unit tests and continuous integration.

- Authentication complexity: using notebooks for dbt runs requires cloning the dbt project repository for every run, adding multiple layers of complexity for authentication.

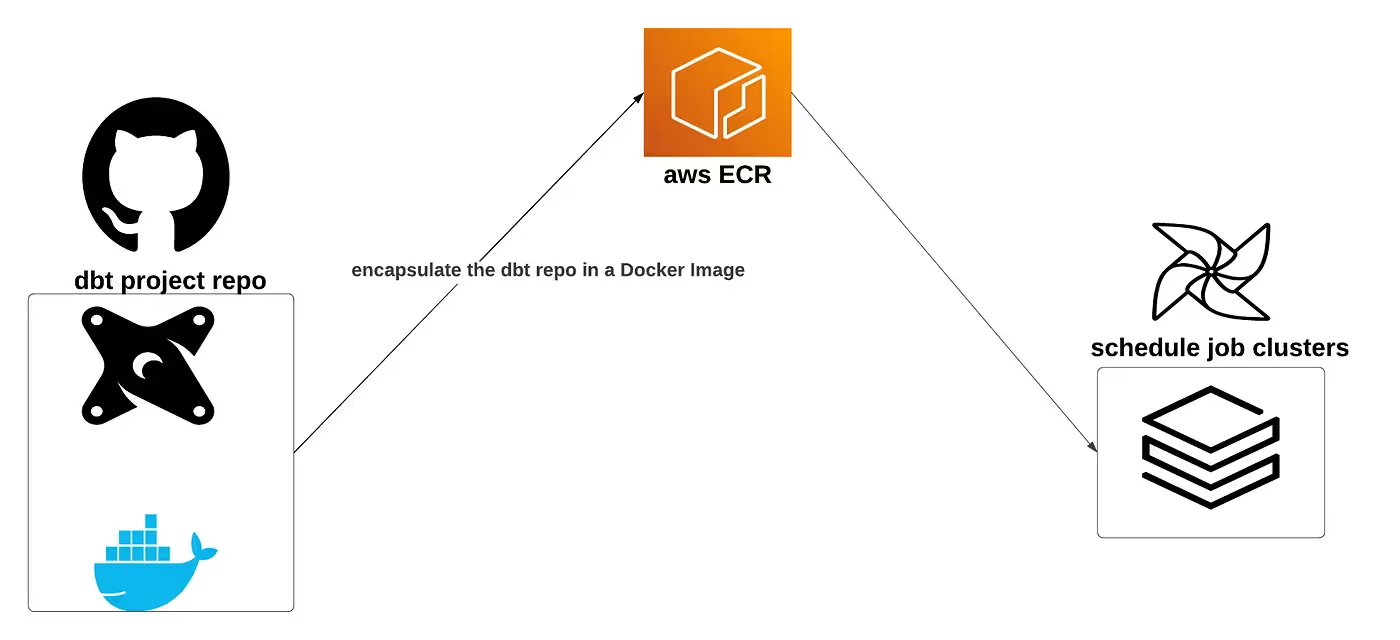

At GetYourGuide, we developed a solution leveraging Docker, encapsulating the dbt project along with a dbt-spark adapter’s profiles file. This approach involved creating a Dockerfile that bundled the necessary configurations and then pushing the resulting Docker image to a private container registry. Once in the registry, this image was deployed using theDatabricks job run API, enabling the seamless execution of dbt tasks within job clusters.

This solution avoids using notebooks and aligns with other similar workflows running at GetYourGuide. Although we did not adopt this approach due to the complexity of local development, it remains a cost-effective solution with many benefits. The implementation process is described in more detail in the next sections.

The following figure illustrates this process.

Creating the Dockerfile

Running dbt-databricks directly in job clusters is not supported at the time of writing. However, using dbt-spark is a viable alternative since Databricks operates on Spark under the hood.To enable this setup, the dbt project is encapsulated into a Dockerfile, which includes a dbt-spark profiles.yml file pointing to the current Spark session

Here’s how to achieve this:

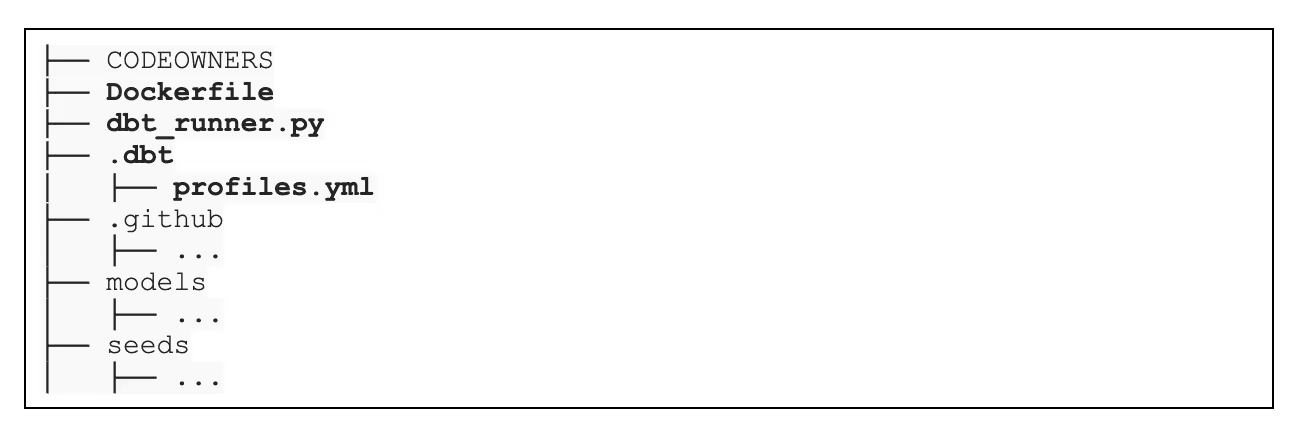

- create a .dbt/ directory at the root level of the dbt project

- place the dbt-spark profiles.yml file at .dbt/profiles.yml using the session method. The profiles.yml file is not created directly in the root directory because most dbt users place their personal profiles.yml there, and it would essentially be gitignored.

Now, with the .dbt/profiles.yml file in place, two additional things are required:

- a script to handle dbt arguments and execute them- Adapted from Leo Schick’s work, this python script, named dbt_runner.py, is placed at the root level of the dbt project. It performs the following actions:

- It accepts a list of parameters to run a dbt command, such as build, test, compile, etc.

- It removes the prefix /Workspace/file: from the working directory and sets the new path as the current working directory. This adjustment was tricky to figure out because, in essence, Databricks does not run the submitted script from its root path location.

- The docker image itself- the Dockerfile is built using databricksruntime/standard. The Dockerfile content created at GetYourGuide can be seen here. Your github repository should have a structure similar to the one below now.

Now you have:

- The profiles.yml to connect to the spark session

- The script to trigger dbt

- A docker image that encapsulates your dbt project to run it into databricks

It is safe to say that the ingredients are all on the table, but we still have to cook them in order to make the most of it.

Dealing with the deployment

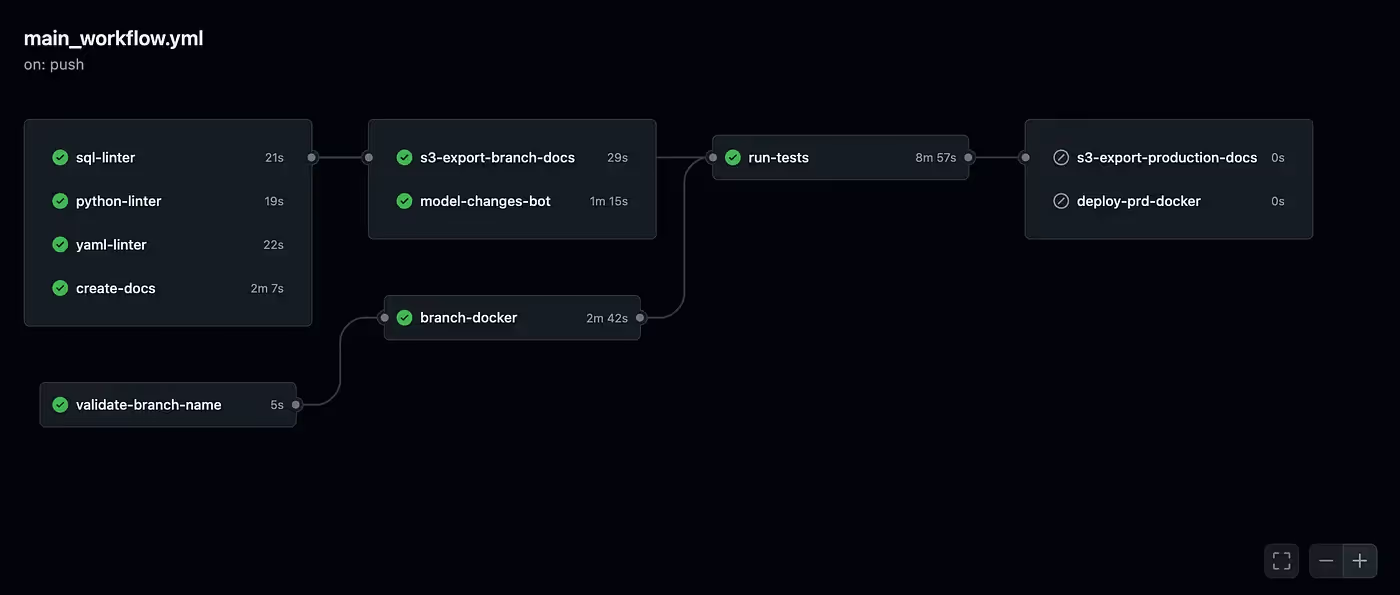

Deploying a dbt project to Databricks job clusters requires a well-structured CI/CD pipeline. The figure below illustrates a possible CI/CD pipeline for a dbt GitHub repository that will make use of job clusters:

- Generate artifacts early- the pipeline begins by generating dbt artifacts with dbt docs generate, to save time for subsequent actions like uploading to S3.

- Validate branch names- a validation step ensures that branch names do not contain special characters, avoiding issues with tagging ECR images using invalid characters.

- Run tests in Databricks- A POST request is sent to the databricks /jobs/run/submit endpoint triggering a test dbt command. Note that job cluster start-up times can take a few minutes!

To create the docker image, the branch name is used as a tag suffix. Since every push essentially generates a new image, this can lead to an excessive number of images in the container registry. To circumvent it, it is recommended to add a policy to clean ci-related images at regular intervals.

Once the Docker image is stored in the container registry, it can be used to trigger a dbt command in the CI or in airflow that will run a dbt transformation inside a job cluster. To facilitate this, a custom Python script,trigger_databricks.py, was developed.

The python script requires 5 key arguments

- databricks_instance: your databricks host

- token: databricks authentication token so we can send requests to databricks

- docker_image: the path where our docker image is located at

- run_name: name of the run in databricks

- dbt_command: the dbt command to run (for example, test, build, run, etc)

Although this solution was effective, at GetYourGuide we ultimately shifted to using a serverless warehouse. The decision was driven by the smoother developer’s experience.

Final Remarks

The integration between dbt with databricks is still not optimal for the users aiming to use job clusters. However, for teams prioritizing cost savings and resource control, the steps outlined in this guide provide a practical and efficient approach. By leveraging Docker images and the databricks job run API, it is possible to overcome limitations and harness the power of dbt within a databricks environment.

.JPG)

.jpg)