Final Steps and Key Learnings from Our Public API Migration

Discover the journey of GetYourGuide's API migration from a monolithic architecture to microservices. Learn about the challenges faced, innovative solutions developed, and key learnings from this transformation to enhance performance, scalability, and maintainability. Ideal for software engineers and tech enthusiasts looking to understand the intricacies of modern API infrastructure.

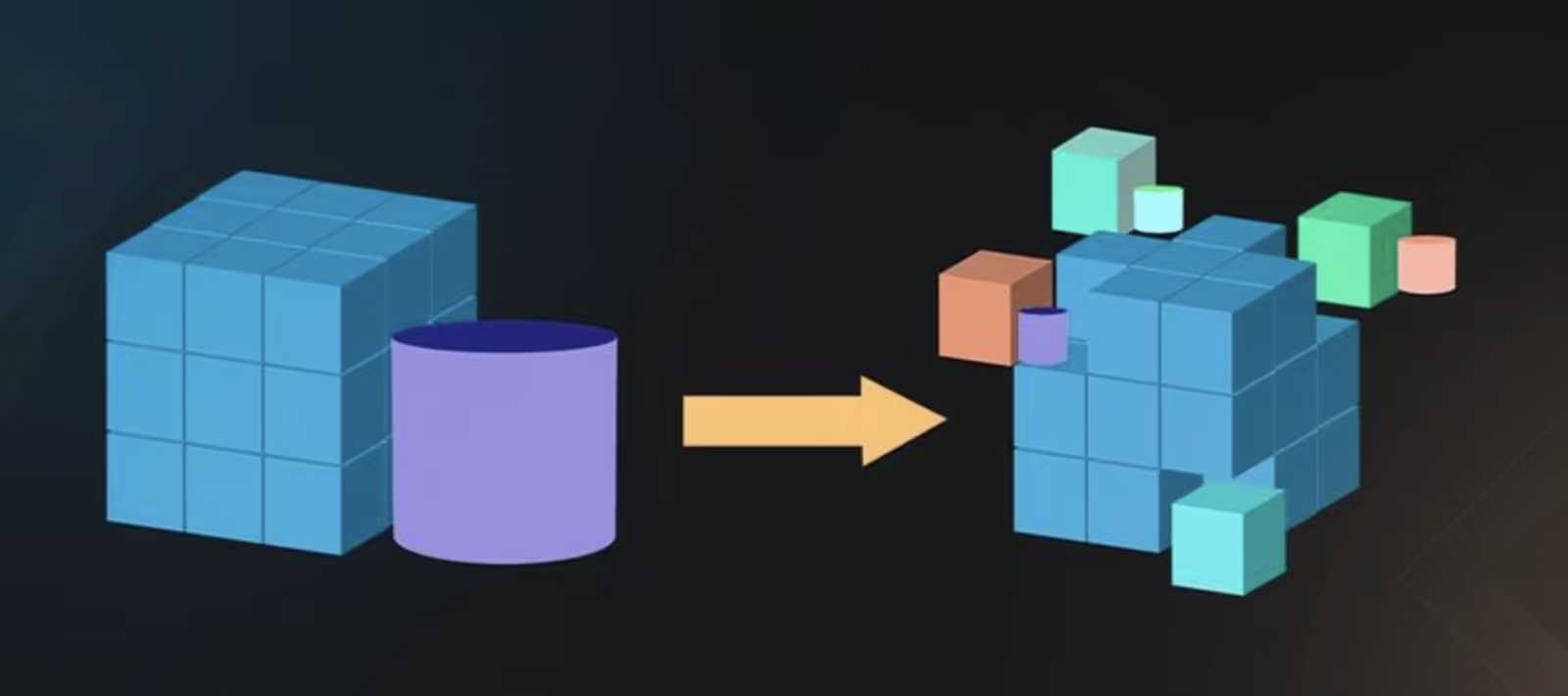

In today's fast-paced digital landscape, evolving our infrastructure to meet growing demands is crucial. At GetYourGuide, we recently undertook a significant project to migrate our public API from a monolithic architecture to a microservices-based approach. This transformation was not only a technical endeavor but also a strategic move to enhance performance, scalability, and maintainability.

I’m Luis Rosales Carrera, a Software Engineer at GetYourGuide on the Partner Tech team. In this article, I'll guide you through the final stages of our complex migration, focusing on the challenges we faced and the innovative solutions we implemented. I'll also delve into the significant benefits we achieved post-migration and share key insights and best practices to help others on similar paths.

From seamless deployment strategies to ensuring minimal downtime and optimizing our API's performance, our journey was filled with invaluable experiences and insights. Join me as I delve into the intricacies of our migration process and uncover the strategies that led to a successful transformation of our public API infrastructure.


The Migration

Before diving into the technical details, let me introduce GetYourGuide's public API. This API is leveraged by a diverse range of partners, from small businesses to large airline companies, to streamline the entire booking process. It enables our partners to create custom-tailored integrations, providing a seamless experience for their customers.

The migration of our public API from a monolithic architecture to a microservices-based approach was designed to be as transparent as possible for our partners, avoiding massive communications about downtimes and API changes which would have otherwise been resource-intensive.

To streamline the process, we divided the migration into different domains, each clearly defined by its purpose (e.g., categories, tours, suppliers, bookings). Each domain contained multiple endpoints to allow operations for retrieving and sending data at different granularities. We started with domains that were easier to migrate, such as categories, which only involved read-only operations. This strategy allowed us to leverage the knowledge gained from these initial migrations before tackling more complex domains like bookings or carts.

Before migrating each domain, we ensured we had a comprehensive plan in place. It was essential to have a clear roadmap of the next steps to avoid any mid-migration uncertainties. For example, while migrating the bookings domain, we realized the necessary data only resided within the monolith. There was no external access to this data, so we had to create a mechanism to expose it, enabling us to consume and transform the data to maintain a seamless user experience. A big shoutout to our colleagues in the Fintech teams who helped overcome these blockers and actively supported us in solving this problem and extracting the data.

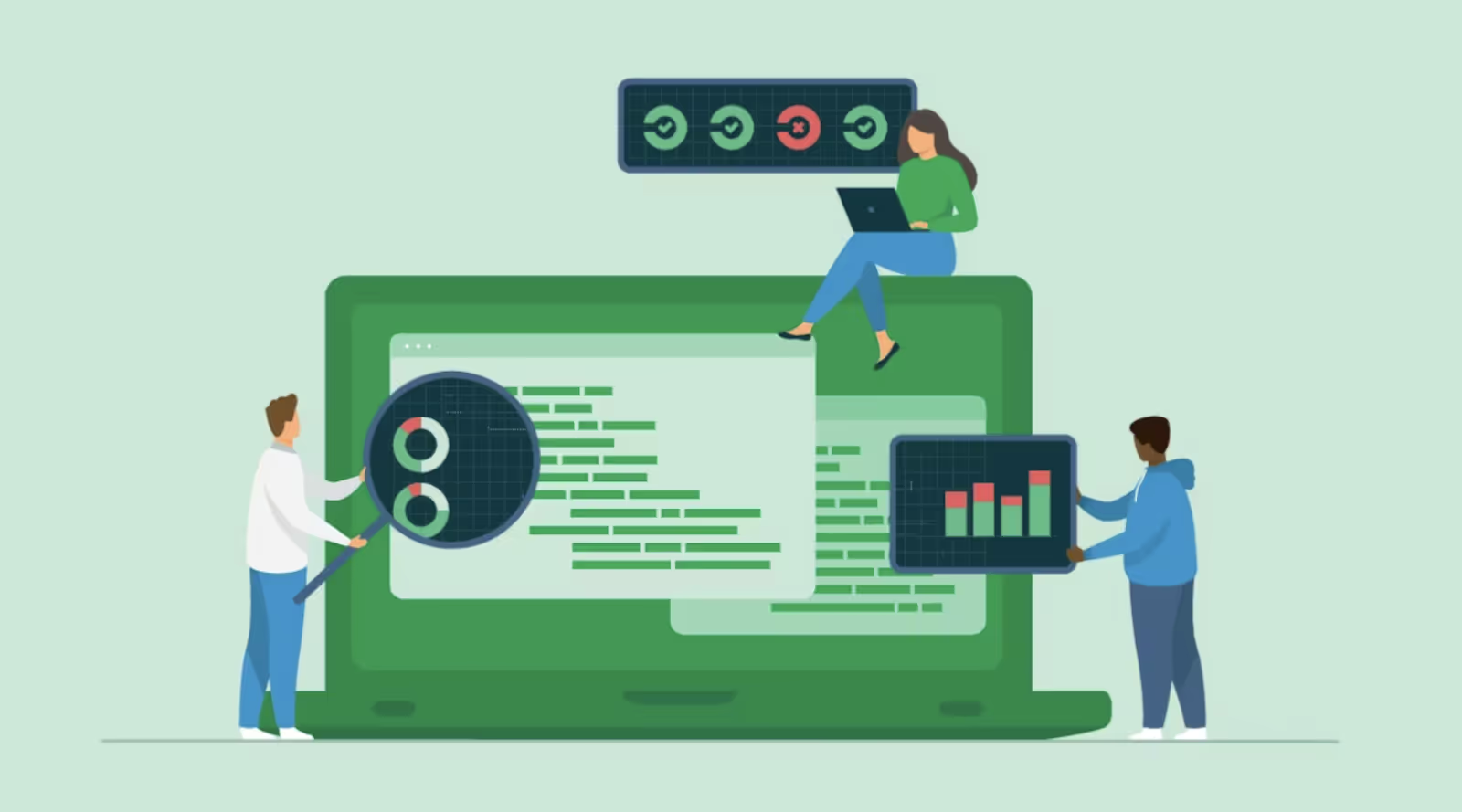

Another critical component of our successful migration was good coverage with end-to-end (e2e) tests. Early in the migration process, we created a comprehensive test suite to exercise crucial system paths. We invested time to ensure these e2e tests always ran against the latest migration snapshot, regardless of whether 20% or 50% of the migration was completed. The tests ran automatically, and we acted immediately on any inconsistency they detected. As a result, no major bugs surfaced during the rollout, and the investment in testing paid off significantly.
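One check that fits naturally into a suite like this is a parity comparison: send the same request to the monolith and to the new service, then diff the two payloads. The snippet below is a minimal illustrative sketch in Python, not the test suite described above; `diff_payloads` and the sample payloads are hypothetical.

```python
from typing import Any


def diff_payloads(old: Any, new: Any, path: str = "$") -> list[str]:
    """Return readable differences between two JSON-like payloads.

    `old` stands for the monolith response, `new` for the new service.
    """
    # A changed type (e.g. int vs. str) is reported before comparing values.
    if type(old) is not type(new):
        return [f"{path}: type {type(old).__name__} != {type(new).__name__}"]

    if isinstance(old, dict):
        diffs: list[str] = []
        for key in sorted(old.keys() | new.keys()):
            if key not in new:
                diffs.append(f"{path}.{key}: missing in new service")
            elif key not in old:
                diffs.append(f"{path}.{key}: unexpected in new service")
            else:
                diffs.extend(diff_payloads(old[key], new[key], f"{path}.{key}"))
        return diffs

    if isinstance(old, list):
        if len(old) != len(new):
            return [f"{path}: length {len(old)} != {len(new)}"]
        diffs = []
        for index, (left, right) in enumerate(zip(old, new)):
            diffs.extend(diff_payloads(left, right, f"{path}[{index}]"))
        return diffs

    # Scalars: plain equality.
    return [] if old == new else [f"{path}: {old!r} != {new!r}"]


if __name__ == "__main__":
    monolith_response = {"id": 1, "title": "City tour"}
    new_service_response = {"id": "1", "title": "City tour"}
    for line in diff_payloads(monolith_response, new_service_response):
        print(line)
```

An empty result means the two payloads match key for key and type for type, which is the property a transparent migration depends on.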

In short, the successful migration of our public API from a monolithic to a microservices-based architecture was achieved through meticulous planning, strategic domain-based segmentation, and proactive testing. By ensuring a comprehensive plan was in place for each domain and leveraging the invaluable support from our colleagues, we navigated through potential blockers seamlessly.

With the migration complete, let's delve into the key challenges we faced, the benefits we realized, the key learnings, and our future plans.

Key Challenges and How They Were Overcome

During the final stages of our migration, we encountered unexpected challenges and complex problems that required innovative solutions to ensure a seamless transition for our partners.

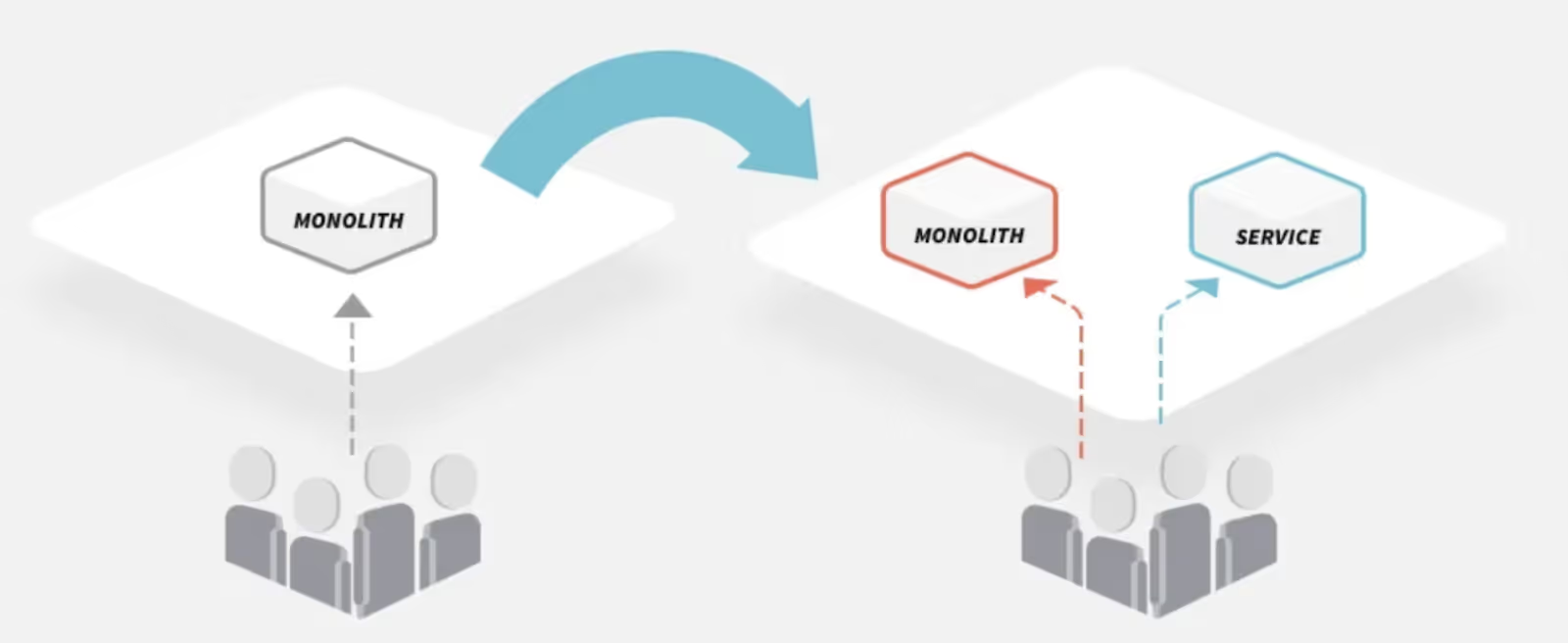

Load Balancing the Traffic

Since our plan from the beginning was to keep this migration transparent for our users, we needed to find a way to keep the traffic flowing without disruptions while extracting functionality from the monolith into a separate service. We decided that this traffic split needed to happen at a top level, where we had enough context to determine whether traffic should go to the monolith or to the new service. Of course, both systems had to have exactly the same functionality so our users wouldn’t notice any discrepancies.

Thanks to our infrastructure teams, we had the ability to control the network flow at this level. Using Istio and its API, we defined Virtual Services to set the traffic routing rules. This setup gave us a lot of confidence when releasing migrated functionality since we had total control over the network flow. We could start and stop the outgoing flow to the new service if we detected any bugs, and even define the percentage of traffic each service could receive. This was particularly useful for high-traffic endpoints, as we could run our migration with, for example, 1% of traffic going to the new service, monitor the endpoint behavior in real time, and minimize disruptions if any issues arose.
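To make the idea of a percentage-based split concrete, here is a small simulation. Istio applies such weights declaratively in the Virtual Service routing rules; this Python sketch only mimics the effect, and `pick_destination`, `simulate`, and the backend names are made up for illustration.

```python
import random


def pick_destination(new_service_weight: int, rng: random.Random) -> str:
    """Pick the backend for one request.

    `new_service_weight` is the percentage (0-100) of traffic that should
    reach the new service; the rest stays on the monolith.
    """
    if not 0 <= new_service_weight <= 100:
        raise ValueError("weight must be between 0 and 100")
    # randrange(100) yields 0..99, so weight 0 never selects the new
    # service and weight 100 always does.
    if rng.randrange(100) < new_service_weight:
        return "new-service"
    return "monolith"


def simulate(new_service_weight: int, requests: int, seed: int = 0) -> dict[str, int]:
    """Count how many of `requests` land on each backend."""
    rng = random.Random(seed)
    counts = {"monolith": 0, "new-service": 0}
    for _ in range(requests):
        counts[pick_destination(new_service_weight, rng)] += 1
    return counts


if __name__ == "__main__":
    # Roughly 1% of requests should reach the new service.
    print(simulate(new_service_weight=1, requests=100_000))
```

Setting the weight back to 0 corresponds to stopping the flow to the new service, and raising it step by step corresponds to a gradual rollout.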

Handling Legacy Functionality

During this migration, we faced a case where, instead of rewriting the functionality in the new service, we first analyzed whether it made sense to migrate it. We examined the number of active users and the revenue generated by this functionality. Thanks to this analysis, we discovered that we could save time and resources by not migrating this functionality.

However, existing contracts with our partners meant we couldn't drop support overnight, so the functionality had to stay alive a bit longer. To keep the migration moving, we needed a way to migrate the endpoint that exposed this functionality without migrating the deprecated part itself, since investing time in something that was about to be removed made little sense.

How did we overcome this? For this particular scenario, we configured our new service as a proxy. This allowed us to proxy incoming requests for the deprecated functionality to the monolith while continuing to serve the main functionality in the new service.

With this setup in place, we had total control of the proxy. Once the deadline for supporting this functionality passes, we can simply remove the proxy and keep serving the main functionality from our new service.
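The proxy idea can be sketched in a few lines. This is a simplified Python illustration under stated assumptions, not the production setup: `Request`, `make_handler`, the `feature` field, and both backend functions are hypothetical stand-ins.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet


@dataclass(frozen=True)
class Request:
    path: str
    feature: str  # hypothetical marker for the functionality being requested


Handler = Callable[[Request], str]


def make_handler(
    serve_locally: Handler,
    forward_to_monolith: Handler,
    deprecated_features: FrozenSet[str],
) -> Handler:
    """Build a handler that proxies deprecated functionality to the monolith."""

    def handle(request: Request) -> str:
        if request.feature in deprecated_features:
            # Not migrated on purpose: pass the request through unchanged.
            return forward_to_monolith(request)
        return serve_locally(request)

    return handle


# Stand-ins for the two backends; a real proxy would make an HTTP call.
def serve_locally(request: Request) -> str:
    return f"new-service handled {request.feature}"


def forward_to_monolith(request: Request) -> str:
    return f"monolith handled {request.feature}"


if __name__ == "__main__":
    handler = make_handler(
        serve_locally, forward_to_monolith, frozenset({"legacy-feature"})
    )
    print(handler(Request("/example", "main-feature")))
    print(handler(Request("/example", "legacy-feature")))
```

Because the decision lives in one place, retiring the deprecated functionality later means deleting a single branch rather than touching the migrated code paths.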

Boosting Productivity through Code Generation

This project required meticulous attention to detail, with every delivered functionality reviewed by multiple team members and often involving different teams. With so many tasks at hand, the last thing we wanted was to send our users a payload with a wrong key or improperly validate their payload due to a type error.

To minimize human-factor mistakes, we used tools such as OpenAPI Generator and MapStruct to auto-generate as much code as possible. By using generated API clients and code-generated mappers for transfer objects, we saved a lot of time and reduced human error. This approach not only minimized mistakes but also eliminated the need to write boilerplate code.

These were some of the innovative solutions that helped us tackle complex challenges and ensured a smooth and efficient migration process. In the next section, we’ll dive into the key learnings from this project.

Key Learnings

In this section, we will discuss the key learnings from this project, which not only facilitated our migration but also enhanced our overall strategy and efficiency.

Start Small

One of the key strategies we adopted during our migration was to start small. Instead of diving headfirst into migrating complex domains, we began with those that were easier to handle. This approach allowed us to gain valuable insights, set goals and build confidence in our migration process before tackling more complicated domains like the checkout flow.

Starting with simpler domains had several advantages. First, it enabled us to test our migration framework in a low-risk environment. Any issues we encountered were easier to address and didn’t significantly impact our users. We could refine our processes and strategies, learning from each small migration and applying those lessons to the next.

Additionally, migrating simpler domains first helped us to incrementally build our team’s expertise and confidence. Each successful migration reinforced our understanding and capability, which was crucial when we moved on to more complex domains. This step-by-step approach ensured that we had a solid foundation before dealing with the intricacies of larger and more critical parts of the API.

By starting small, we could also validate our end-to-end testing suite and automated tests. This ensured that our tests were robust and reliable before applying them to higher-stakes migrations. Overall, beginning with less complex domains was a strategic move that paved the way for a smoother and more efficient migration process.

Avoiding Migration of Legacy Functionality

In most cases, we migrated functionality 1:1 so our users would see the same input and output before and after the migration. However, there were instances where we could improve existing functionality.

For example, there was a case when our monolith was still consuming from deprecated sources. We used this migration as an opportunity to stop consuming from old sources and start consuming from up-to-date data. This investment in a longer-lasting API helped us avoid future migrations, especially since teams responsible for deprecated functionality might be planning to sunset those parts of their system.

We also carefully considered whether each piece of functionality was worth migrating for the long term. For instance, while migrating the checkout functionality, we realized that one of our payment methods wasn’t generating enough revenue to justify the migration effort. Rather than migrating this functionality, we chose to sunset it and provide our users with an alternative, ensuring they could continue operating with us seamlessly while avoiding the time-consuming process of migrating a low-impact feature.

Think twice before embarking on a migration; you probably don’t need it.

Real-Time Monitoring and Adaptive Adjustments

Continuous monitoring and the ability to adjust quickly were critical to the success of our migration. From the outset, we knew that even the best-laid plans could face unexpected challenges. Hence, having a robust monitoring system and the flexibility to make real-time adjustments was paramount.

We set up comprehensive monitoring tools to keep a close eye on the performance and stability of both the monolith and the new microservices. This included tracking key metrics like response times, error rates, and traffic patterns.
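As a rough illustration of how such metrics can be turned into a health signal, the Python sketch below computes an error rate and a nearest-rank p95 latency from a batch of request samples. The `Sample` type, the function names, and both thresholds are assumptions chosen for the example, not values from our setup.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Sample:
    latency_ms: float
    status: int  # HTTP status code of the response


def error_rate(samples: Sequence[Sample]) -> float:
    """Share of responses with a 5xx status."""
    if not samples:
        return 0.0
    return sum(1 for s in samples if s.status >= 500) / len(samples)


def percentile(values: Sequence[float], pct: int) -> float:
    """Nearest-rank percentile for an integer `pct` between 1 and 100."""
    if not values:
        raise ValueError("no values")
    if not 1 <= pct <= 100:
        raise ValueError("pct must be between 1 and 100")
    ordered = sorted(values)
    rank = (pct * len(ordered) + 99) // 100  # integer ceiling of pct% of n
    return ordered[rank - 1]


def is_healthy(
    samples: Sequence[Sample],
    max_error_rate: float = 0.01,  # assumed threshold
    max_p95_ms: float = 500.0,     # assumed threshold
) -> bool:
    """True when both error rate and p95 latency are within the thresholds."""
    if not samples:
        return True
    p95 = percentile([s.latency_ms for s in samples], 95)
    return error_rate(samples) <= max_error_rate and p95 <= max_p95_ms
```

A check like this can run separately for the monolith and the new service, so a regression in either one stands out as soon as traffic shifts.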

How did this help us? In one instance, after migrating an endpoint, we noticed latency issues once we switched all traffic to the new service. Upon further analysis, we discovered that the upstream service from which we were pulling data did not have an SQL query optimized for our use case. By working with the team that owned that service, we got the issue fixed at its source. Without a proper monitoring system in place, we would likely have learned about issues like this only through user feedback.

Effective monitoring also proved invaluable when we detected performance issues in an endpoint that aggregated data from multiple upstream services to construct the activity entity. By analyzing the behavior using a flame graph, we identified an inefficiency: part of the data was being pulled sequentially, one request after another, even though the requests did not depend on each other's results. Recognizing this, we parallelized the independent requests, significantly improving response times. This optimization greatly enhanced the user experience when accessing our catalog of activities.
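The difference between the two approaches can be shown with a short Python sketch using `asyncio`. It is an illustration only: the `fetch` helper simulates an upstream call with a sleep, and the upstream names (`details`, `prices`, `reviews`) are invented for the example.

```python
import asyncio
import time
from typing import Awaitable, Callable


async def fetch(name: str, delay: float) -> dict:
    """Stand-in for a call to an upstream service."""
    await asyncio.sleep(delay)  # simulates network latency
    return {name: f"{name}-data"}


async def build_activity_sequential(delay: float = 0.05) -> dict:
    # Each call waits for the previous one, so latencies add up.
    details = await fetch("details", delay)
    prices = await fetch("prices", delay)
    reviews = await fetch("reviews", delay)
    return {**details, **prices, **reviews}


async def build_activity_parallel(delay: float = 0.05) -> dict:
    # The calls are independent, so they can be awaited together;
    # total latency is roughly that of the slowest call.
    details, prices, reviews = await asyncio.gather(
        fetch("details", delay),
        fetch("prices", delay),
        fetch("reviews", delay),
    )
    return {**details, **prices, **reviews}


def timed(build: Callable[[], Awaitable[dict]]) -> tuple[dict, float]:
    """Run a coroutine function and return its result and elapsed seconds."""
    start = time.perf_counter()
    result = asyncio.run(build())
    return result, time.perf_counter() - start


if __name__ == "__main__":
    _, sequential_seconds = timed(build_activity_sequential)
    _, parallel_seconds = timed(build_activity_parallel)
    print(f"sequential: {sequential_seconds:.3f}s, parallel: {parallel_seconds:.3f}s")
```

Both functions return the same entity; only the waiting pattern changes, which is why this kind of optimization is safe whenever the requests have no data dependencies between them.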

In essence, the ability to monitor and adjust in real-time ensured that our migration process was resilient. It enabled us to solve problems as they arose, minimizing disruptions and maintaining a high standard of service for our users. This adaptability was a cornerstone of our successful migration strategy.

Conclusion

Migrating our public API from a monolithic architecture to a microservices-based approach was a significant and demanding project that required meticulous planning, innovative solutions, and seamless execution. At GetYourGuide, we tackled this transformation not just as a technical challenge, but as a strategic move to enhance our API’s performance, scalability, and maintainability.

Throughout this journey, we faced complex challenges, from load balancing traffic and handling legacy functionality to leveraging code generation tools and continuously monitoring our systems. Each obstacle taught us valuable lessons and drove us to develop creative solutions that ensured the success of our migration.

The benefits of this migration have been substantial. We've seen significant improvements in performance, scalability, and reliability, backed by specific metrics and positive feedback from our users and partners. This transformation has not only enhanced our current operations but has also set a solid foundation for future growth and innovation.

This architecture supports our long-term business goals and technical strategy, enabling us to introduce new features and services and to scale efficiently as demand grows.

Thank you for joining me on this deep dive into our migration process. I hope our story inspires and guides you in your own technical endeavors.
