From trees to transformers: adopting deep learning for activity ranking

Learn how GetYourGuide transformed activity ranking with deep learning. Follow our six-month journey from tree-based models to transformer architectures, including technical challenges, A/B testing strategies, and lessons learned while ranking 150,000+ travel experiences for millions of users.

At GetYourGuide, our mission is to help millions of travelers discover and book experiences they’ll never forget. With over 150,000 activities offered by more than 35,000 suppliers worldwide, we’re proud to be the leading marketplace for travel experiences. But with so many options, how do we make sure each traveler finds the perfect activity for their next adventure?

The answer lies in our activity ranking. Every time a traveler searches on our platform, our ranking system decides which activities appear at the top of the page. This is a high-stakes decision: limited screen space and attention mean that only the most relevant activities get noticed and booked. Getting ranking right is the difference between a magical trip and a missed opportunity.

Recently, our Activity Ranking Team undertook one of our most significant technical challenges yet: migrating our complex ranking system from tried-and-true tree-based models to state-of-the-art deep learning models. In this post, we’ll share the story of this transition — why we made the leap, how we approached it, and what we learned along the way.

How does machine learning shape the user journey?

Ranking is at the heart of the traveler’s experience when using the GetYourGuide app. It’s how we connect each user to the right activity, whether they’re searching for a wine tasting in Bordeaux or a snowshoe hike under the northern lights in Lapland. But finding that perfect match isn’t easy.

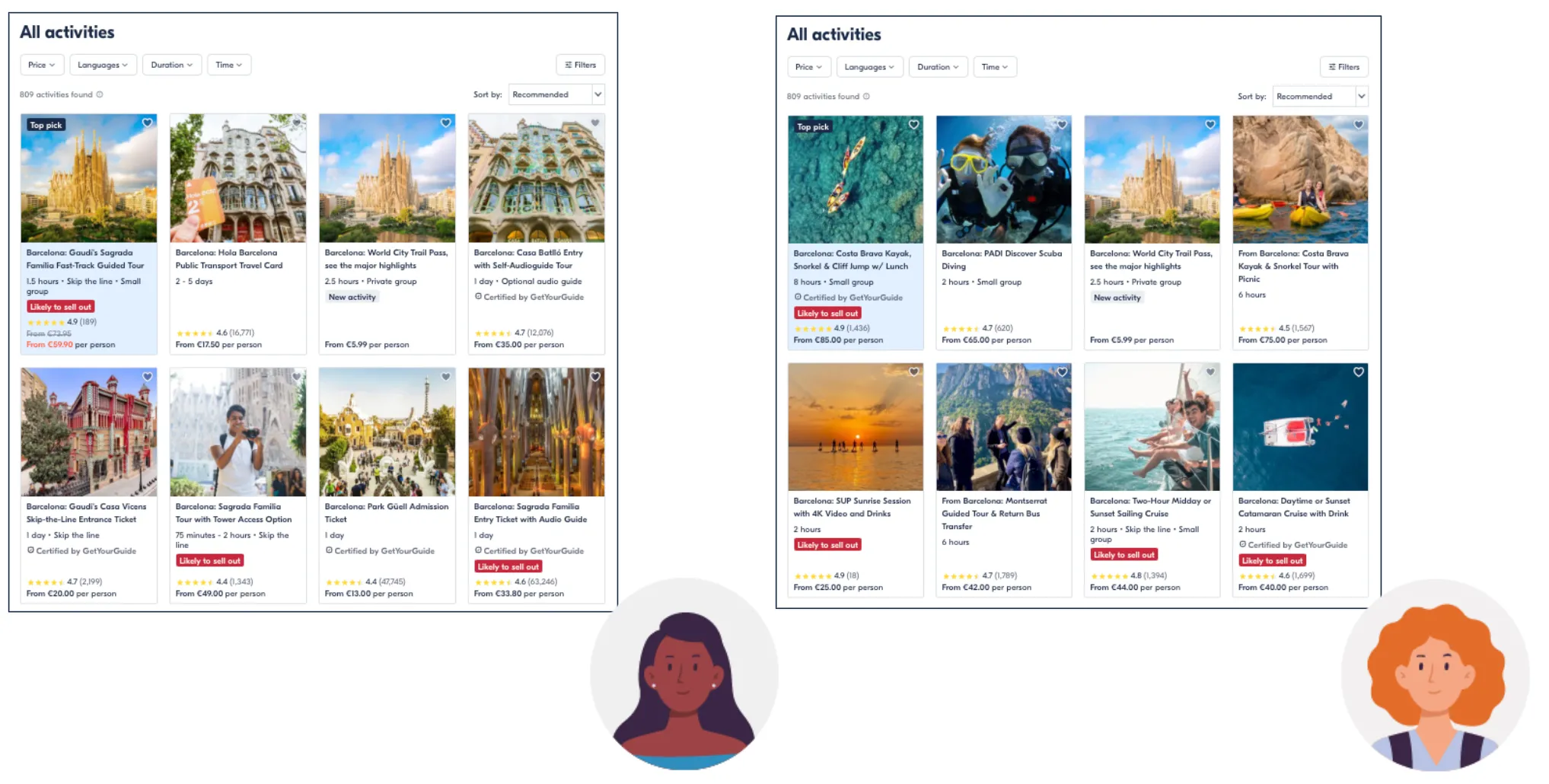

Our ranking system must balance seasonality, language preferences, business goals, and the unique interests of every user. For example, two people searching for activities in Barcelona might see completely different recommendations, each tailored to their own tastes and needs.

To tackle this challenge, we employ a machine learning approach known as Learning to Rank. Instead of relying on hand-crafted rules, our system learns directly from traveler behavior: what people view, click, and book.

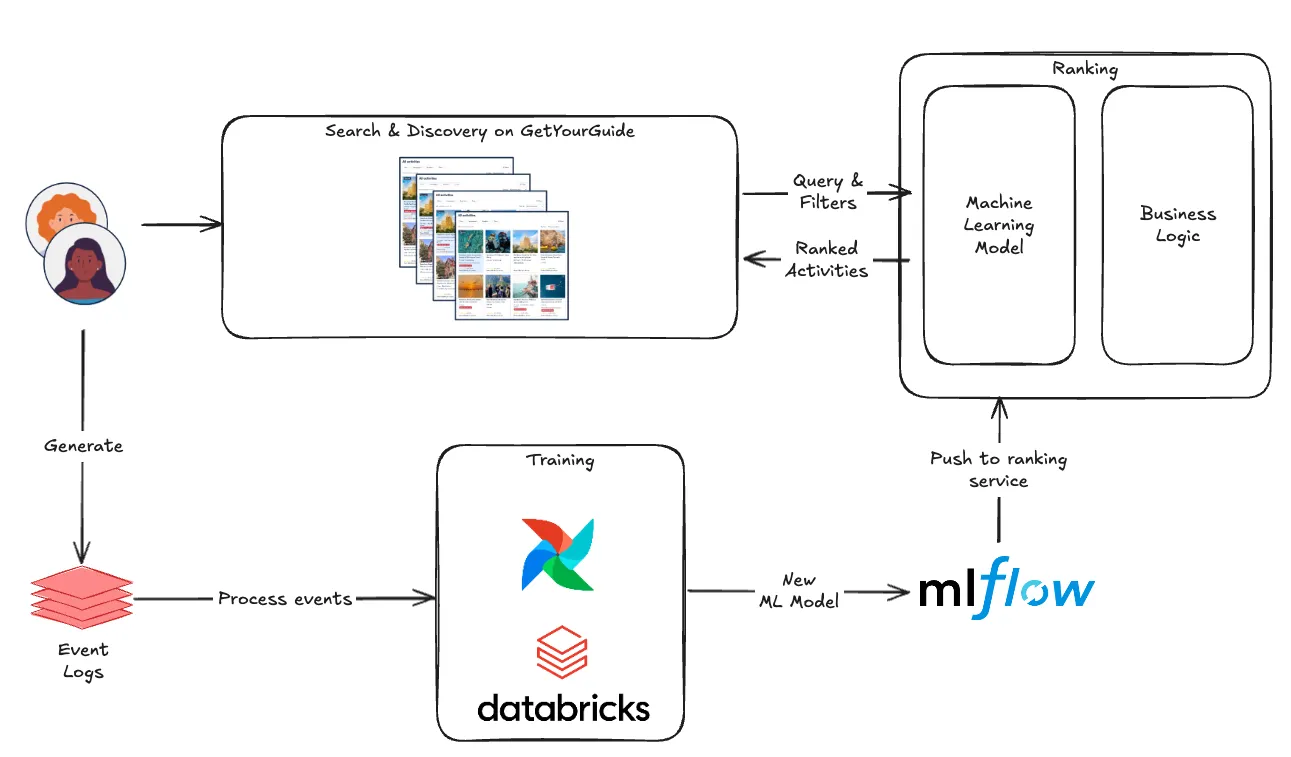

Every interaction on our platform becomes a subtle signal fed back into our ranking system, helping us refine our understanding of what “relevant” means for different users and situations. This creates a continuous feedback loop.

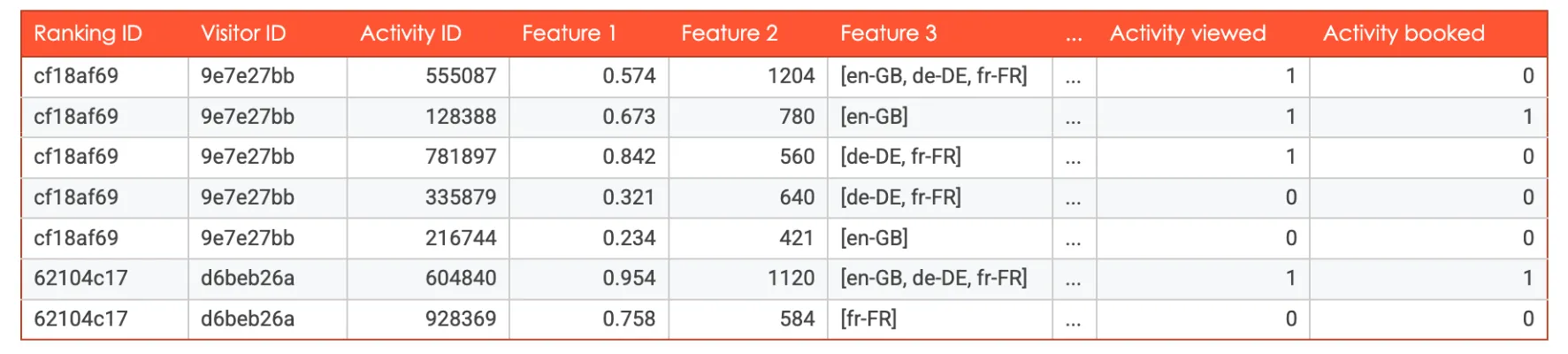

As travelers use our site, they generate a rich stream of data through their searches, views, clicks, and bookings. That data is then processed and used as implicit feedback to train and update our ranking models. For every user and activity in a historical search, we construct a feature vector comprising over 50 signals, capturing a wide range of information, from product attributes and user preferences to contextual details such as seasonality and language.
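To make the idea of implicit feedback concrete, here is a minimal sketch of turning one logged search impression into a graded label and a small feature vector. The `Impression` schema, the label grades (0 to 3), and the three example features are assumptions for illustration only; the real feature vector has over 50 signals, and this post does not describe the actual labeling scheme.

```python
from dataclasses import dataclass


@dataclass
class Impression:
    """One activity shown in one historical search (hypothetical schema)."""
    activity_id: int
    viewed: bool
    clicked: bool
    booked: bool
    price_eur: float
    avg_rating: float
    locale_matches_activity_language: bool


def implicit_label(imp: Impression) -> int:
    """Map the strongest observed interaction to a graded relevance label.

    The grades are an assumption: booking > click > view > no interaction.
    """
    if imp.booked:
        return 3
    if imp.clicked:
        return 2
    if imp.viewed:
        return 1
    return 0


def feature_vector(imp: Impression) -> list[float]:
    """Build a tiny illustrative feature vector (the real one has 50+ signals)."""
    return [
        imp.price_eur,
        imp.avg_rating,
        1.0 if imp.locale_matches_activity_language else 0.0,
    ]


example = Impression(
    activity_id=42, viewed=True, clicked=True, booked=False,
    price_eur=59.0, avg_rating=4.7, locale_matches_activity_language=True,
)
label = implicit_label(example)      # clicked but not booked
features = feature_vector(example)
```

Each (label, feature vector) pair, grouped by the search it came from, is the kind of training example a Learning to Rank model consumes.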

Once trained, our ranking models are deployed to production, where they improve relevance for millions of searches each day. New models are produced and deployed daily, while being carefully monitored through both offline metrics and live A/B tests, to ensure that offline improvements also translate into real impact for travelers and the business. This approach enables us to personalize search results at scale (read this blog for more details), helping each user discover the experiences most relevant to their unique interests and their destination.
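This post does not name the offline metrics involved. As an assumption, the sketch below uses NDCG@k, a common choice for Learning to Rank, to show how the quality of one ranked list can be scored against graded relevance labels.

```python
import math


def dcg_at_k(labels: list[float], k: int) -> float:
    """Discounted cumulative gain of labels listed in ranked order."""
    return sum(
        (2 ** rel - 1) / math.log2(position + 2)
        for position, rel in enumerate(labels[:k])
    )


def ndcg_at_k(labels_in_ranked_order: list[float], k: int) -> float:
    """NDCG@k: DCG of the model's order divided by DCG of the ideal order."""
    ideal = dcg_at_k(sorted(labels_in_ranked_order, reverse=True), k)
    if ideal == 0:
        return 0.0  # no relevant items in this search
    return dcg_at_k(labels_in_ranked_order, k) / ideal


# Labels of the activities in the order the model ranked them.
perfect = ndcg_at_k([3, 2, 1, 0], k=4)
booked_item_ranked_second = ndcg_at_k([0, 3], k=2)
```

A score of 1.0 means the model placed the activities in the best possible order for that search; pushing a booked activity down the list lowers the score.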

Why move to a deep learning model?

For years, our ranking relied on robust tree-based models, utilizing XGBoost within the Learning to Rank framework (read this blog to find out more). This approach served us well, but over time, we began to reach the limits of what these tree-based models could offer. The improvements in each A/B test and new model iteration became smaller and harder to achieve.

At the same time, we saw inspiring results from other industry leaders, such as Airbnb, Wayfair, and Etsy, who shared how deep learning has transformed their recommendations. Deep learning also promised much greater flexibility: the ability to design custom architectures, utilize advanced loss functions, and leverage new types of data, such as text, categorical, and even image data.

But we knew this wasn’t going to be an easy switch. Moving to deep learning meant rethinking our infrastructure, scaling up for multi-GPU training, optimizing resource usage, and even addressing practical challenges, such as much larger Docker image sizes due to PyTorch. The migration took over six months and pushed us to revisit everything, from our CI pipeline to model deployment.

Despite the challenges, each step in this process brought us closer to a more powerful and adaptable ranking system, one that is ready to meet the needs of our growing marketplace.

A phased experimentation strategy

Rather than trying to rebuild everything at once, we broke the transition into clear, manageable phases. This helped us stay focused, learn quickly, and minimize risk along the way.

Phase 1: Technical parity

Our first goal was simple: prove that a deep learning model could match the technical performance of our existing tree-based system. In this context, “technical performance” meant fully integrating the model into our service, deploying it successfully, and serving rankings with reasonable latency.

At this stage, ranking precision was not yet our main concern, so we started with a basic neural network architecture trained on just four features and tested it internally behind a feature toggle. Once we confirmed that the technical setup worked as intended and the model was serving results within our latency targets, we expanded it to use all available ranking model features. With this more complete model, we launched an A/B test with 50% of visitors. This incremental approach helped us build confidence in the new stack and ensured the deep learning system could stand toe-to-toe with our XGBoost model in real-world conditions.
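The rollout described above, an internal feature toggle followed by a 50% A/B split, can be sketched with deterministic hash bucketing. The function names, the experiment key `dl-ranking`, and the hashing scheme are hypothetical; the post does not describe the experimentation platform actually used.

```python
import hashlib


def bucket(visitor_id: str, experiment: str) -> float:
    """Deterministically map a visitor to a number in [0, 1)."""
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode()).hexdigest()
    return int(digest[:8], 16) / 2 ** 32


def choose_ranker(visitor_id: str, toggle_on: bool, treatment_share: float = 0.5) -> str:
    """Pick which model ranks this visitor's search results."""
    if not toggle_on:
        return "xgboost"  # toggle off: everyone stays on the existing model
    in_treatment = bucket(visitor_id, "dl-ranking") < treatment_share
    return "deep_model" if in_treatment else "xgboost"


assignment = choose_ranker("visitor-123", toggle_on=True)
```

Hashing the visitor ID keeps each visitor in the same variant across searches, which keeps the comparison between the two models clean.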

Phase 2: Business parity

While the basic neural network from Phase 1 met our latency SLOs, it still lagged behind in ranking precision and business metrics, meaning the relevance just wasn’t as strong for our end users. Our next focus was to match the business performance of our tree-based model, not just its technical requirements.

To do this, we introduced a self-attention architecture, which significantly improved our offline ranking scores. However, we noticed an unexpected side effect: user bounce rates increased, with more users ending their sessions without ever viewing any activities or making bookings. To address this, we expanded our training data to capture a broader range of user behaviors, especially from visitors who viewed but didn’t book an activity. After a few iterations of refining the model and data, we also achieved parity in our core business metrics, successfully clearing this second milestone.

Phase 3: Business wins

With the basics in place, our focus shifted to achieving real, measurable business impact. In this phase, we aimed not only to match the business performance of our XGBoost model but to exceed it. Ultimately, it took us more than four iterations of refining the core model architecture to deliver better results for our users and achieve significant business wins.

To improve the precision of our ranking model, we added features to capture the semantic similarity between activities and categories (think: “wine tasting in France” and “monk meditation in Japan”), introduced a more sophisticated Deep & Cross model architecture, and incorporated trainable embeddings in our model.
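One common way to express semantic similarity as a numeric feature is the cosine similarity between two text embeddings. The sketch below assumes that approach; the post does not say how the similarity is computed, and the vectors are made up for illustration.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors, or 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


# Made-up embeddings of an activity title and a category name.
activity_embedding = [0.9, 0.1, 0.3]
category_embedding = [0.8, 0.2, 0.4]
similarity_feature = cosine_similarity(activity_embedding, category_embedding)
```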

We also significantly scaled up our dataset to provide the model with richer information. After several more rounds of experimentation, we observed a clear improvement in ranking metrics and, most importantly, a significant increase in business results, confirmed by a positive A/B test.

Our deep learning model architecture

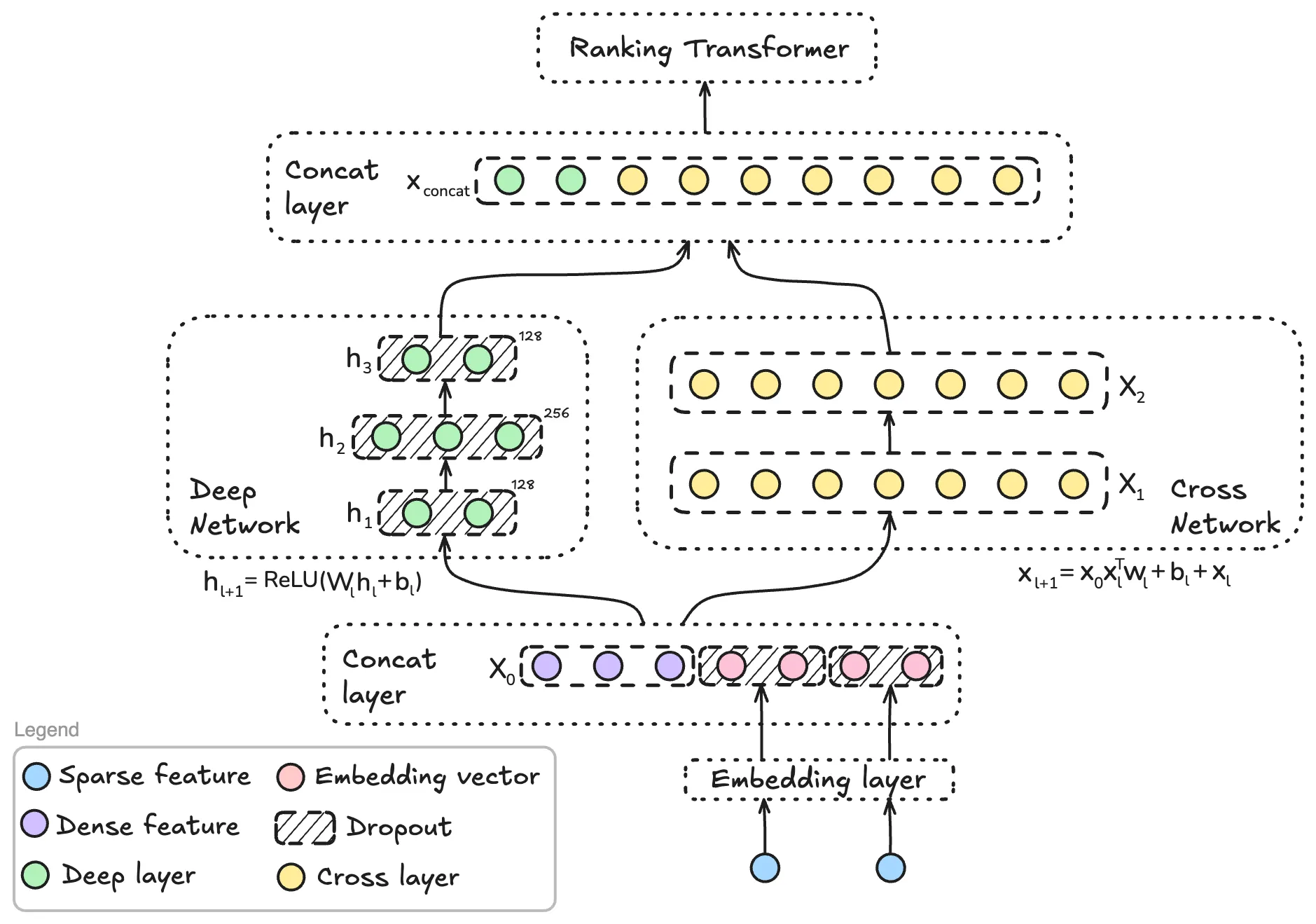

Ultimately, we developed a deep learning model that combines several powerful components, each playing a crucial role in determining how activities are ranked on our platform. The two main pillars of the architecture are the Deep & Cross Network and the Ranking Transformer.

Everything starts with the embedding layer. Here, sparse categorical features, like locale, activity ID, and location ID, are transformed into dense vector representations. This step enables the downstream neural network to handle a wide variety of inputs efficiently. One challenge we encountered was the tendency for the model to “memorize” unique entity IDs (especially activity IDs) during training via the learned embedding. To prevent overfitting and encourage generalization, we applied strong dropout regularization to these embeddings, helping the model focus on broader patterns rather than memorizing individual activities.
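A minimal, framework-free sketch of an embedding lookup with dropout follows. It assumes element-wise inverted dropout and an arbitrary rate of 0.5; the post only says that "strong" dropout was applied, so the exact variant and rate are assumptions, and the `EmbeddingWithDropout` class is hypothetical. In a PyTorch model this would typically be an embedding layer followed by a dropout layer.

```python
import random


class EmbeddingWithDropout:
    """Lookup table from sparse IDs to dense vectors, with inverted dropout."""

    def __init__(self, num_ids: int, dim: int, dropout: float, seed: int = 0):
        if not 0.0 <= dropout < 1.0:
            raise ValueError("dropout must be in [0, 1)")
        self.dropout = dropout
        self.rng = random.Random(seed)
        # Small random initial values; during training these would be learned.
        self.table = [
            [self.rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(num_ids)
        ]

    def lookup(self, entity_id: int, training: bool) -> list[float]:
        vector = self.table[entity_id]
        if not training or self.dropout == 0.0:
            return list(vector)  # inference: use the embedding unchanged
        keep = 1.0 - self.dropout
        # Zero each component with probability `dropout` and rescale the rest,
        # so the expected value matches what the model sees at inference time.
        return [v / keep if self.rng.random() < keep else 0.0 for v in vector]


activity_embeddings = EmbeddingWithDropout(num_ids=5, dim=4, dropout=0.5)
train_vector = activity_embeddings.lookup(3, training=True)
serve_vector = activity_embeddings.lookup(3, training=False)
```

Because parts of the ID embedding are randomly hidden during training, the model cannot rely on it alone and has to draw on the other features as well.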

After embeddings are produced, the data flows through two parallel subnetworks: the deep network and the cross network. The deep network is a classic multi-layer neural network, designed to learn complex, non-linear relationships from the input features. It’s especially good at picking up subtle patterns and interactions that aren’t easy to hand-code. The cross network runs in parallel and is specialized for capturing explicit feature interactions. While deep networks excel at modeling nonlinearities, they can struggle to represent simple, direct feature combinations efficiently. The cross network addresses this by explicitly learning feature crosses, and its ability to efficiently model these direct combinations gives the model an extra edge in relevance. For example, the cross network can identify that “category = cooking class” combined with “traveler type = family” is a winning combination for specific users.
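To show what "explicitly learning feature crosses" means, here is a sketch of a single cross layer in the form used by the original Deep & Cross Network formulation: `x_next = x0 * (x . w) + b + x`. Whether the production model uses this variant or a later one is not stated in this post, so treat the formula and the toy weights as assumptions.

```python
def cross_layer(
    x0: list[float], x: list[float], weight: list[float], bias: list[float]
) -> list[float]:
    """One cross layer: x_next = x0 * (x . weight) + bias + x.

    x0 is the original input to the cross network, x is the previous layer's
    output. Multiplying x0 by a projection of x creates products of input
    features; the `+ x` term is a residual connection.
    """
    scalar = sum(x_i * w_i for x_i, w_i in zip(x, weight))
    return [x0_i * scalar + b_i + x_i for x0_i, b_i, x_i in zip(x0, bias, x)]


# Toy input with two features and hand-picked (not learned) parameters.
x0 = [1.0, 2.0]
weight = [0.5, -1.0]
bias = [0.0, 0.0]

layer1 = cross_layer(x0, x0, weight, bias)      # contains second-order terms
layer2 = cross_layer(x0, layer1, weight, bias)  # contains third-order terms
```

Each additional layer raises the highest order of feature interaction by one while adding only a weight vector and a bias vector, which is why this kind of network is cheap compared to a deep network of similar expressiveness for crosses.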

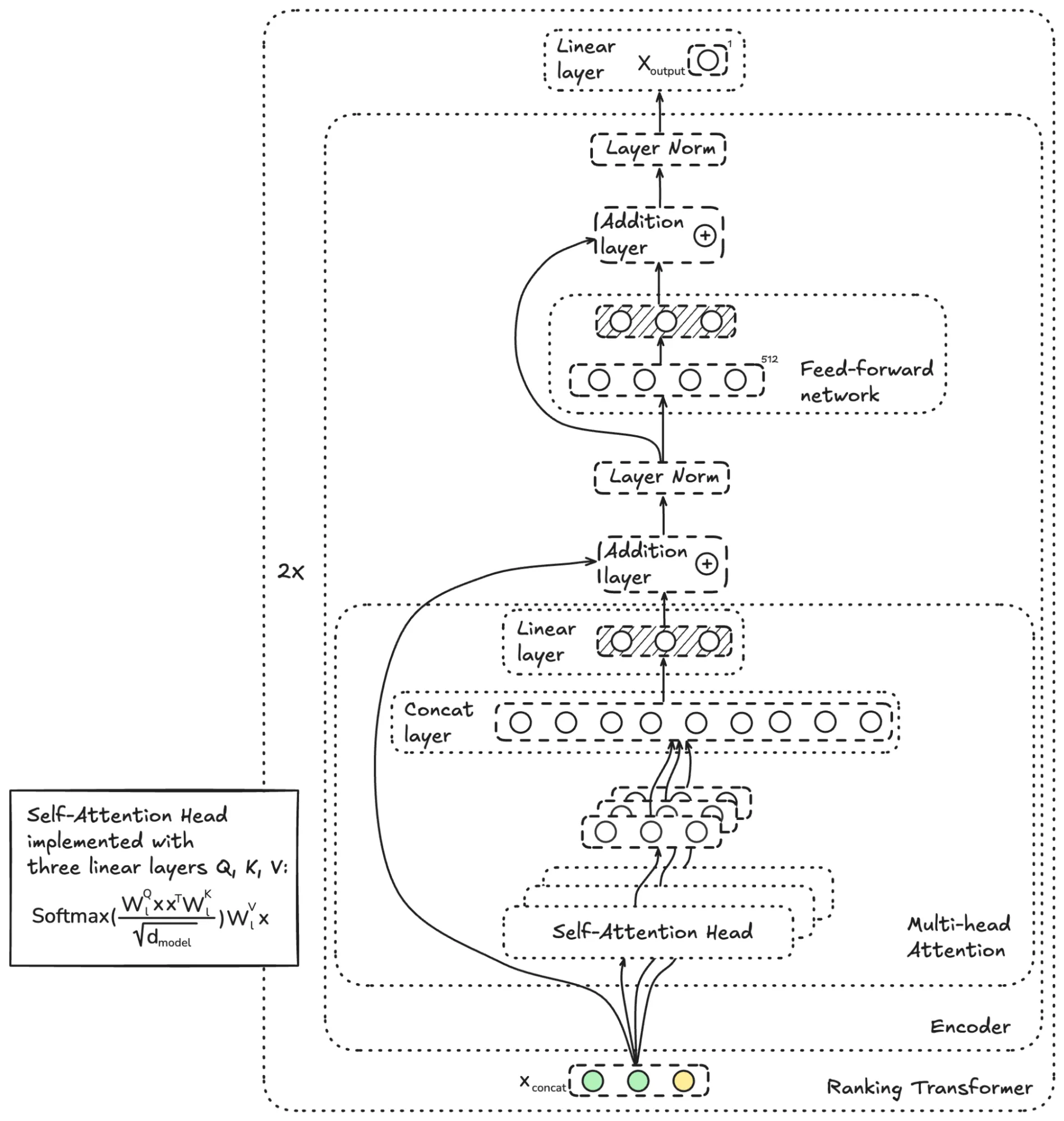

The outputs of the deep and cross networks are then concatenated and fed into the ranking transformer, the final major component of our architecture and one inspired by the transformers used in modern language models. Our ranking transformer consists of two encoder blocks, each using a three-headed self-attention mechanism followed by a feed-forward network, with skip connections and layer normalization in between.

The key role of the ranking transformer is to apply self-attention across all activities in a search query. In practical terms, this means the model considers the entire set of activities within a single ranking request, allowing it to make list-wise ranking decisions based on the context of the entire query, not just each activity in isolation. Unlike language models, we don’t use positional encoding here since the pre-ranking order of activities doesn’t carry meaning for us. What matters is how the model ranks the activities in its final output, focusing entirely on delivering the best possible relevance for each ranking request.
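The sketch below illustrates why leaving out positional encoding makes the input order irrelevant. It is a deliberately stripped-down, single-head self-attention with identity projections; the learned query, key, and value projections, the three heads, the feed-forward network, skip connections, and layer normalization described above are all omitted, and the activity vectors are made up.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(items: list[list[float]]) -> list[list[float]]:
    """Single-head scaled dot-product self-attention with identity projections."""
    dim = len(items[0])
    outputs = []
    for query in items:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim) for key in items
        ]
        weights = softmax(scores)
        # Each output is a weighted mix of ALL activities in the request.
        outputs.append(
            [sum(w * value[d] for w, value in zip(weights, items)) for d in range(dim)]
        )
    return outputs


activities = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
original = self_attention(activities)
shuffled = self_attention(activities[::-1])

# Reversing the input order only reverses the output order.
order_independent = all(
    math.isclose(a, b, abs_tol=1e-9)
    for row_a, row_b in zip(original[::-1], shuffled)
    for a, b in zip(row_a, row_b)
)
```

Each activity's output depends on the full set of activities in the request but not on where they sit in the input list, which matches the list-wise behavior described above.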

Key takeaways and conclusion

Our journey from trees to transformers has been full of challenges, learning, and growth. Along the way, we picked up some valuable lessons that shaped both our process and our results.

Start simple and test often

Reusing as much as possible from our XGBoost setup helped us move quickly and avoid reinventing the wheel. By making incremental changes and validating them both offline and in production, we conducted over 50 iterations and 10 A/B tests within a six-month period. This rapid feedback loop was crucial for learning what worked and what didn’t.

Break big goals into milestones

Dividing the project into clear phases – technical parity, business parity, and finally business wins – kept us focused and motivated. Celebrating each milestone not only boosted team morale but also made it easier to communicate progress to stakeholders.

Stay agile and expect surprises

Unexpected issues such as increased user bounce rates and infrastructure challenges (hello, Docker image sizes!) forced us to adapt quickly. Being flexible and ready to pivot based on real-world feedback helped us turn setbacks into opportunities for improvement.

Most importantly, none of this would have been possible without the collaborative efforts of our team: Nikhil, Duy, Galina, Olivia, Hsin-Ting, Mihail, and Theodore. By working together and following a disciplined, iterative approach, we transformed our ranking system from tree-based models to deep learning, delivering significant improvements in both user relevance and business impact for GetYourGuide and our supplier partners.

We’re excited about what’s next! This new foundation positions us to continue delivering even more relevant and personalized travel experiences to our users, while driving growth for GetYourGuide’s suppliers all over the world.

Want to be a part of it? Find your dream job on our open roles page, and find out more about how our tech team works to revolutionize the travel experience industry here.
