From Text to Context: How We Introduced a Modern Hybrid Search at GetYourGuide

Discover how GetYourGuide revolutionized its search functionality by introducing a modern hybrid search system. Join our engineering experts, Alexander Butenko, Ansgar Gruene, Dharin Shah, and Ryan Sequeira, as they share insights on transitioning from a traditional lexical search to a cutting-edge hybrid search. Learn about the implementation of a multilingual model and optimization strategies that enhanced search accuracy and user experience.

Key takeaways:

Alexander Butenko, Ansgar Gruene, Dharin Shah, and Ryan Sequeira from our team share their experience and lessons learned from introducing a modern hybrid search at GetYourGuide. In this blog, they explain their structured approach, including the implementation of a multilingual model, and optimization strategies that ensured a smooth and effective transition.

{{divider}}

Introduction to full-text search at GetYourGuide

At GetYourGuide, we offer a dynamic marketplace for customers to book global travel experiences, with a catalog of over 150,000 activities in 35+ languages. Our platform enables customers to explore location-based or interest-based pages as well as providing an enhanced, full-text search option.

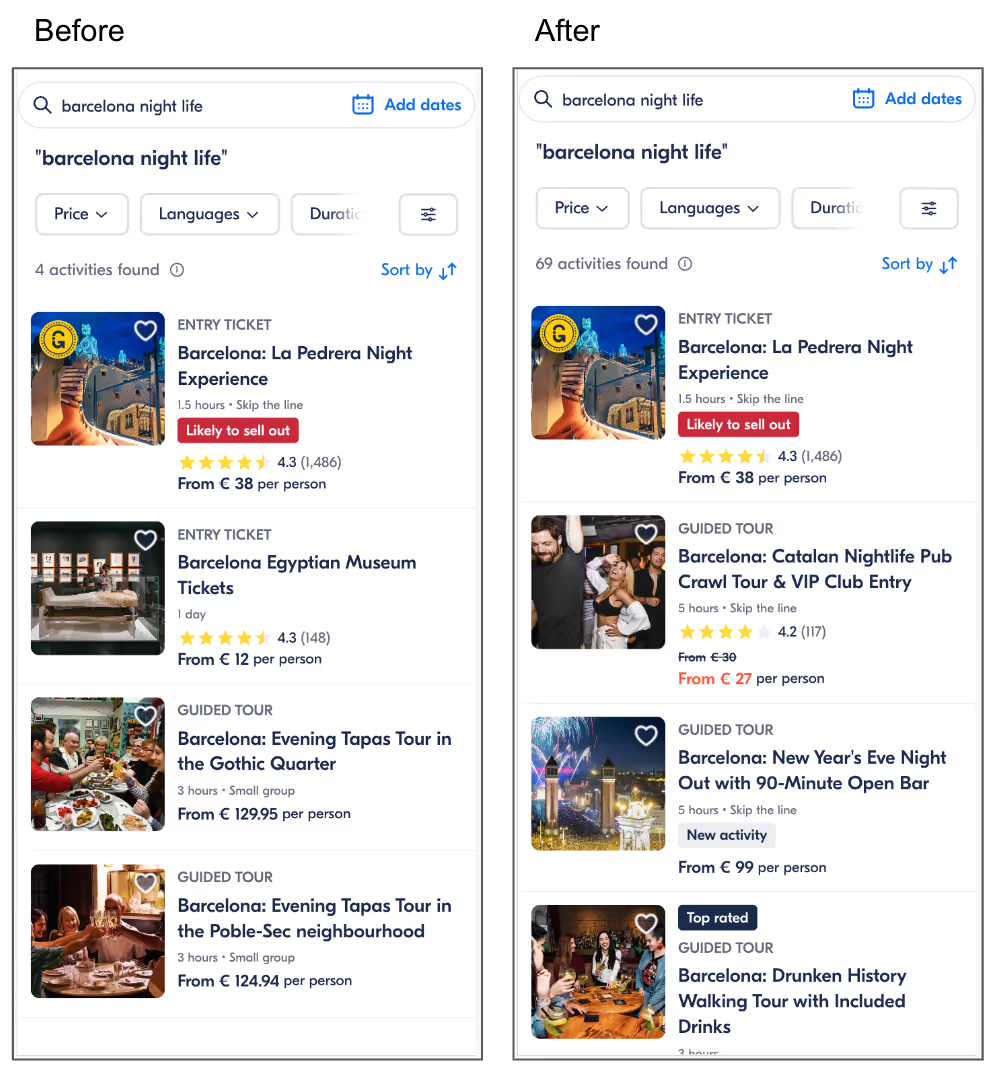

The full-text search bar allows customers to input their query to show the activities that match best (Ex - “Day trips from London”). For quite some time GetYourGuide applied a pure lexical search which looks at exact word matches between the query and the product. However, in the past year, we have leveraged the state-of-the-art approach, hybrid search which has improved the overall discovery experience for users. You can see some of the improvements in the results below:

Our previous lexical search approach, while effective for simple queries, struggled with nuanced searches. For instance, it was challenging to match less direct or descriptive terms (“family-friendly Paris activities” with "Harry Potter tours" or “nightlife”).

Before diving into details of the implementation of this hybrid search, let us first briefly describe the different search concepts to set up the basics.

Search Concepts - Key Approaches

What are the main approaches for text retrieval, i.e. finding matching documents (texts) for a query string? In the context of GetYourGuide, "search" refers to finding relevant products that match a user’s query.

Lexical Search

Lexical search or keyword search is good in detecting all products whose descriptions contain important words of the query. For example, it effectively detects products for the query “Moulin Rouge ticket”.

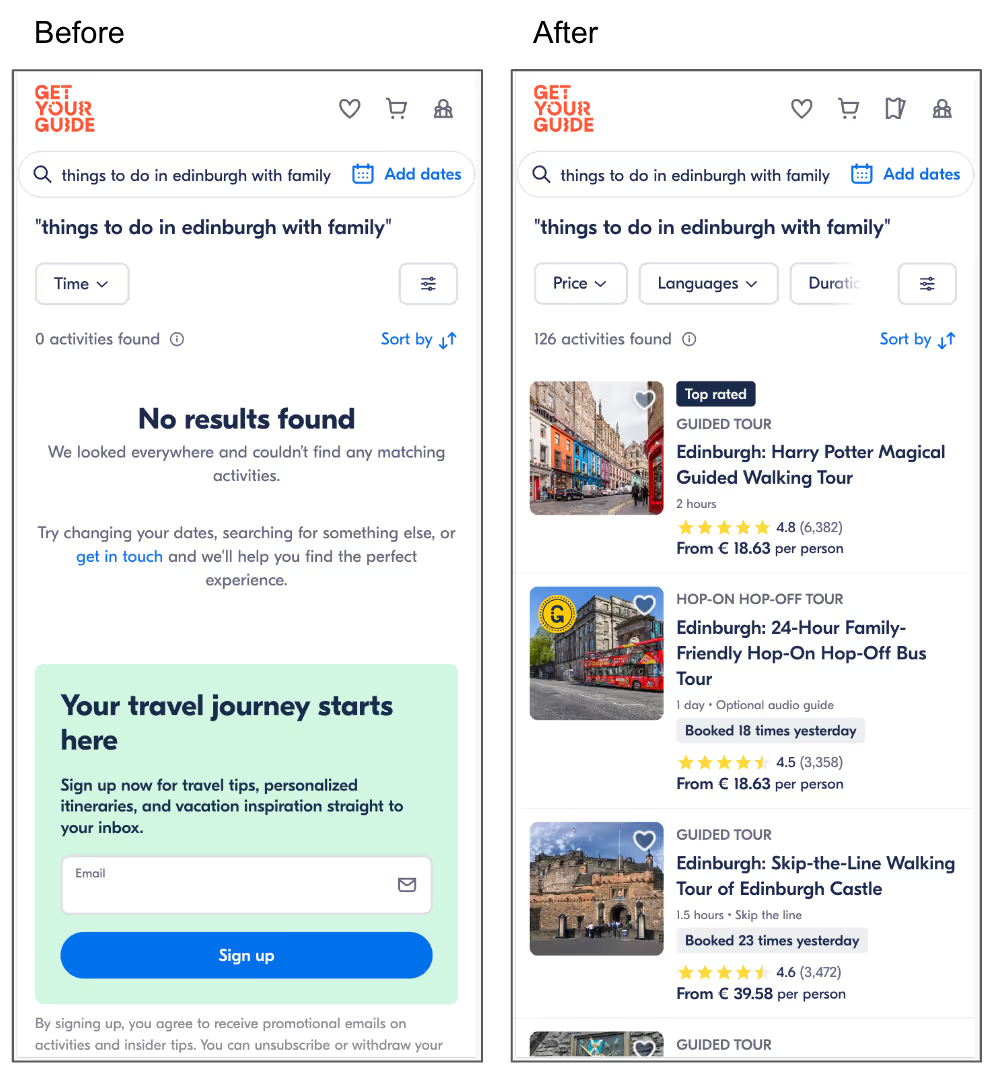

Such search methods are based on metrics that consider frequencies. How often do the words from the query show up in the document? (The more, the better.) And what share of all documents contain the word? (The less, the better, because then the word occurrence is a more decisive criterion.) This is the basic idea of the metric TF-IDF, term frequency - inverse document frequency, as shown in Fig. 1 (details on Wikipedia). A better version, called BM25, short for Best Match 25, additionally considers the size of the documents (details on Wikipedia). And BM25 is the default metric used for keyword-based retrieval in search engines like ElasticSearch / OpenSearch. Such search engines are very optimized for lexical search and use an inverted index to achieve fast searches.

Vector Search

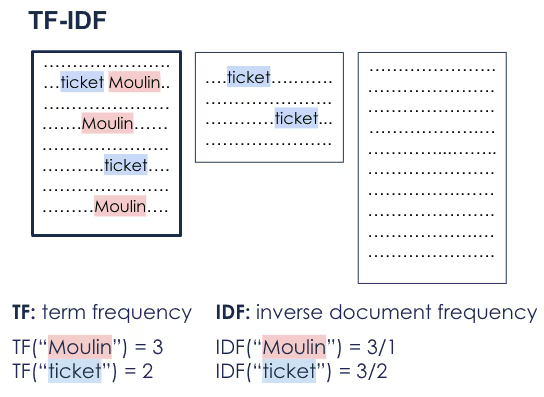

The more advanced and recent approach, known as vector search or semantic search, excels at finding contextual matches even if the query does not contain exact keyword matches. For example, vector search can recognize relevant products for a query like “dance show in Paris in a red windmill”.

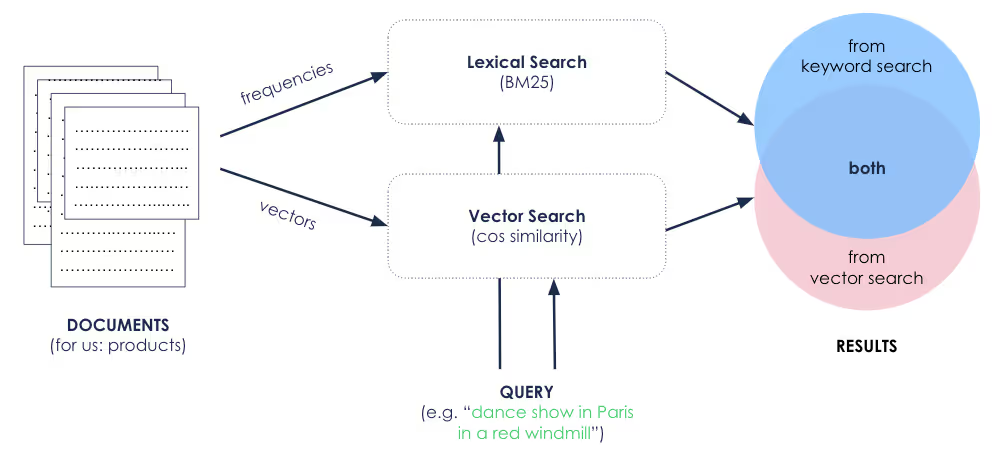

Vector search uses a machine learning model to convert the search query and the documents to semantic vectors. It is also known as Semantic Search. The model is trained in such a way that the vectors for documents that fit to the query are close to the query vector. Remember that for online marketplaces like GetYourGuide, the documents are products. The whole idea is shown in Fig. 2.

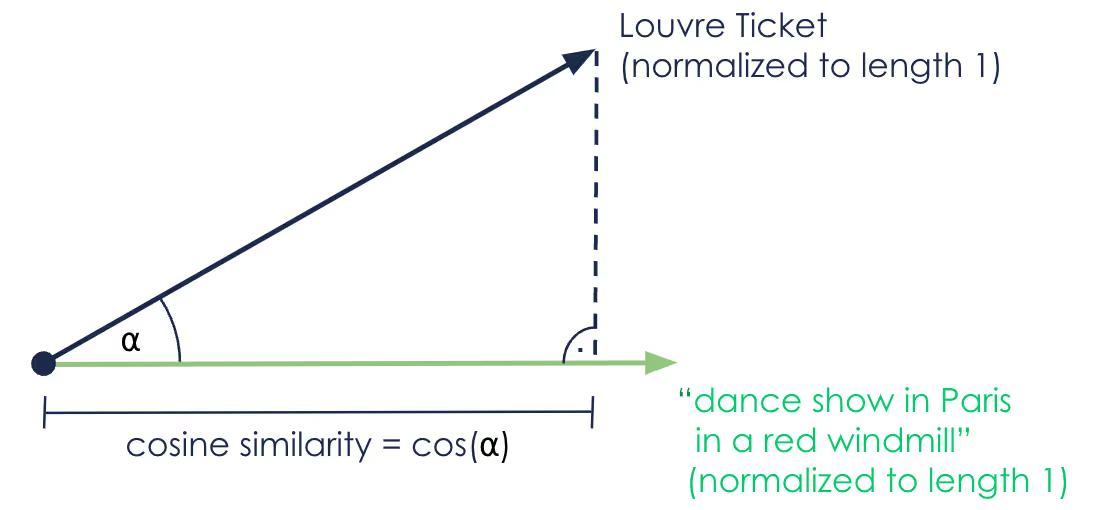

The most used metric for semantic search is cosine similarity (as shown in Fig.3) Vector search engines frequently use Hierarchical Navigable Small World Graphs (HNSW) to locate the nearest document vectors quickly, enhancing the efficiency and relevance of search results.

Hybrid Search

Hybrid search is a cutting edge approach that combines the advantages of lexical search and semantic search, creating a more accurate and relevant search experience. It is no surprise that it is therefore, the currently recommended setup for search, see for example this nice talk from 2023.

There are several methods to combine lexical and semantic search.

Hierarchical Hybrid Search

A simple way that has been applied successfully is to apply them in a hierarchy.

One common method applies lexical search first, due to its speed on big corpora of many documents. Once the top results are retrieved, the vector similarities from semantic search are used to rerank them. You can see more about this in this blog post. Because semantic search is getting faster, this might not be needed a lot anymore.

Another hierarchical approach is to apply semantic search only to more complex queries, where traditional lexical search falls short. This approach was applied successfully in this presentation from 2023, for example. And we also do it in some way here, by sticking to a simple word match for pure location queries like “Paris”.

Hybrid Search by Combining the Scores

With the improved support of vectors by modern search engines, it is now possible to run vector search quickly on a big corpus of documents. This has enabled a powerful approach to hybrid search by running both lexical and semantic search in parallel and combining the scores from each type to deliver more relevant results (see Fig 4.).

In our application, scores from both keyword search and vector search are retrieved for most documents (products), allowing a combined ranking without gaps in the results. If you are not in this fortunate situation, you can assign a default score (e.g. 0) wherever a score is missing on one side. The bigger challenge for us was how to combine the scores to achieve a final ranked list of results. Literature mentions different options:

Linear combination: A simple approach is to combine the input scores linearly with a formula like

Note that for product search it is helpful to also include a product score which measures its general popularity. Another important decision is the cut-off threshold for relevant results. While this approach is easy, of course, a linear combination can be far from optimal depending on the distributions of the input scores.

Reciprocal Rank Fusion (RRF): This is another simple method to combine the scores. It prevents impact of the different score distributions by only looking at the resulting positions in the rankings, for example by the formula

Ranking results based on their positions rather than absolute scores can help mitigate disparities in score distributions but can potentially overlook additional and useful score details.

Learn To Rank (LTR): The most involved way that often achieves the best results is to train and apply another machine learning model for this step. Such models are called learn-to-rank models and are often supported by the search engines.

Following GetYourGuide’s philosophy, from the options presented above we opted for a simple approach first, namely to combine the scores via a linear formula. We also applied a very simple form of hierarchical hybrid search by only applying it to complex enough queries, leaving out pure location-name queries where our existing system already performed well.

With the theoretical foundation in place,, lets move on to the more practical aspects of the journey, starting with the model evaluation and training.

Model Decision and Training

To implement our hybrid search model, we needed to select and train two models. The obvious one is the semantic search model that converts the queries and product texts into vectors. The second, a smaller linear regression model, was used to determine the constants for combining the scores.

For this we had to decide which semantic search model to use and how to evaluate and train both models. As a starting point for good, available, pre-trained semantic search models it’s a good idea to look at leaderboards. We used the retrieval score of the MTEB leaderboard.

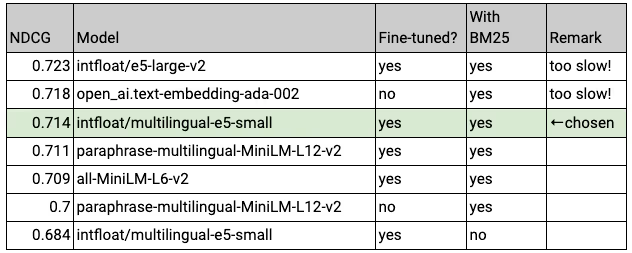

Evaluation Data Workaround: Ideally, we would use human-tagged data specific to our search context, but in this instance it would have been too time consuming. We would have also liked to use performance data from our existing search system. However, this was concentrated on pure location searches like “Paris” or “Moulin Rouge”. Hence, the data could not be used to train for normal queries like “family-friendly activities in Paris”. As a work around, we decided to use data from our Paid Search traffic from Google. The keywords we are bidding on were used as a search query. And the position-adjusted click-through rate of the products on the landing pages were used as matching scores. We divided this data into training and test data and started to evaluate options via NDCG (details on Wikipedia) on the test data. Some selected results are shown in Table 1.

Some of the best models from the leaderboard at that time were also performing best on our data. However, they were too slow for us to perform the query to vector transformation live (see row 1). Interestingly, the Open-AI vectors without any fine-tuning (row 2) performed quite well but they would need an API call live. Ultimately, we chose the intfloat/multilingual-e5-small model (row 3), as it provided a balance of performance and speed. This model was fine-tuned on our data and combined with BM25 scores from our lexical search system. Note that the comparison to the last row shows that the BM25 scores had an important benefit. The comparison of rows 4 and 6 shows that fine-tuning the semantic search models helped. And we included the model “all-MiniLM-L6-v2” in row 5 because it is a well-known small standard model for semantic search. Table 1 is based on English data but we saw very similar results on multilingual data.

In addition to the results shown in the table, we also saw that including the global product score, which was already mentioned in the formula for the linear combination of scores, had a decisive benefit. And in the fine-tuning of the SentenceTransformer model, using MultipleNegativesRankingLoss worked slightly better than CosineSimilarityLoss.

Now, onto the engineering steps taken to deploy the system into production!

Engineering the Opportunity

As we moved into the system design stage, understanding the core requirements, constraints and scale of the opportunity was crucial. . Key requirements for the system were:

- Allowing iterative testing of sets of models quickly and iteratively.

- Generating embeddings for our activity data through an event-driven system using Kafka.

- Providing an API that generates vectors for the user query in real-time.

- Combining BM25 scores and cosine similarity scores (between query vectors and activity embeddings) using either linear combination, RRF, and/or a better LTR model on top for unstructured query ranking.

Another very important non-functional requirement was ensuring the speed and efficiency of our hybrid search to provide optimal user experience.

On the behavioral and project management side, we had a simple requirement: To leverage the focus group we have built and make quick decisions to iterate and learn as we go.

Architecture

In the execution stage, our focus was on designing an efficient and flexible hybrid search architecture. Key priorities included creating a system that could respond to requests quickly, give us flexibility (models, training, ranking) enables fast iterations, and is simple to manage operationally.

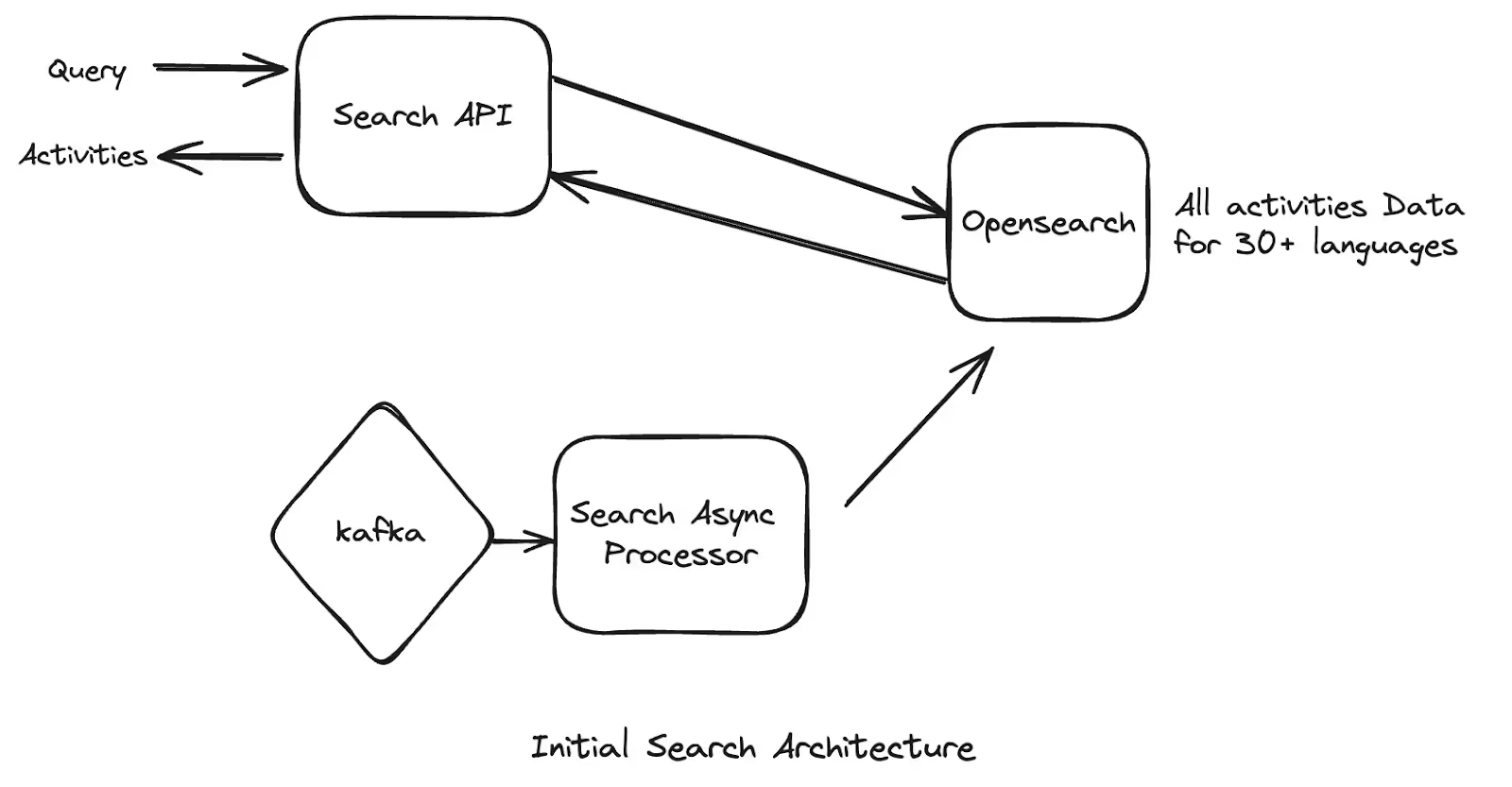

Before our improvement, the search system used only pure lexical bm25 matches to produce the results. The Search API accepts a text query and uses OpenSearch to produce the results using the `best fields` match algorithm. There was and still is a special process concerning location information; if the query including a location, like “things to do in Edinburgh with family”, we detect the location name “Edinburgh”, remove it from the query, and run the remaining query “things to do in with family” only on the products in Edinburgh.

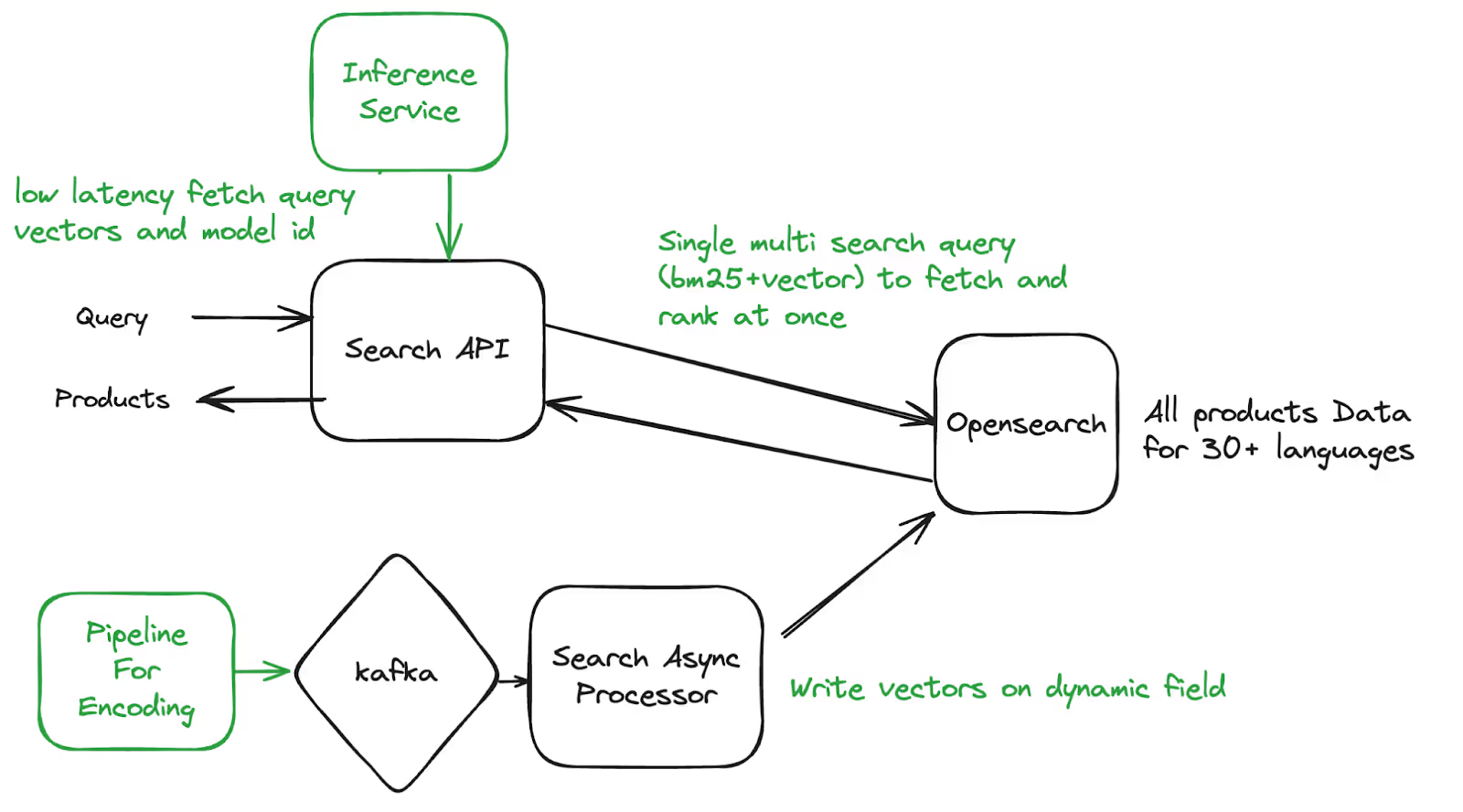

High Level New Architecture

- In our updated architecture, we combine lexical and vector search, and we designed the index schema to embed both parts. This enables us to retrieve the combined results in a single OpenSearch query.

- Using dynamic templates, we are able to dynamically generate vector fields for new models and/or new parameters within the OpenSearch index.We can reference them later during the querying phase to do AB testing between models, and different parameters without manual intervention.

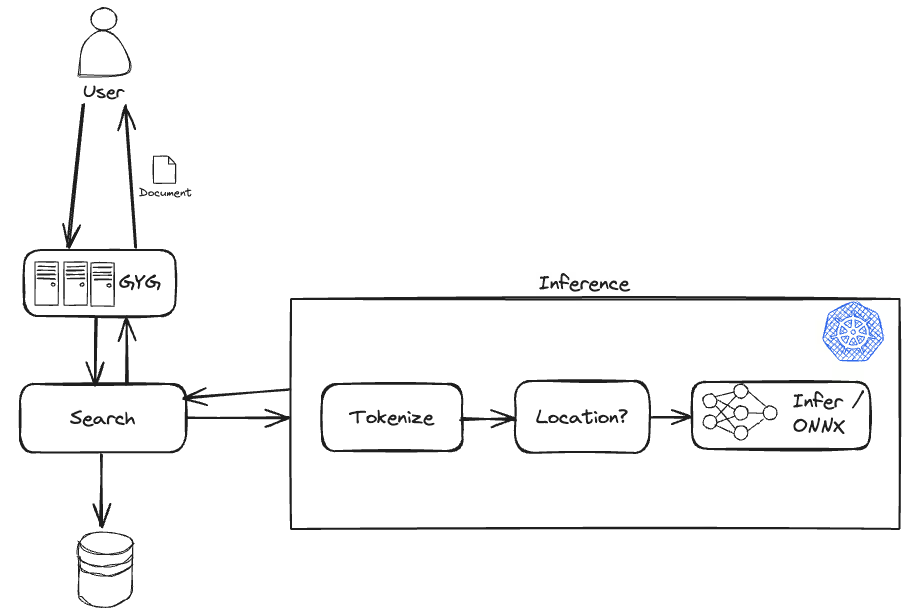

Inference Service

On the inference side, we evaluated a handful of setups but ultimately decided to go with a separate Python service built on top of FastAPI, exposing an endpoint for query embeddings. The main benefits of having a separate service include ease of scaling, independent experimentation, and ample room for any potential CPU-to-GPU migration of the model we’re serving. That being said, the model serving part is currently done on CPU cores. As low latency was one of the parameters we wanted to optimize for, given the CPU-only constraint, we conducted extensive load testing experiments across different hardware and models before making the decision on the model type and size. We used this data to drive the decision.

In this series of experiments, we also tested the ONNX vs. non-ONNX setup and ended up choosing the former based on the performance metrics we observed, such as CPU utilization and latency at p95.

Experiment Setup & Results

We conducted a total of three A/B experiments before perfecting the search experience using hybrid search. Our hypothesis was that hybrid search would yield more search results and result in fewer empty search pages. Here’s what we learned on our journey and how each experiment improved performance, half of the GYG users experiencing the old search and the other half using the new hybrid search:

- The main objective of the first experiment was to test the setup online and validate our hypothesis. Throughout the course of the experiment, the latency remained within an acceptable range. However, we found that hybrid search didn’t perform well for location queries, such as “Berlin” because it added additional conditions where the existing exact-text match filter was working well.

- In the second iteration, we refined our approach by excluding pure location queries from hybrid search. We saw a significant drop in the number of instances with empty search results, but broader queries without location names, such as “jet ski” still posed challenges with our thresholds.

- In the third iteration, we addressed this issue by implementing multiple thresholds and retry logic that switched from stricter to more lenient thresholds when initial semantic search returned no results. We saw a further drop in the number of empty search result pages.

As a result of these cumulative improvements, we achieved a +3.4% increase in search result relevance (statistically significant) and a +2.6% increase in revenue (statistically significant). Since then, hybrid search has been in production on GetYourGuide.

Learnings

Our hybrid search project provided valuable insights into search usage and its impact on revenue:

- Combining cosine similarity and BM25 scores leveraged the strengths of both methods. Specific searches like “Moulin Rouge ticket” accurately returned relevant results, while open-ended queries like “things to do in Edinburgh with family” matched a variety of related activities like Harry Potter experiences and family friendly Hop-On-Hop-off bus tours.

- Implementing a multilingual model for semantic embeddings allowed us to test the semantic search service across multiple languages without training separate models for each locale. This greatly simplified the fine-tuning, offline evaluation, and production deployment processes.

- Previously, our text matching approach relied on a language-dependent index, often resulting in no search results when users searched in a different language than the platform's setting. . The multilingual model solved this issue without additional engineering effort.

- With latency performing better than we expected, we were able to introduce a retry logic with more lenient thresholds which contributed to the increase in revenue.

- Rather than aiming for a perfect solution immediately, we opted for a test-and-iterate strategy, which allowed us to learn quickly and optimize over time.Our progress was also due to an effective project team with diverse skills and decision making power, facilitating fast adaptations.

Future Scope

Transitioning from free-text search to hybrid search has significantly enhanced the user experience on our platform, creating a strong foundation for continued improvements. Looking ahead, we are focusing on several initiatives to boost search usage on our platform. On the technical side, our next step involves establishing a feedback loop to continuously refine our semantic search model by using real-world performance data from search results. This iterative process will help eliminate previous workarounds and ensure that our search results remain accurate and aligned with user expectations. We aim to fine -tune cut-off decisions, addressing instances where too many or too few results are shown. Building on the capabilities of semantic search, we plan to experiment with incorporating location-based and interest-based suggestions on our search page. This approach aims to provide users with a more personalized and engaging search experience, ultimately driving higher engagement and satisfaction.

{{divider}}

This blog is adapted from our talks at EuroPython 2024 and Berlin Buzzwords 2024.

.JPG)

.jpg)