From Batch to Real-time: The Incremental MLOps Journey at GetYourGuide

A journey from batch to real-time machine learning: challenges, strategies, and innovations in activity ranking. Dive into Theodore Meynard's, Data Science Manager at GetYourGuide, insight on enhancing travel experiences

Key takeaways:

Theodore Meynard, Data Science Manager at GetYourGuide in Berlin, discusses transitioning the activity ranking team from batch to real-time ranking, highlighting challenges, strategies, and innovations.

{{divider}}

Transitioning to Real-time Machine Learning

In this article, we will outline our transition from a traditional batch-processing system to real-time machine learning. We will dive into the challenges, strategies, and innovations that marked our transition. Dive in to discover how we enhanced our activity ranking system, ensuring our customers are always matched with the most unforgettable travel experiences.

Why We Needed Real-time ML

Previously, our system relied on daily batch scoring of every activity in our inventory, with our search service then ranking the activities based on these scores. While this approach was simple to maintain, it had significant drawbacks. It was challenging to personalize the ranking, and it required dependency on other teams when we wanted to test a new scoring or a new logic. Every change ended up taking multiple weeks or even months to be implemented.

To address these issues, we envisioned ML models that could perform real-time inferences using dynamic signals living in a service owned by the team. But to mitigate risks, we didn't jump straight into developing this service containing an ML model. Instead, we broke down the migration into multiple incremental steps, each adding value and allowing us to learn along the way.

A Step-by-Step Approach to Real-time Ranking

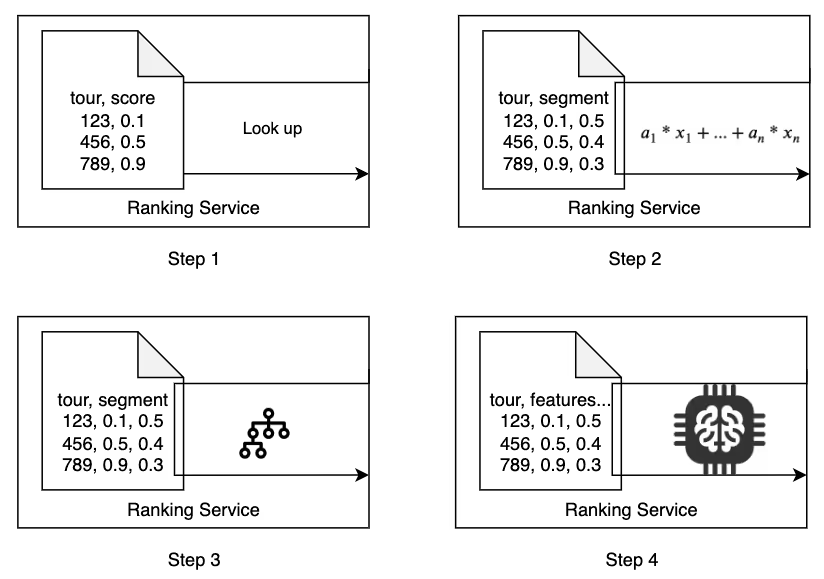

Step 1

We migrated our ranking logic to its own service. This service, initially just doing a lookup on the score and ordering based on that, proved the feasibility of having such a service and increased our speed of launching new improvements experiments. We were able to launch in days instead of weeks.

Step 2

We began to segment our ranking depending on user signals, such as the type of platform or the language used on our website. This step significantly improved our ranking (proven in an A/B test), reinforcing our hypothesis that ranking needed to be contextualized and giving us direction of which segments were meaningful to incorporate.

Step 3

We trained a model to rank activities in real time. We reused the segment scores we had created in the previous step to find the optimal segment combination based on past data. This step stabilized our model in production, helping us learn how to operate an ML model in real time and generate data to train it.

Step 4

We improved the model by adding relevant features from users and the activity for ranking. This combined with fine-tuning the model, led to a significant improvement in our ranking.

Key Design Decisions: Balancing Speed with Reliability

Operating such a service brought its own set of challenges. As our website and apps depend heavily on the ranking on most of our pages, high availability is crucial. We also had to maintain low latency and high development velocity while ensuring explicit ownership, full workflow in the continuous integration (CI), and end-to-end (E2E) tests with real data.

To overcome these challenges, we made key design decisions:

1. Explicit Ownership

We used MLflow, an open-source library to manage ML model lifecycles, to clearly define the interface between our Data Scientists and MLOps engineers.

Data Scientist's Role:

- Feature Generation: Our Data Scientists are at the forefront, focusing on generating the necessary features and datasets.

- Pre & Post Processing: They utilize the sklearn pipeline to define essential pre-processing and post-processing steps, ensuring the data is primed for model training.

- Model Training & Storage: Once the data is ready, they train the model and subsequently store it for future use.

MLOps Engineer's Role:

- Model Loading: Our MLOps engineers take over from here, loading the trained model from storage.

- Feature Provision for Inference: They are responsible for supplying the necessary features for real-time inference, ensuring the model has all it needs to make accurate predictions.

- Service Health Monitoring: Beyond just model deployment, our MLOps engineers continuously monitor the health of the service, ensuring its robustness and reliability over time.

2. Real Data for Testing

Validating our ML pipeline was a challenge we grappled with for a while. The intricate interplay between code and data in ML pipelines makes it difficult to separate and test them in isolation. Initially, we considered using dummy data for validation, but this approach had several drawbacks:

- Complexity: Creating dummy data for ML pipelines is cumbersome due to the need for multiple data sources.

- Maintenance: Hard-coded values or data generation methods are tough to maintain and update.

- Inconsistency: Even with dummy data, there's a risk of discrepancies between test environments and production. A test might pass, but the pipeline could still malfunction in a live setting.

- Dynamic Nature of ML Datasets: Our datasets are ever-evolving. We frequently add or remove features and data sources, making static testing methods less effective.

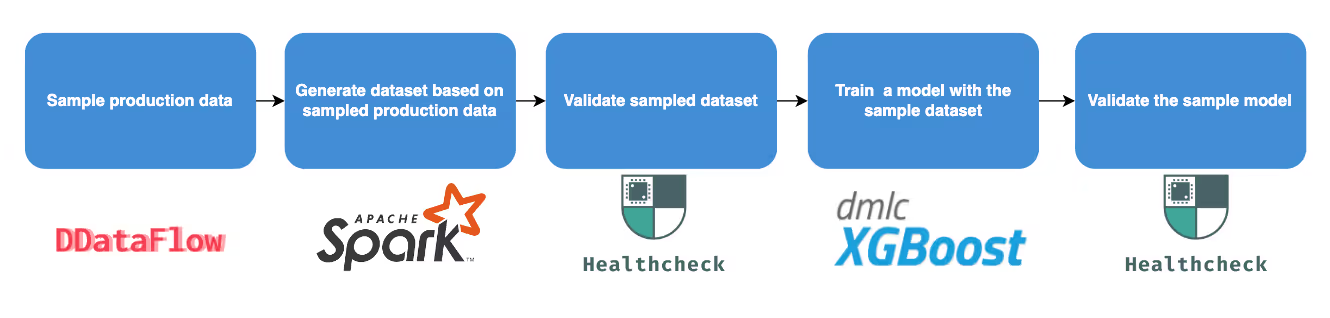

Given these challenges, we concluded that the most reliable way to test our pipeline was by using production data. However, we needed to sample this data to expedite the testing process.

To allow us to do such a thing, we developed and open-sourced DDataflow, a tool designed to simplify data sampling for end-to-end tests within the Continuous Integration (CI) framework. If you're curious, explore DDataflow on pypi.

To further ensure the reliability of our data and models, we introduced health checks or expectations.

These checks serve as a validation mechanism:

Dataset Expectations: For instance, we expect the dataset to maintain the share of booked activity within a certain range.

Model Expectations: Our models should consistently achieve an accuracy above a specified threshold.

These health checks are run as part of our end-to-end testing, combined with the data sampling provided by DDataflow. Given the increased noise from sampling, we've set more lenient requirements for these checks.

3. Automate Workflow

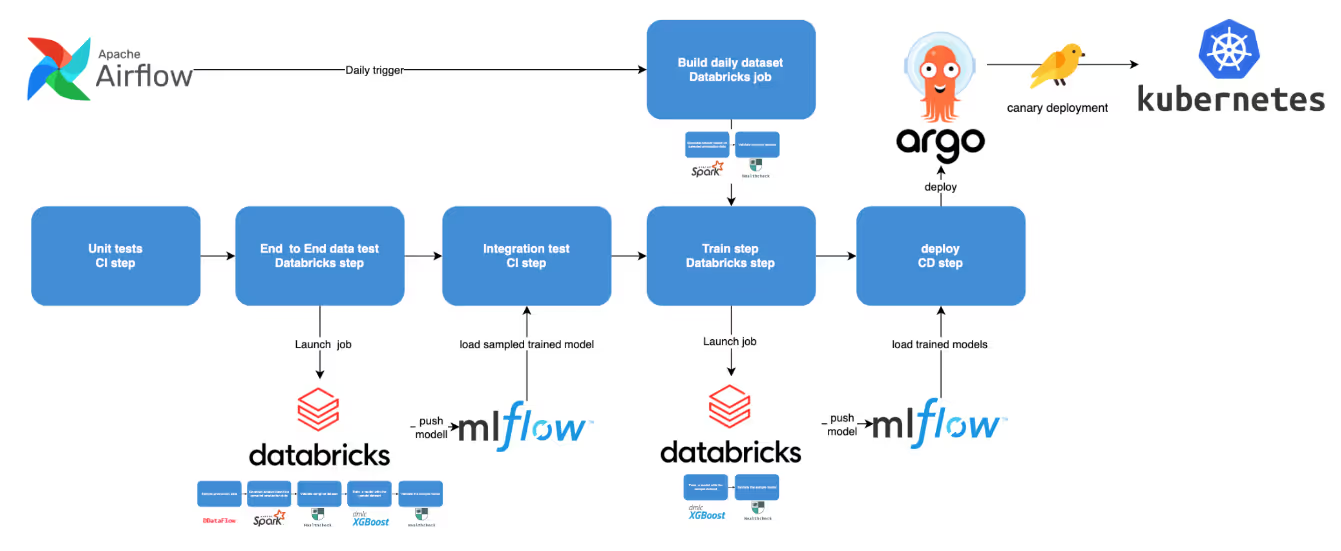

We automated every step of our testing and deployment process in our CI. This automation helped remove room for manual error and simplify development speed.

{{quote}}

Here's a step-by-step breakdown of our automated workflow:

- Unit Testing: Every code change undergoes rigorous unit testing to ensure individual components function as expected.

- End-to-end Data Testing: We run comprehensive end-to-end tests on sampled data, incorporating health checks to validate both the data and the models.

- Integration Testing with MLflow: After passing the initial tests, an ML model is pushed to MLflow. This model is then used for integration tests to ensure seamless interaction with other systems in the web service.

- Production Model Training: Every merge to our master branch triggers a training job, resulting in the creation of a new model. This means that a fresh model is generated with every code update, ensuring our system is always up-to-date. When the change is unrelated to the model, we can skip the change; see this blog post for the overall architecture

- Model Health Checks: Before deploying, we validate the newly trained model using our health check building blocks. This step ensures the model's accuracy and reliability.

- Service Deployment: The validated model is then loaded, wrapped in a Docker container, and deployed using Argo CD, our chosen deployment orchestration tool.

- Safety Nets: To further ensure reliability, we employ a canary deployment strategy. This allows us to test the new model in a real-world environment while ensuring it meets our latency requirements. If any issues arise, the Docker packaging facilitates easy rollbacks to previous stable versions, just like any other service at GYG.

Our automation extends to daily operations with airflow ensuring that our machine learning models are always up-to-date and reliable:

- Dataset Generation: Every day, we generate a fresh dataset that captures the most recent data, ensuring our models are trained on timely and relevant information.

- Dataset Validation: Before any further steps, we validate this dataset using our established health check protocols. This proactive approach has been invaluable, shielding us from potential upstream incidents that could disrupt our operations.

- Model Training: With the validated dataset in hand, we proceed to train a new model. This ensures that our predictions and inferences are based on the latest data patterns and trends.

- Model Validation: Post-training, the model undergoes rigorous validation. We ensure its accuracy, reliability, and readiness for deployment.

- Deployment: Once validated, the model is seamlessly deployed, replacing the previous version. This ensures that our platform always benefits from the most recent and optimized machine learning insights.

By automating every step, we've significantly reduced the potential for manual errors and streamlined our development process. This not only accelerates our development speed but also ensures consistent quality and reliability across all our ML operations.

Looking ahead

Our journey was full of surprises and spanned multiple quarters, with the collective effort of our dedicated team members of the activity ranking team and also everyone who helped build our Machine learning platform.

The key to our success was the incremental upgrade of our data product, explicit ownership, the use of production data for testing, and the automation of our workflows. As we look ahead, we're excited about the improvements we've made in our ranking system. We're currently working on personalization and integrating additional relevant signals to further enhance our ranking capabilities.

If you have any questions or are interested in joining our dynamic team, check out our open roles or learn about the growth path for engineers at GetYourGuide. We're hiring!

In our pursuit of efficiency and reliability, we've automated every facet of our testing and deployment process within our Continuous Integration (CI) framework.

.jpg)