How to Split a Monolith into Services

I’m Tobias, a principal engineer at GetYourGuide. Currently, I drive the architecture of our marketplace.

.avif)

Key takeaways:

Tobias Rein, co-founder and principal engineer, shares the playbook on how we’re breaking our monolithic architecture into services. Having seen and built the GetYourGuide platform from our early days, he’s our expert on systems and is the driving force behind this project.

{{Divider}}

I’m Tobias, a principal engineer at GetYourGuide. Currently, I drive the architecture of our marketplace. I love to be hands-on and ship things, so I’m still closely involved in some of our feature development. We’ve been operating GetYourGuide as an online marketplace for travel experiences since 2009. During these last ten years of exponential growth, the heart of our codebase was a single, monolithic application.

As the company continues to grow, and more than 100 engineers contribute to the ongoing success of our platform, a monolithic application brings along operational challenges.

In order to keep the velocity of our engineering teams high, we decided to go for a service-oriented architecture, in which every mission team owns one or multiple services. For complete decoupling, services must not share databases.

You might also be interested in: How we migrated a microservice out of our monolith

How to split a monolith into services: 5 steps to breaking apart a monolithic application

Challenges of breaking a monolith

To achieve our target architecture, we need to split the existing monolith. This is a challenging task for several reasons:

- The domains in the monolithic application are not strictly divided as we were willing to take technical debt in the early days of GetYourGuide. This means, for example, that we have database queries joining tables from different domains.

- The data migration must happen while the system is running. Any downtime on getyourguide.com is not acceptable.

- We want to migrate in an iterative way to follow our agile principles and minimize risk.

You might also be interested in: Data pipeline execution and monitoring

Prerequisites to splitting the monolith

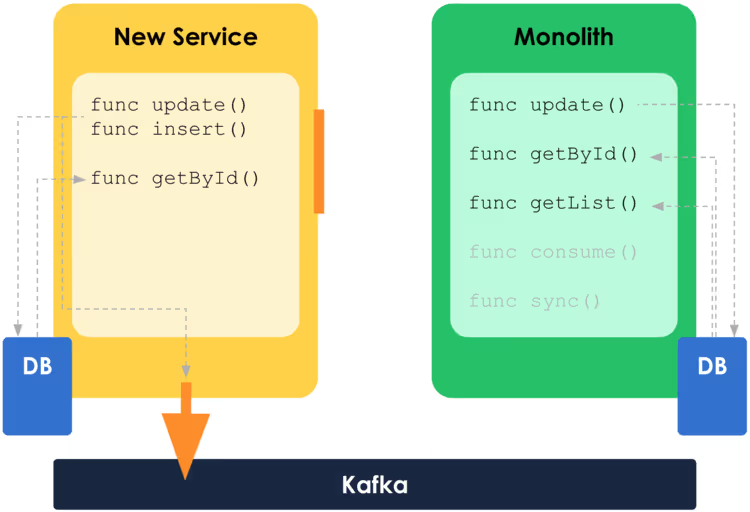

Before we start with the migration of one domain, we need to bootstrap a new service. For us, this means cloning our docker template, setting up the database, and beginning to write the application code. At a minimum, the service needs to provide the following functionality:

- Endpoint to insert, update, and delete data. Those endpoints do data validation and persist the data in the new database owned by the service (for simplification, the figure only shows the update() and insert() endpoints).

- An endpoint to retrieve data (in the figure called getById())

- A change stream. Whenever an object is changed, e.g. through the update endpoint, the service emits a change event to a given Kafka topic.

Splitting the monolith

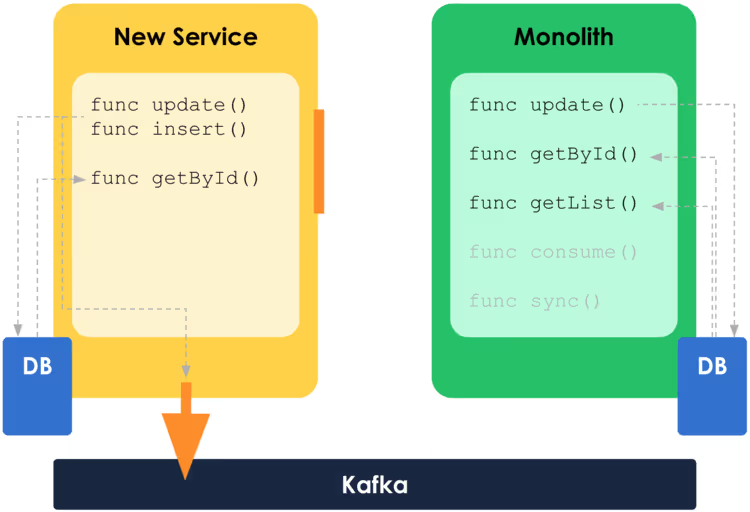

Step 1: Write twice

Once the service is ready, the first step of the migration is to let the monolith write to the new service in addition to the updates to its own database. This means we are writing two data sources for every change of the data.

As the new database starts empty, only the inserts will be successful. Updates to an object that does not exist are failing, and the service responds with a 404 HTTP status code. During this first step, we are silently ignoring these exceptions in the monolith.

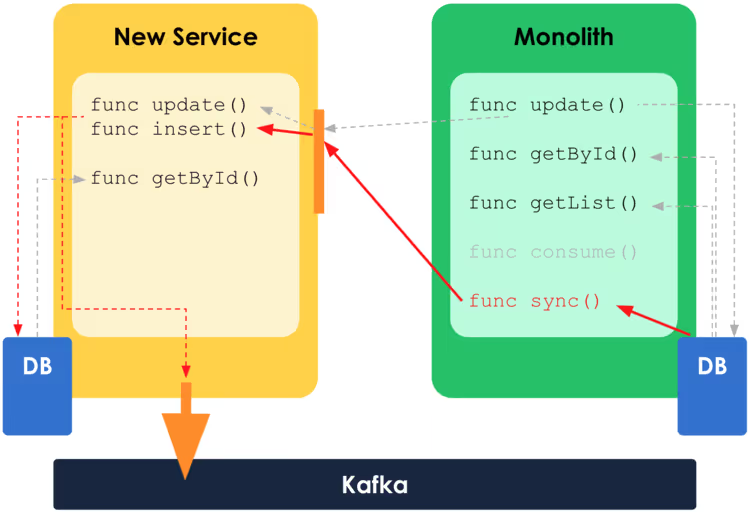

Step 2: Data sync

The second step is to copy the data from the monolith to the new service. We use a script that iterates through all the data in the monolith and pushes them through the insert endpoint into the service.

You might wonder why we do step 1 before step 2. The reason is to ensure that ongoing updates are already reflected in the service while the script is running. After this step, the data in the service is in sync with the data in the monolith. You might want to do some consistency checks to make sure you don’t have corrupted or lost data. We use a script similar to the sync-script to ensure consistency.

You might also be interested in: Tackling business complexity with strategic domain-driven design

Step 3: Change the source of truth

Once you are confident the service holds the correct data, we flip the switch, and the monolith starts reading from the service. Whenever we are loading an object in the monolith, we get it from the service using the interfaces.

You might ask why the getList()-function in the figure is still reading from the database from the monolith. This is one of the problems of the monolithic application. The domains are not strictly separated, and there might still be queries to tables that belong to the new service.

The cost of refactoring all those queries is exceptionally high. Considering that other domains will also split out of the monolith in the near future, we decided to leave those data accesses untouched. This implies that the monolith needs to hold a copy of the data from the new service. We go deeper into this in the next step.

You might also be interested in: The art of scaling booking reference numbers

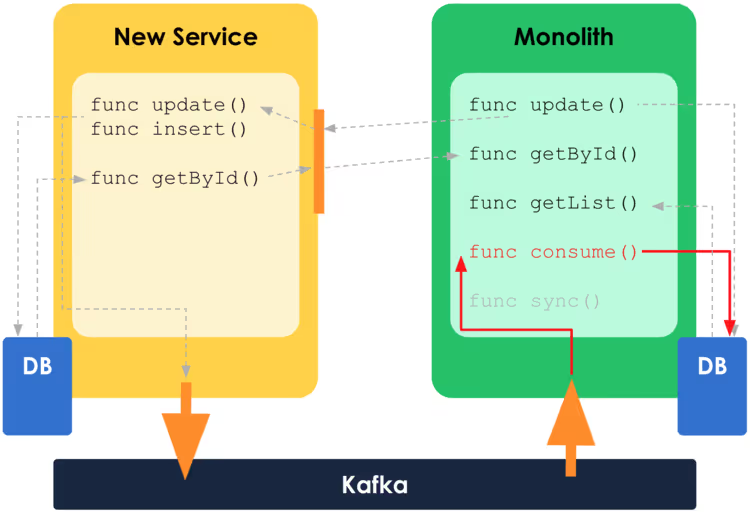

Step 4: Consume change stream

In order to keep a local copy of the data from the new service, the monolith needs to consume the change stream from Kafka.

There is a challenge with this step. As you can see in the figure, changes are written twice to the database of the monolith, first, directly during the update-function.

Second, while consuming the change stream, make sure those updates are idempotent and are not harming the system. This is not always trivial. Once we are successfully consuming the change events, we move on to the final step.

You might also be interested in: Our career path for engineers

Step 4: Stop writing to the monolith

The last step to completely hand over data ownership to the new service is to stop writing directly to the database of the monolith in the update-function.

Congratulations, you’re done.

Conclusion

After the five steps, the primary data is in the new service, and all updates are done directly through the services’ interfaces. The monolith still holds a copy of the data in order to make sure we keep other, unrelated parts of the monolith working.

As a following step, we will reduce the data that the monolith needs to hold to a minimum. Not all data is needed in other parts of the monolithic application. Additionally, we and other mission teams are continuously migrating more domains out of the monolith into their own services, using the playbook shared in this article.

.JPG)

.JPG)