How we automate App Release Monitoring at GetYourGuide

Learn about our innovative App Release Monitoring (ARM) system that ensures the health and stability of our iOS and Android app releases. Explore how ARM automates tasks, saving engineering time, and accelerates our release cycle from 7 days to just 3.


Key takeaways:

Nowadays, automation is a buzzword, and automating a process opens growth opportunities. App release monitoring (ARM) is no exception. ARM represents a suite of innovative tools designed to monitor the health and stability of iOS and Android app releases. These tools provide real-time updates by sending notifications to Slack channels and logging the app's status throughout the release process.

At GetYourGuide, we use ARM to monitor the rollout of our Android and iOS apps, starting from the moment they are submitted to Google Play or the Apple App Store. The system relies on app events, such as sessions and crashes, exported from Analytics and Crashlytics to BigQuery.


Why we built ARM

- Saving precious engineering time. Previously, we had a manual process to handle release tasks. Each platform had what GetYourGuide calls a “release captain”, who was responsible for halting releases, increasing rollout percentages, and notifying teams of updates. The release captains checked Firebase and Datadog monitors for crash rate increases or bugs. Now all of these tasks are fully automated! More details can be found here!

- Faster releases. Thanks to the efficiency of ARM, we have accelerated our rollout process, moving to a weekly release cycle. When everything is running smoothly, we can even fast-track releases, cutting our release cycle from 7 days down to 3.

Before we dive into the tools, we need to explain a few key concepts. We know this section might not be the most exciting, but stick with us: it’ll be worth it!


Key metrics used in App Release Monitoring

Metrics play a key role in helping us evaluate the overall health, performance and stability of our app releases. ARM relies on two key metrics to monitor and ensure a smooth rollout during the release cycle.

- Crash-free sessions rate (CFSR): The share of sessions that do not end in a crash, out of all sessions. In Firebase, a session runs from a cold start until the app is destroyed or closed by the user.

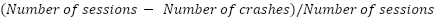

The formula:
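Written out from the definition above, and assuming the standard form of the metric (the symbols are our shorthand, not Firebase field names):

$$
\mathrm{CFSR} = \frac{\text{sessions} - \text{crashed sessions}}{\text{sessions}} \times 100\%
$$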

- Crash-free users rate (CFUR): The share of users who do not experience a crash, out of all users. By a user, we mean one app installation per device.

The formula:
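By analogy with the sessions metric, and again assuming the standard form (the symbols are our shorthand):

$$
\mathrm{CFUR} = \frac{\text{users} - \text{users with a crash}}{\text{users}} \times 100\%
$$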

Defining SLOs (Service-Level Objectives)

Our tools are also based on a set of service-level objectives (SLOs). An SLO is a target agreed upon by the tech team that sets the minimum acceptable level of a metric, and it acts as a key benchmark for app stability, performance, and availability. For example, the crash-free sessions rate, which is a stability SLO, needs to be at least 99.95%.

To measure whether we’re meeting these objectives, we use service-level indicators (SLIs). An SLI is a real-time measurement of a specific metric, such as the crash-free sessions rate. The goal is for each SLI to stay at or above its SLO.

At GetYourGuide, we follow a company-wide standard that all SLOs must meet the 99.95% threshold for critical metrics like crash-free sessions and latency.

Understanding Margin of Error (MoE):

MoE is a statistical concept that quantifies the uncertainty of inferring a property of the total population from sampled data. The higher the MoE, the less reliable the sampled estimate is. It is used to build the confidence interval, a range whose width is twice the MoE:

- Lower bound: measured value - MoE

- Upper bound: measured value + MoE

With the confidence interval, we can say that, at the chosen confidence level, the true value for the whole population lies between the lower bound (measured value - MoE) and the upper bound (measured value + MoE).


MoE is affected by these three factors:

- Sample size (n): the larger the sample size, the smaller the MoE

- Confidence level: It expresses how confident we are that the true value falls within the confidence interval. The higher the confidence level, the wider the interval has to be for the same sample.

- Z-score (z): It represents how many standard deviations a measured value is from the mean of the total population. It depends on the distribution of the data and on the confidence level. For a normal distribution with a confidence level of 95%, it is 1.96, and it increases with the confidence level.


MoE formula

- P: The sample proportion, i.e. the share of the sample that has the property being measured
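For reference, the standard margin of error for a sample proportion can be written as follows. We assume this is the form used here, with P expressed as a fraction between 0 and 1; it reproduces the numbers in the worked example later in this article.

$$
\mathrm{MoE} = z \sqrt{\frac{P\,(1 - P)}{n}}
$$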

We will discuss how we use MoE later on 🙂

App structure

Our app is built using Kotlin, a powerful and developer-friendly language that ensures easy maintenance and scalability. It consists of different tasks, or jobs, which all inherit from a parent Job and are each assigned an ID.


When running the app, all the jobs are instantiated and bound to a named dependency by Koin.


At runtime we have different job instances and run the desired one using Gradle. Each job is scheduled and executed within a separate GitHub Action. The action builds and runs the app by specifying the job ID and providing the necessary secrets and variables.
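The structure described above could look roughly like the following minimal sketch. All names here (`ArmJob`, `JobId`, `jobRegistry`, `runJob`) are hypothetical, and a plain map stands in for the named Koin bindings so that the example stays self-contained.

```kotlin
// Hypothetical sketch: names are illustrative, not ARM's real classes.

/** Identifier used to select a job (assumed to be passed in by the CI workflow). */
enum class JobId { RELEASE_MONITORING, RELEASE_STATUS_REPORTER, ANDROID_ROLLOUT_UPDATE }

/** Parent type that every job inherits from; each job carries its ID. */
abstract class ArmJob(val id: JobId) {
    abstract fun run(): String
}

class ReleaseMonitoringJob : ArmJob(JobId.RELEASE_MONITORING) {
    override fun run() = "monitoring releases"
}

class ReleaseStatusReporterJob : ArmJob(JobId.RELEASE_STATUS_REPORTER) {
    override fun run() = "reporting release status"
}

class AndroidRolloutUpdateJob : ArmJob(JobId.ANDROID_ROLLOUT_UPDATE) {
    override fun run() = "updating Android rollout"
}

/** Plain-Kotlin stand-in for named dependency bindings: one instance per job ID. */
val jobRegistry: Map<JobId, ArmJob> =
    listOf<ArmJob>(ReleaseMonitoringJob(), ReleaseStatusReporterJob(), AndroidRolloutUpdateJob())
        .associateBy { it.id }

/** Resolves the job for the raw ID string (e.g. a program argument) and runs it. */
fun runJob(rawId: String): String {
    val id = JobId.valueOf(rawId) // throws IllegalArgumentException for unknown IDs
    val job = jobRegistry[id] ?: error("No job registered for $id")
    return job.run()
}
```

With this shape, a workflow only needs to pass a different ID string to select a different job, for example `runJob("RELEASE_MONITORING")`.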


We have three main jobs running on GitHub Actions, each with its own duties:

- Release Monitoring

- Release status reporter

- Android rollout update

Release Monitoring job

This tool continuously monitors the app's health status after submission to the stores until the release is completed or rolled out to all users. It halts a release if the crash-free sessions SLOs are unmet and fast-tracks a release if the SLI indicates the release is performing well. The cron job runs this tool every 15 minutes from Monday to Wednesday and every hour from Thursday to Sunday.

This job uses three components:

- Monitoring Rule Executor

- Mobile Stores

- Log Uploader


The process starts by using the Mobile Stores component to fetch app releases, which are then passed to the Monitoring Rule Executor. The Rule Executor holds a list of monitoring rules; it iterates over them and applies each one to the app release based on the rule's properties.
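As a rough illustration of this flow, here is a minimal sketch of a rule executor. The types (`AppRelease`, `MonitoringRule`, `RuleOutcome`, `RuleResult`) and the `appliesTo` check are assumptions made for the example, not the actual ARM interfaces.

```kotlin
// Hypothetical sketch; types and names are illustrative assumptions.

data class AppRelease(val platform: String, val version: String)

/** Possible outcomes of applying one rule to one release. */
enum class RuleOutcome { NO_ACTION, WARN, HALT, FAST_TRACK }

/** A rule decides whether it applies to a release and, if so, evaluates it. */
interface MonitoringRule {
    val name: String
    fun appliesTo(release: AppRelease): Boolean
    fun evaluate(release: AppRelease): RuleOutcome
}

data class RuleResult(val release: AppRelease, val ruleName: String, val outcome: RuleOutcome)

/** Iterates over the rules and applies each applicable one to every fetched release. */
class MonitoringRuleExecutor(private val rules: List<MonitoringRule>) {
    fun execute(releases: List<AppRelease>): List<RuleResult> =
        releases.flatMap { release ->
            rules
                .filter { it.appliesTo(release) }
                .map { rule -> RuleResult(release, rule.name, rule.evaluate(release)) }
        }
}
```

Because the executor only depends on the `MonitoringRule` interface, adding a new check means adding one more implementation to the list.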

The release monitoring job is designed to be flexible, allowing new rules to be added easily for checking and validating releases. Currently, we have three rules in place:

- Crash-free sessions halting rule

- Crash-free sessions fast-track rule

- Crash-free users warning rule

Crash-free sessions halting rule

The Crash-Free Sessions Halting Rule is a critical component of our app release monitoring system. It uses its subcomponents to fetch the crashes and sessions, calculates the CFSR, and makes a decision based on it. If the CFSR falls below the defined Service Level Objective (SLO), the release is automatically halted, or a warning is sent to our team via Slack.

It depends on the following subcomponents:

Crash-free use case:

The Crash-free use case fetches the number of crashes and sessions for the entire release cycle, from when the release is approved until the execution time of the query. Two subcomponents power the data collection:

- BigQuery Executor

- Firestore database repo

It fetches crashes and sessions using the BigQuery Executor every 15 minutes, with a time range starting from the beginning of the day up to the current execution time, and stores the results in the Firestore database. This lets us aggregate the previously stored data with the latest query results on each run. We do this to reduce costs: the cost of a BigQuery query depends on the volume of processed data, and since the job runs every 15 minutes, querying the whole release period from BigQuery on every run would be expensive. With partial querying, we decrease the volume of data that needs to be scanned.
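The partial-query idea can be sketched as follows. This is a simplified illustration under our own assumptions: an in-memory map stands in for the Firestore database, the `queryToday` lambda stands in for the BigQuery call, and every name is hypothetical.

```kotlin
// Hypothetical sketch; an in-memory map replaces the real database and query layer.

/** Crash and session counts for one query window. */
data class Counts(val crashes: Long, val sessions: Long) {
    operator fun plus(other: Counts) = Counts(crashes + other.crashes, sessions + other.sessions)
}

/** Stores one entry per day, keyed by an ISO date string such as "2024-05-01". */
class DailyCountsStore {
    private val byDay = mutableMapOf<String, Counts>()

    /** Each run re-queries the day from its start, so the newest result replaces the old one. */
    fun upsert(day: String, counts: Counts) {
        byDay[day] = counts
    }

    /** Aggregates all stored days into totals for the whole release cycle. */
    fun total(): Counts = byDay.values.fold(Counts(0, 0)) { acc, c -> acc + c }
}

/** One monitoring run: query only today's data, store it, return cycle-wide totals. */
fun runOnce(store: DailyCountsStore, today: String, queryToday: (String) -> Counts): Counts {
    store.upsert(today, queryToday(today))
    return store.total()
}

/** CFSR in percent; returning 100 when there are no sessions yet is our assumption. */
fun crashFreeSessionsRate(c: Counts): Double =
    if (c.sessions == 0L) 100.0 else (1.0 - c.crashes.toDouble() / c.sessions) * 100.0
```

Only the current day is ever re-queried, so the volume scanned per run stays bounded by one day of data regardless of how long the release has been running.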

Crashes and Sessions are stored in different datasets.

- The Crashlytics dataset covers the whole Firebase project, and each project can be composed of multiple applications, each with its own table. There are two types of tables for crashes: batch and real-time tables. Batch tables are not suitable for monitoring since they are only exported once per day. Instead, we rely on real-time tables, which follow a naming convention like [package_name]_[platform]_REALTIME. There are three types of errors in the crash tables: Fatal (crash), NonFatal (logs) and ANR. ANRs can occur while the app is in the foreground or in the background. We treat foreground ANRs as crashes since they have the same impact on the user. The process_state column lets us query for the foreground ANRs.

- The Analytics dataset contains data from Firebase Analytics events exported to BigQuery, which we query for the session event. Similar to Crashlytics, there are two types of tables: real-time and daily tables. We use the real-time tables, which follow a naming convention like events_intraday_YYYYMMDD.

Release halter

The Release Halter component uses the MobileAppStore component to halt an in-progress release and then sends a Slack message to the main channel. The MobileAppStore uses Google Play publisher and iOS AppStore APIs to halt a release.

A halt may come after a warning, so we need to find the last message and send the Halt message as a reply.

Each time we halt a release, we save information about the release, such as the app version and the crash-free sessions SLO and SLI. This data is invaluable for debugging and for tracking how many hotfixes we ship per platform over a month or a year.

Note: There is a big difference between Android and iOS when it comes to halting a release. Halting an Android release removes the faulty version from the Google Play Store, making it inaccessible to users. On iOS, however, halting only prevents the auto-update to the problematic version, which remains available for download from the App Store.

The Release Alert Monitor functions similarly to the halter but only sends a warning message without pausing the release.

SLO provider

The SLO provider plays a critical role in determining whether a release should be halted or allowed to proceed based on real-time data. It continuously evaluates app stability by calculating the Margin of Error (MoE) based on the number of user sessions and crash data. This process helps determine if the app release is stable enough to proceed or if corrective action, such as halting the release, is required. Usually, we alert when the SLI reaches half of the halt SLO.

MoE fast-tracking rule

We use this rule to fast-forward a release and increase the rollout to 100% of the users. Like the previous rule, it runs every 15 minutes. We only check for fast-track once in the release cycle, which happens on the second day of the release.

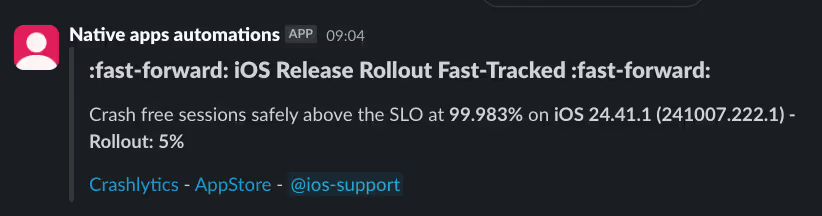

We calculate the MoE using crash-free sessions data for the whole release cycle. The previous job already saves the data for the entire release cycle, such as the crash-free sessions rate and the number of sessions, in the Firestore database, so we fetch it and use it to calculate the MoE. If the release can be fast-tracked, we send a Slack message like the following:

How do we calculate the MoE to fast-track, halt or warn?

To calculate the MoE, we use the crash-free sessions rate (CFSR) as the sample proportion (P) and the number of sessions as the sample size (n). Imagine we have calculated a CFSR of 99.1% from 5K sessions in the BigQuery result. Let's take a confidence level of 99%, so the z-score is 2.576. We also assume that CFSR is approximately normally distributed when the sample size is sufficiently large.

In theory, when the sample size is small, crashes are better described by a Poisson distribution, since they occur independently of each other. However, in our case, the sample size grows quickly, so we can use the normal distribution as the base distribution.

Now let's calculate the confidence interval. For these inputs, the MoE is roughly 0.344 percentage points, which gives:

- Upper bound: 99.1 + 0.344 => 99.444

- Lower bound: 99.1 - 0.344 => 98.756
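The 0.344 figure can be reproduced with the standard margin-of-error formula for a proportion, which we assume is the one applied here. With P = 0.991, n = 5000 and z = 2.576:

$$
\mathrm{MoE} = z \sqrt{\frac{P\,(1 - P)}{n}} = 2.576 \sqrt{\frac{0.991 \times 0.009}{5000}} \approx 2.576 \times 0.001336 \approx 0.00344
$$

That is about 0.344 percentage points, which is added to and subtracted from the measured 99.1.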

So statistically, we want to infer, at the chosen 99% confidence level, whether the CFSR will stay above our SLO of 99.95% in a fully released app.

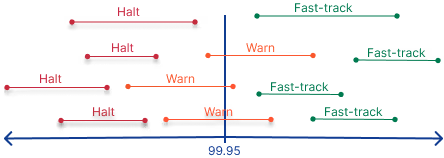

As the image shows, the confidence interval, a range between a minimum and a maximum value, might contain the SLO, lie entirely above it, or lie entirely below it.

In our example, the upper bound is lower than our SLO, so this release is not a candidate for fast-tracking.

Here is our approach to a release:

- We fast-track when the lower bound exceeds 99.95%.

- We halt the release when the upper bound is lower than 99.95%.

- We warn when the lower bound is less than 99.95% and the upper bound is higher than 99.95%.

The fast-track approach rewards building a more stable app, with a CFSR safely above the SLO.
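The three rules above can be sketched in a few lines of Kotlin. This is an illustration under stated assumptions: the function and enum names are hypothetical, the MoE uses the standard formula for a proportion, and a bound that lands exactly on the SLO is treated as a warning because the rules do not specify that case.

```kotlin
// Hypothetical sketch; names and boundary handling are assumptions.

enum class ReleaseDecision { FAST_TRACK, HALT, WARN }

/** Margin of error in percentage points for a rate given in percent over [sessions] samples. */
fun marginOfError(ratePercent: Double, sessions: Long, zScore: Double): Double {
    require(sessions > 0) { "sessions must be positive" }
    val p = ratePercent / 100.0
    return zScore * kotlin.math.sqrt(p * (1.0 - p) / sessions) * 100.0
}

/** Compares the confidence interval around the measured CFSR with the SLO. */
fun decide(
    cfsrPercent: Double,
    sessions: Long,
    sloPercent: Double = 99.95,
    zScore: Double = 2.576, // 99% confidence level
): ReleaseDecision {
    val moe = marginOfError(cfsrPercent, sessions, zScore)
    val lower = cfsrPercent - moe
    val upper = cfsrPercent + moe
    return when {
        lower > sloPercent -> ReleaseDecision.FAST_TRACK // whole interval above the SLO
        upper < sloPercent -> ReleaseDecision.HALT       // whole interval below the SLO
        else -> ReleaseDecision.WARN                     // interval contains the SLO
    }
}
```

For the example in this section, `decide(99.1, 5000)` falls into the halt branch, since the upper bound of roughly 99.444 is below 99.95.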

Crash-free users rule

This rule doesn't act on the current release but only provides helpful information about its current status. It acts as a guardrail against false positives.

Some crashes happen only on a specific app version or a specific device and do not affect all users. This can happen in the Android ecosystem due to the variety of devices and customised OSs per brand. In this case, there is usually a meaningful difference between the crash-free sessions and crash-free users metrics, which helps us detect such cases and possibly continue the release.

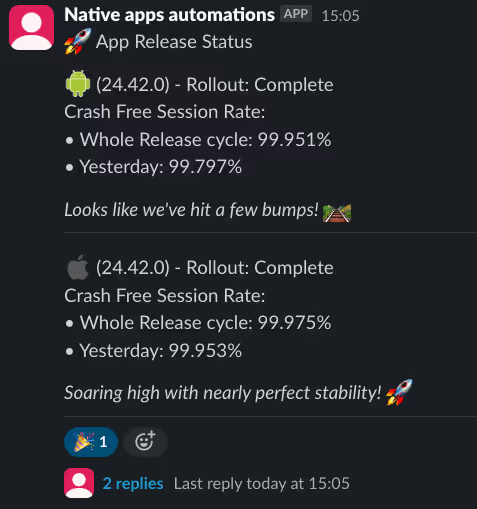

Release status reporter

This tool provides a daily report at noon, detailing the crash-free session rate, current rollout percentage, and upcoming rollout percentage. It has a similar approach to the previous job but uses batch tables instead of real-time tables. This allows us to get a comprehensive overview of the release and assess how a fix or hotfix has impacted the overall health of the release.

The reports cover the data for both the last day and the whole release cycle. It also reports the top three crashes on each release. This increases visibility throughout the engineering department since everyone checks the channel and is notified of app releases. Most EMs and PMs also found these reports very handy.

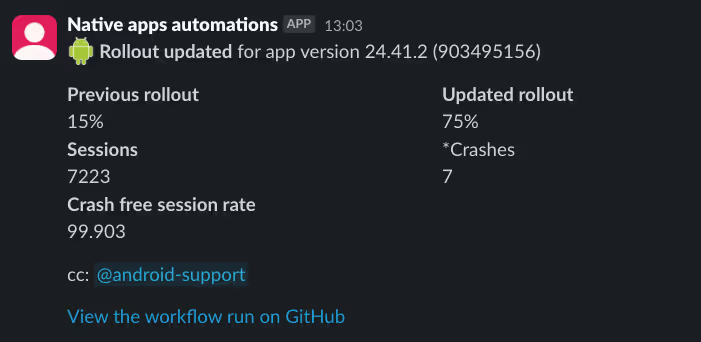

Android rollout update

This job increases the rollout percentage by the defined values and reports the latest rollout percentage and the upcoming rollout update level in the channel. It only applies to Android since the iOS app’s rollout is increased automatically by the App Store, and we don't have control over it.

We publish the Android app in a staged rollout using Fastlane in GitHub Actions. We run the job once per day at noon.

We heavily use the Android publisher SDK in this job. We first do the following checks:

- If there is an in-progress release

- If there is a minimum number of sessions available

If these two conditions are met, we fetch and increase the latest user fraction. The user fraction steps typically are 0.15, 0.75 and 1, so on the first day of release, we will release to 15% of users, on the second day of release to 75% and on the third day to 100% and complete the release.
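The stepping logic can be sketched like this. The function names and the `minSessions` default are made up for illustration; the real job reads and writes the user fraction through the Android publisher SDK, which is deliberately left out here.

```kotlin
// Hypothetical sketch; names and the session threshold are assumptions.

/** Typical staged-rollout steps from the article: 15%, 75%, then 100%. */
val userFractionSteps = listOf(0.15, 0.75, 1.0)

/** Returns the next step strictly above [current], or null once the rollout is complete. */
fun nextUserFraction(current: Double, steps: List<Double> = userFractionSteps): Double? =
    steps.sorted().firstOrNull { it > current }

/** Applies the two pre-checks before picking the next fraction. */
fun plannedUserFraction(
    hasInProgressRelease: Boolean,
    sessions: Long,
    current: Double,
    minSessions: Long = 1_000, // illustrative threshold, not the real value
): Double? =
    if (hasInProgressRelease && sessions >= minSessions) nextUserFraction(current) else null
```

A `null` result means there is nothing to do on this run: either a pre-check failed or the release is already at 100%.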

If we find a hotfix, we apply the user fraction of the latest release to it. This is very helpful for improving the state of the app release.

Why don’t we have the iOS rollout update automation?

- We don't have control over the rollout of the iOS release: the App Store automatically increases the rollout percentage, and the release is rolled out gradually over seven days. More information can be found here.

Conclusion

App Release Monitoring (ARM) has improved the app release process at GetYourGuide, bringing significant benefits:

- Efficiency: Automating release tasks saves valuable engineering time for all team members in the long term.

- Faster Releases: The release cycle can be shortened from 7 days to as few as 3 when a release qualifies for fast-tracking.

- Data-Driven Decisions: Utilizing key metrics and statistical concepts for informed release management.

- Proactive Monitoring: Continuous health checks ensure quick responses to issues.

- Enhanced Visibility: Daily reports provide valuable insights across the engineering department.

By implementing ARM, we have not only streamlined our app release process but also enhanced the overall quality and stability of our mobile applications. This automation has been a great learning and development experience for app engineers at GetYourGuide: it frees up time and opens up a new area within app development for bringing innovative, forward-thinking ideas to the table. As mobile applications continue to be crucial in the travel industry, systems like ARM will be key to maintaining a competitive edge through high-quality, frequently updated apps.

