Inside our Recommender System: Data Pipeline Execution and Monitoring

Key takeaways:

Our machine-learning recommender system helps customers find products that complement their travel bookings. Maximillian Jenders, senior data scientist for the Recommendations and Relevance (RnR) team, shares how he took the system’s data pipeline monitoring to the next level.

Building on an infrastructure of Apache Spark clusters, Airflow data pipeline management, and Kafka event streams, he improved the existing data pipeline tracking and introduced monitoring metrics and KPIs.

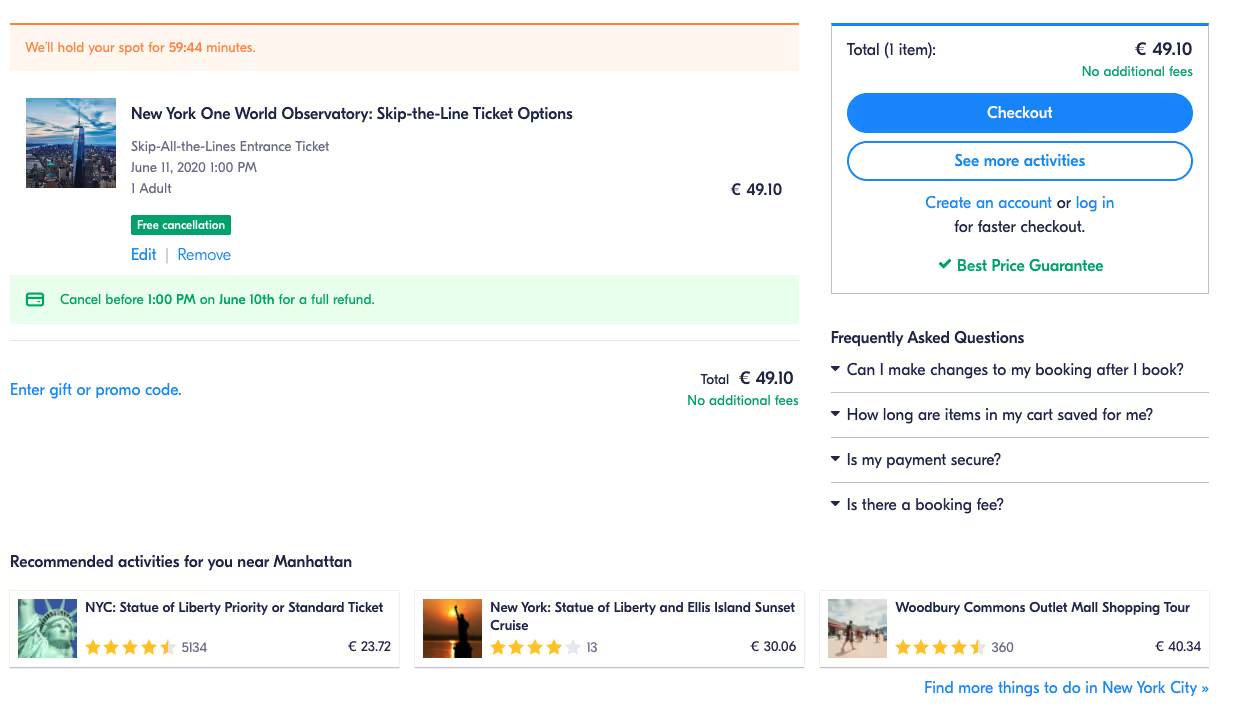

The RnR team recommends tours and activities to customers based on their interests. If someone is browsing Hop-on Hop-off sightseeing tours in New York on our platform, they may also be interested in complementary products like tickets to the Empire State Building.

As a senior data scientist, I iterate and improve these recommendation algorithms in close cooperation with the rest of my team and our stakeholders. For example, I work with our FinTech team for recommendations displayed at the checkout or the CRM team whenever they use our recommendations for content in email campaigns.

We employ different kinds of recommendation algorithms at various touchpoints on our app and website to support travelers at different stages of their decision-making process.

Today, I'll share how the RnR team improved our data pipeline system from purely monitoring the technical execution status of data pipeline steps to more holistic monitoring of metrics and KPIs aligned with our company's objectives.

Why we needed a new approach to data pipeline monitoring

I've been a data scientist at our company for nearly three years. I started out doing performance marketing in the Paid Search team. After two years, I was looking to tackle problems in a different domain. After discussing options for my professional development with my manager, I joined the RnR team. There, I became the data science owner of all of our recommender systems.

Based on my previous experience working in the Performance Marketing team, I wanted to get a better overview of the monitoring of the existing systems and pipeline in the Recommendations and Relevance team.

In Performance Marketing, automated monitoring is critical because the systems manipulate advertising budgets. Missing a problem in our data pipelines might result in overpaying or losing a significant customer acquisition opportunity. While errors in the Recommendations pipeline might not lead to overpaying, they could still incur the serious opportunity cost of missed revenue or a degraded customer experience.

The Data Product organization within our company agreed on 15 data science principles we live by. Improving the monitoring system is a reflection of our fourth principle: "Quality, testing, and monitoring — As a mature organization, we care about maintainability and quality assurance."

An overview of our recommendations pipeline

As mentioned above, we deliver different types of recommendation algorithms at various touchpoints. On the technical side, creating and serving these recommendations can be split into three distinct stages. The first two are executed on Databricks-based Apache Spark clusters orchestrated via Airflow:

- Data generation: Data is at the heart of any machine learning project. To compute meaningful recommendations, we pull data from various input sources that are filled and owned by multiple parts of the business, such as the activities customers have looked at and booked, the categories of these activities, and location data.

- Recommendation computation: Given the data from the first step, we can now generate activity recommendations. This is where we apply machine learning techniques and validate the accuracy of our recommendations.

- Service: Our recommendations are served via a microservice, which handles requests generated during a customer's journey throughout our website or app. It also logs the recommendations we served to Kafka.

Status quo: Execution monitoring

When I joined the RnR team, they were already managing and tracking the execution of our data pipelines through Airflow. Should a pipeline crash, they would automatically be alerted and could address issues quickly. The underlying causes of crashes might include changes to the schema of underlying data or cluster resources becoming unavailable.

However, this did not guarantee that the quality of our recommendations was holding up. Shortly after I joined, an incident led to unwanted and drastic changes in the search ranking of our activities. This highlighted that corrupted or missing (partial) input data might also have a similar impact on the quality of recommendations shown.

Canary testing: Improving content and technical monitoring

A first and simple step to improving content and technical monitoring was to create output canaries. These are quick checks that compare a freshly computed batch of recommendations with previous ones. If a canary detects significant changes (for example, in the ranking of our recommendations for activities, the number of activities we generate recommendations for, or how many tours we recommend per activity), it stops the upload of the new data and fails loudly, allowing us to investigate.
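The kind of check described above could look roughly like the following. This is a minimal sketch, not our production code: the batch format (a dict mapping an activity ID to its ranked list of recommended IDs), the names `CanaryThresholds`, `CanaryFailure`, and `run_output_canary`, and all threshold values are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CanaryThresholds:
    # Hypothetical limits; real values would be tuned on historical batches.
    max_coverage_change: float = 0.10  # relative change in number of activities covered
    max_length_change: float = 0.10    # relative change in average recommendations per activity
    min_top_k_overlap: float = 0.50    # mean share of the old top-k still in the new top-k
    top_k: int = 5


class CanaryFailure(Exception):
    """Raised to stop the upload of a suspicious batch."""


def relative_change(old, new):
    if old == 0:
        return 0.0 if new == 0 else float("inf")
    return abs(new - old) / old


def average_length(batch):
    return sum(len(recs) for recs in batch.values()) / len(batch) if batch else 0.0


def mean_top_k_overlap(previous, current, k):
    # Only activities present in both batches can be compared on ranking.
    overlaps = []
    for activity in previous.keys() & current.keys():
        old_top = set(previous[activity][:k])
        new_top = set(current[activity][:k])
        if old_top:
            overlaps.append(len(old_top & new_top) / len(old_top))
    return sum(overlaps) / len(overlaps) if overlaps else 0.0


def run_output_canary(previous, current, thresholds=CanaryThresholds()):
    """Compare a new batch with the previous one and fail loudly on large shifts."""
    if not previous:
        return  # nothing to compare against on the very first run

    problems = []
    if relative_change(len(previous), len(current)) > thresholds.max_coverage_change:
        problems.append(f"activity coverage changed: {len(previous)} -> {len(current)}")

    old_len, new_len = average_length(previous), average_length(current)
    if relative_change(old_len, new_len) > thresholds.max_length_change:
        problems.append(f"recommendations per activity changed: {old_len:.2f} -> {new_len:.2f}")

    overlap = mean_top_k_overlap(previous, current, thresholds.top_k)
    if overlap < thresholds.min_top_k_overlap:
        problems.append(f"top-{thresholds.top_k} ranking overlap too low: {overlap:.2f}")

    if problems:
        raise CanaryFailure("; ".join(problems))
```

In a pipeline, a step like this would sit between computation and upload, so that an uncaught `CanaryFailure` marks the task as failed and triggers the existing execution alerts.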

In general, these output canaries help us place more trust in recommendations we compute. In case an incident happens (for example, a stakeholder notices that we suddenly serve very unexpected and low-quality recommendations on their touchpoint), our priorities are:

- confirming the issue

- understanding the underlying condition

- fixing it as fast as possible.

Knowing that we have canaries in place that validated the previous output, we have more confidence in the quality and validity of that data and can start a rollback sooner. Once the customer is no longer impacted, we can then spend time diving deeper into the root issues, fixing them, and adding processes and monitoring to ensure they do not happen again.

Measuring customer interactions

While this safety net helps, it doesn't take our business metrics and goals into account. We know how many recommendations we serve, but have no useful insights into their quality and how customers interact with them.

Luckily, there are plenty of real-time data sources that we could leverage. For every touchpoint where customers can encounter our recommendations, we now measure not only how many recommendations were served, but also how many customers clicked on them and eventually converted. A dashboard lets us estimate how healthy our systems are at the moment, and by comparing against previous time frames, we can spot potential issues or shifts in customer behavior.

While it may sound straightforward, creating such a dashboard required some considerations:

- How often do we want to update it?

- How large are normal fluctuations and seasonal patterns (e.g., weekly, monthly)?

- How do we deal with attributions when customers interact with multiple recommendations during their journey on our website?

- How do we deal with attribution lag, e.g., the time difference between customers clicking on a recommendation and booking it sometime later? Due to this lag, recent metrics will naturally look worse than older ones.
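To make the attribution-lag point concrete, here is a small sketch of how per-touchpoint funnel rates could be computed while keeping recent days out of the conversion rate. The `DailyCounts` record, the `summarize_funnel` function, and the seven-day maturity window are assumptions for illustration. The sketch also assumes bookings have already been attributed to a click day and touchpoint, which sidesteps the multi-touch attribution question raised above.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class DailyCounts:
    day: date          # day the recommendations were served and clicked
    touchpoint: str    # e.g. "checkout" or "email"
    served: int
    clicked: int
    booked: int        # bookings attributed to clicks from this day


def summarize_funnel(rows, today, lag_days=7):
    """Click-through rate over all days, conversion rate over mature days only.

    Days younger than `lag_days` are excluded from the conversion rate because
    their bookings are still trickling in and would make the rate look worse.
    """
    cutoff = today - timedelta(days=lag_days)
    totals = defaultdict(lambda: {"served": 0, "clicked": 0, "mature_clicked": 0, "booked": 0})
    for row in rows:
        t = totals[row.touchpoint]
        t["served"] += row.served
        t["clicked"] += row.clicked
        if row.day <= cutoff:
            t["mature_clicked"] += row.clicked
            t["booked"] += row.booked

    return {
        touchpoint: {
            "click_through_rate": t["clicked"] / t["served"] if t["served"] else None,
            "conversion_rate": t["booked"] / t["mature_clicked"] if t["mature_clicked"] else None,
        }
        for touchpoint, t in totals.items()
    }
```

Excluding immature days is the simplest option. It trades freshness for comparability, which is why forecasting the lag is a natural next step.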

What's next in monitoring pipeline execution and health?

While we have come a long way, there are still more improvements we're working on:

1. Creating real-time monitoring of health metrics

While having an up-to-date overview of KPIs is a great stepping stone, it still relies on manual tracking and interaction. Next, we will work on expanding this into automated alerts and outlier detection.

Automation will enable us to react faster to changes, but we might be at risk of responding to false positives. By forecasting the attribution lag mentioned above, and more generally, by predicting the final KPIs, we would both shorten the reaction time and detect incidents more accurately.
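As one possible starting point for such alerts, a KPI value can be compared against its own recent history with a robust outlier score. The sketch below uses the median and the median absolute deviation (MAD), a common technique chosen here for illustration rather than something we have settled on. The function names and the 3.5 threshold are assumptions. To respect weekly seasonality, `history` would contain values from the same weekday in previous weeks.

```python
from statistics import median


def robust_z_score(history, value):
    """Modified z-score of `value` relative to `history`, based on median and MAD."""
    values = list(history)
    if not values:
        raise ValueError("history must contain at least one value")
    center = median(values)
    mad = median([abs(x - center) for x in values])
    if mad == 0:
        # All historical values are (nearly) identical: any deviation is extreme.
        return 0.0 if value == center else float("inf")
    # 0.6745 rescales the MAD so the score is comparable to a standard z-score
    # for normally distributed data.
    return 0.6745 * abs(value - center) / mad


def is_outlier(history, value, threshold=3.5):
    """Flag `value` if it deviates from `history` by more than `threshold`."""
    return robust_z_score(history, value) > threshold
```

A median-based score is less sensitive to a single past incident in the history than a mean and standard deviation would be, which helps keep false positives down.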

2. Adding monitoring to input data

We currently have automated monitoring of the output of our recommender systems. Over time, we'd also like to add similar monitoring to input data. We are starting with pure cardinality (e.g., number of data points) for events we're consuming, like bookings or page views. Later, expanding this to look at the distribution of the data (e.g., mode, median, variance) will help us detect whether underlying tables change significantly and rapidly, warranting an investigation.
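A rough sketch of what such an input check could look like, starting with cardinality and extending to simple distribution statistics. The `profile` and `drifted_metrics` names, the choice of a numeric column as input, and the 20% tolerance are assumptions for illustration only.

```python
from statistics import median, pvariance


def profile(values):
    """Summarize one numeric input column: cardinality plus basic distribution stats."""
    values = list(values)
    if not values:
        return {"count": 0, "median": None, "variance": None}
    return {
        "count": len(values),
        "median": median(values),
        "variance": pvariance(values),
    }


def drifted_metrics(baseline, current, tolerance=0.20):
    """Return the names of metrics whose relative change exceeds `tolerance`."""
    drifted = []
    for name, old in baseline.items():
        new = current.get(name)
        if old is None or new is None:
            changed = old != new          # a metric appeared or disappeared
        elif old == 0:
            changed = new != 0            # relative change is undefined at zero
        else:
            changed = abs(new - old) / abs(old) > tolerance
        if changed:
            drifted.append(name)
    return drifted
```

For example, comparing `profile(todays_booking_values)` with `profile(last_weeks_booking_values)` (both hypothetical inputs) and getting back `["count"]` would indicate that the number of consumed events shifted by more than the tolerance while the distribution stayed stable.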
