Improving User Experience by Boosting Runtime Performance (Part 4 of 4)

In the last of a four-part series, Senior iOS Engineer Bruno Rovea expands on how the team recently gave the GetYourGuide iOS app a runtime performance overhaul, by taking a closer look at networking.


In the last three blog posts we’ve discussed several of the key improvement areas for boosting runtime performance. All based on tweaks we made to our own iOS app, they include images, layers, multithreading, and more. And while networking is a little different from these defined areas, and to some extent, outside of our control, it’s nevertheless an important consideration in optimizing latency for a smooth user experience. Linked to that are topics of caching and using Multipath TCP to maintain the best connection possible – in other words, everything you need to make your app run seamlessly.


Improving UX in iOS: Networking, TCP Connections Explained, and why Prioritizing Service Type is Key

As discussed at the beginning of this series, any latency delta above 100 milliseconds (ms) can affect the user experience and, more importantly, the business. Although networking doesn't affect the Render Loop discussed in the first installment, it plays a significant role here: it is the slowest component of the latency delta, slower than anything else we do locally.

Nevertheless, networking is frequently overlooked when building an app, since we don't control the external environment: the interaction between the user's network service quality and our backend services. But even when our backend services are as optimized as possible and the network service is working properly, there are still improvements we can make on the client side, namely in the app itself, or by keeping the services and the app in sync.

It's also important to understand how to measure the efficiency of an internet connection. While download speed, upload speed, and idle latency matter, we should also look at how many round trips per minute (RPM) the connection can complete under heavy load. This metric depends on each user's environment, so every user gets a different result depending on factors like their ISP, geographic location, and signal strength. That's why it's the developer's role to make as few connections as possible, with as few round trips as possible and the right priorities, to optimize the user experience. To test yours, follow this tutorial from Apple.

On the app side, whether we are using Combine, async/await, or any other way of handling asynchronous code, we are probably using URLSession under the hood to open TCP connections to our services. If that’s the case, good news: we can optimize it! To do so, instead of using URLSession.shared, we should create our own URLSession with a custom configuration.

But first, let's explore the basic anatomy of a TCP connection to understand where we can improve. A TCP connection is a link between two devices, in our case the client and the server. It carries data for an application protocol such as HTTP, usually secured with TLS (the successor of SSL). Depending on the configuration on both sides, the connection can be kept open or closed right after the response. Setting one up involves the following steps:

1. When the client calls an endpoint (let's say https://getyourguide.com), its host name needs to be resolved into an IP address. This is called a DNS lookup.

2. Then the TCP connection needs to be established between the client and the server.

3. Next, the TLS handshake takes place, where certificates are exchanged and keys are negotiated to keep the established connection secure.

4. After that, data can start to flow in the link.

So now we can go back to creating our own URLSession with a custom configuration:
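A minimal sketch of that idea, assuming the default configuration is a reasonable starting point. The factory name `makeAPISession` and the 30-second timeout are illustrative choices, not values from our app; the individual settings are discussed below.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking // URLSession lives here on Linux
#endif

/// Hypothetical factory: builds a session from its own configuration
/// instead of relying on URLSession.shared.
func makeAPISession() -> URLSession {
    // `.default` returns a fresh, mutable configuration object
    // (disk-backed cache, cookie and credential storage).
    let configuration = URLSessionConfiguration.default
    // Illustrative value; tune it for your own services.
    configuration.timeoutIntervalForRequest = 30
    // The session copies the configuration when it is created, so later
    // changes to `configuration` would not affect it.
    return URLSession(configuration: configuration)
}

// Create it once and keep it around instead of creating one per request.
let apiSession = makeAPISession()
```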

Be mindful of how many URLSession objects we handle: they are fairly expensive to create, and several benefits are lost when requests don't share the same instance. More on that below.

In the configuration we can modify the following settings:

Number of simultaneous connections/streams:

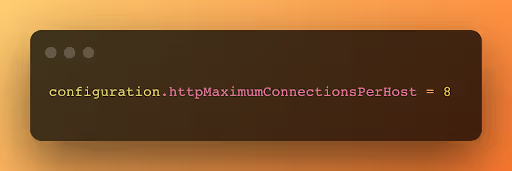

If we are using HTTP/1.1 to connect to the services and need to parallelize network requests with the same host (scheme://host:port/path), we can increase the default number, which is 6 for iOS, by doing:
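A sketch of that change through the `httpMaximumConnectionsPerHost` property of `URLSessionConfiguration`; the value 10 is an arbitrary example rather than a recommendation.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking
#endif

let configuration = URLSessionConfiguration.default
// Mainly relevant for HTTP/1.1: allow up to 10 parallel TCP connections
// to the same host instead of the default. 10 is an illustrative value.
configuration.httpMaximumConnectionsPerHost = 10
let session = URLSession(configuration: configuration)
```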

With HTTP/2, the limit works differently because of stream multiplexing: a single TCP connection is shared by multiple concurrent streams. The default value on iOS is 100 streams, and services normally allow more; even if one allowed fewer, we wouldn't be anywhere close to the limit (I hope). In our case, we simply made sure that all our services use HTTP/2 and that the number of simultaneous connections/streams they accept supports our use cases.

Connection Reusability:

Here, again, HTTP/1.1 differs from HTTP/2. HTTP/1.1 opens a new TCP connection for every request, so no connection is reused, meaning the DNS lookup, the TCP connection, and the TLS handshake all need to be redone. HTTP/2, on the other hand, reuses the same TCP connection, so these steps don't have to be repeated. Whether requests are made serially or in parallel, redoing these steps increases the final latency perceived by the user, affecting their experience.

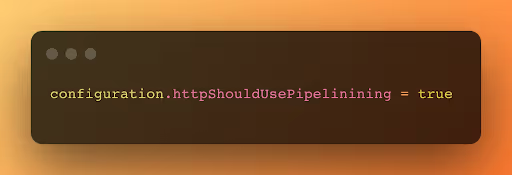

To optimize it for HTTP/1.1 we could enable pipelining by setting:
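A sketch using the `httpShouldUsePipelining` flag, which exists both on `URLSessionConfiguration` and on individual `URLRequest`s. The URL is a placeholder, and pipelining only pays off if the server supports it, as noted next.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking
#endif

let configuration = URLSessionConfiguration.default
// Let HTTP/1.1 requests be pipelined over an already open connection
// (disabled by default).
configuration.httpShouldUsePipelining = true
let session = URLSession(configuration: configuration)

// The same switch can also be set per request (hypothetical endpoint).
var request = URLRequest(url: URL(string: "https://example.com/activities")!)
request.httpShouldUsePipelining = true
```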

The services also need to support it by keeping connections alive (the Keep-Alive header in their responses).

This lets serial or parallel requests be pipelined over the same connection, reusing the previous DNS lookup and TLS handshake, with responses returned in FIFO order. While this improves performance by decreasing the final latency, it can cause head-of-line blocking, which we won't cover here.

Since HTTP/2 reuses the same TCP connection, the DNS lookup and TLS handshake savings come automatically, but only when the same URLSession instance is reused: each instance holds its own pool of connections and shares nothing with other instances. That's why it's important to reuse the same instance when connecting to the same host; otherwise, a new TCP connection must be established.

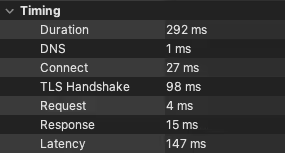

Using any proxy tool, we can check the total connection time and break it down to see how long each step took.

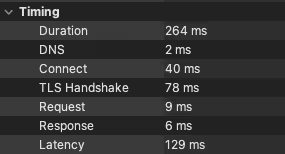

This is what happens when we fire the same request twice, one after the other, using different URLSession instances:

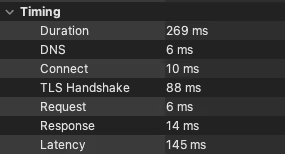

And this is what happens when we do the same using the same URLSession instance:

As we can see, reusing the same URLSession instance, and therefore the open TCP connection, gives us a shorter total time (Duration) by removing the latency of the DNS lookup, the TCP connection setup, and the TLS handshake. Note that the other values fluctuate due to the environment and the stability of each connection over time.

On our side, we expose shared URLSession instances that are reused for each service, so all calls to that service benefit from these optimizations.
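One way to structure this, sketched under assumptions: the `Service` enum and `SessionProvider` type are hypothetical names, and a real app would likely give each service its own tuned configuration. The point is simply that every caller asking for the same service gets the same `URLSession`, and therefore the same connection pool.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking
#endif

/// Hypothetical list of backend services; replace with your own.
enum Service: Hashable {
    case search
    case tooling
}

/// Hands out one long-lived URLSession per service. Requests to the same
/// service share that session's connection pool, so an open connection
/// (and the DNS lookup and TLS handshake behind it) can be reused.
final class SessionProvider: @unchecked Sendable { // state guarded by `lock`
    static let shared = SessionProvider()

    private var sessions: [Service: URLSession] = [:]
    private let lock = NSLock()

    private init() {}

    func session(for service: Service) -> URLSession {
        lock.lock()
        defer { lock.unlock() }
        if let existing = sessions[service] {
            return existing
        }
        // Each service could get its own configuration here.
        let session = URLSession(configuration: .default)
        sessions[service] = session
        return session
    }
}

let searchA = SessionProvider.shared.session(for: .search)
let searchB = SessionProvider.shared.session(for: .search)
// searchA and searchB are the same instance; .tooling gets a different one.
```

Keeping one session per service, rather than one global session, also leaves room to configure each service differently, which becomes useful for the service types discussed later.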

Transport Layer Security (TLS):

When using HTTP, TLS is one of the protocols that can secure the data transported between services and clients over TCP, and how long the connection takes to set up depends on the handshake of the TLS version in use.

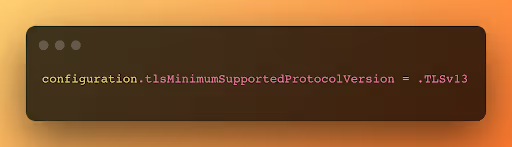

With TLS 1.2, the handshake process takes 4 round trips to the first byte for new connections and 3 for reused ones. TLS 1.3 reduces this to 3 for both new and reused connections, and to 2 for reused connections if the service supports TLS 1.3 0-RTT. (It could go down to 1-2 with TLS 1.3 plus TCP Fast Open, or with HTTP/3, but this requires support from the services and a different approach to networking in the app, such as using the Network framework.) The good news is that TLS 1.3 is enabled by default in URLSession, so it's only a matter of our services supporting it (otherwise, the connection can automatically fall back to TLS 1.2, for example).
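To put those round-trip counts in perspective, here is a back-of-the-envelope calculation. It assumes each count covers the TCP handshake, the TLS handshake, and the request itself, and uses a hypothetical 100 ms round-trip time; DNS, server processing, and bandwidth are ignored.

```swift
/// TLS setups discussed above, with their round trips to the first byte
/// (assumed to include TCP handshake + TLS handshake + the request).
enum HandshakeSetup: CaseIterable {
    case tls12New, tls12Reused, tls13New, tls13Reused, tls13ZeroRTT

    var roundTrips: Int {
        switch self {
        case .tls12New: return 4
        case .tls12Reused, .tls13New, .tls13Reused: return 3
        case .tls13ZeroRTT: return 2
        }
    }
}

/// Rough time to first byte: round trips x round-trip time.
/// Ignores DNS, server processing time, and bandwidth.
func timeToFirstByte(_ setup: HandshakeSetup, rttMilliseconds: Int) -> Int {
    setup.roundTrips * rttMilliseconds
}

// Hypothetical 100 ms round-trip time.
let rtt = 100
for setup in HandshakeSetup.allCases {
    print(setup, timeToFirstByte(setup, rttMilliseconds: rtt), "ms")
}
```

Under these assumptions, going from a new TLS 1.2 connection (400 ms) to a reused TLS 1.3 0-RTT connection (200 ms) saves 200 ms before the first byte arrives, twice the 100 ms budget mentioned at the start of this series.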

Check which types of connection your services accept and configure the app according to your use case. In our case, we made sure we connect through TLS 1.3 and that our services support 0-RTT. We can also easily set a minimum supported version if preferred (or to quickly test whether a service is ready for it).
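A sketch of enforcing a minimum version through `tlsMinimumSupportedProtocolVersion`, which is available on Apple platforms from iOS 13 and macOS 10.15. Requiring TLS 1.3 here is an example for testing readiness, not necessarily what you want in production.

```swift
import Foundation
import Security // declares tls_protocol_version_t (Apple platforms only)

let configuration = URLSessionConfiguration.default
// Refuse anything older than TLS 1.3. Requests to a service that cannot
// negotiate 1.3 fail instead of falling back to TLS 1.2, which makes it
// a quick readiness check for the backend.
configuration.tlsMinimumSupportedProtocolVersion = .TLSv13
let session = URLSession(configuration: configuration)
```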

Here it is important to note that the handshake optimization for reused connections is only possible if using the same URLSession instance, as mentioned in the previous point.

Caching:

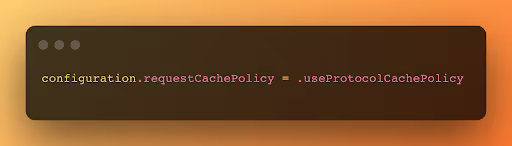

One of the easiest ways to improve the app's responsiveness is to cache network responses locally: we can reuse a stored response instead of asking the service for a new one that may well be identical, which would add unnecessary latency for our users. We could build a custom cache for the app, defining which requests to cache and for how long, but luckily URLSessionConfiguration already provides a built-in system for this.

The available options are exposed through the requestCachePolicy property, which defaults to useProtocolCachePolicy.

With this policy, caching follows what each request/response exchange defines: every response sent by the service can specify whether it should be cached and, if so, how long it stays valid before expiring. To do so, URLSession relies on the response's HTTP headers, specifically Cache-Control, which states if and how the response may be cached.
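A sketch of a cache-aware session, assuming `useProtocolCachePolicy` (the default) and a dedicated `URLCache`; the capacities are illustrative, and the headers in the final comment are examples of what a service might send.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking
#endif

let configuration = URLSessionConfiguration.default
// Follow the server's caching headers. This is already the default,
// spelled out here for clarity.
configuration.requestCachePolicy = .useProtocolCachePolicy
// A dedicated cache for this session; the capacities are illustrative.
configuration.urlCache = URLCache(memoryCapacity: 10 * 1024 * 1024, // 10 MB
                                  diskCapacity: 50 * 1024 * 1024,   // 50 MB
                                  directory: nil)                   // default location
let session = URLSession(configuration: configuration)

// With this setup, a response carrying `Cache-Control: max-age=300` can be
// served from the cache for up to five minutes, while a response carrying
// `Cache-Control: no-store` is not cached at all.
```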

The Search Results screen relies on highly dynamic data, so we chose not to cache any of its responses. However, we can use caching on other screens when we are sure the calls are idempotent and their content doesn't change within a given period.

Note that we can cache responses manually regardless of what the service indicates, but this could lead to invalid states in the app or make different clients behave differently. For this reason, I strongly recommend exploring the available options and agreeing with your services on which responses would benefit from caching, while keeping that decision controlled by the services. This will ultimately improve the user experience.

Multipath TCP (MPTCP):

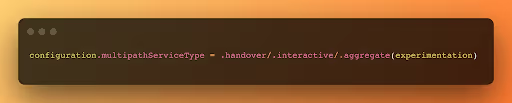

We are not currently using this feature, since our services don't support it yet, but it is nevertheless worth mentioning. When an app keeps a connection open with its services, it depends on the connection's stability to keep it open with the same quality throughout its lifecycle. But when the connection becomes unstable or flaky, or drops completely, we may need to reopen it, or even open a new one over another interface (e.g., switching from Wi-Fi to LTE). This process consumes resources and increases latency, affecting the user experience, but it can be mitigated with a feature called Multipath TCP.

The feature uses all the interfaces the device provides, primary and secondary, to try to maintain the best connection possible. It can seamlessly switch between interfaces, or combine them, without interrupting the connection currently open with the service, meaning it can move from Wi-Fi to 5G, for example, without interruptions or any impact on the user experience. It’s important to note that this feature needs server-side support, so the services we consume must support it too.

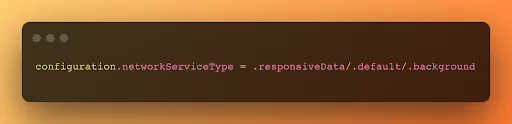

Apple makes it really easy to opt in by setting the multipathServiceType of the session configuration:
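A sketch of opting in, assuming an iOS target and choosing `.handover` as an example mode; the `#if os(iOS)` guard keeps the sketch compiling on other platforms.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking
#endif

let configuration = URLSessionConfiguration.default
#if os(iOS)
// .handover keeps the connection alive while moving it between Wi-Fi and
// cellular; .interactive instead favors the lowest-latency interface.
// Requires the Multipath entitlement and server-side MPTCP support.
configuration.multipathServiceType = .handover
#endif
let session = URLSession(configuration: configuration)
```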

We also need to make sure the Multipath entitlement is set correctly.

Service Type:

All network calls made by the app go into a shared queue handled by the system in roughly FIFO order. I say roughly because several calls can run at the same time, and a later call can finish before an earlier one without holding up the queue. We could build mechanisms to manually control every call the app makes, including how many calls run at the same time or how they are ordered. However, controlling this globally can become very complex and can severely hurt the app's performance if calls are prioritized wrongly. That's why the system lets us give a hint on how to prioritize specific calls, so the queue is reordered by priority automatically: the network service type.

For the Search Results screen, we have calls to fetch data, but also tooling calls, and they compete equally for resources since they all have the default priority. As mentioned before, tooling shouldn't affect the user experience and must run transparently, which is why user-facing calls should have higher priority.

Here there are two options:

1. Per session:

When creating the URLSession used to call your services, define the network service type for each. That means user-facing/time-sensitive services should have higher priority; user-facing, but not time-sensitive, can still have the default priority; and tooling services can have a lower priority.

2. Per request:

If we prefer to have a single URLSession, or are using the shared instance for example, we could define the network service type per request, applying the same logic as above.
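A sketch of both options. Mapping "time-sensitive" to `.responsiveData` and "tooling" to `.background` is one possible choice among the `URLRequest.NetworkServiceType` values, not necessarily the values we used, and the URL is a placeholder. Note that the `.background` service type only lowers priority; it is unrelated to background sessions created with `URLSessionConfiguration.background(withIdentifier:)`.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking
#endif

// Option 1, per session: a session for user-facing, time-sensitive calls...
let searchConfiguration = URLSessionConfiguration.default
searchConfiguration.networkServiceType = .responsiveData // user is waiting
let searchSession = URLSession(configuration: searchConfiguration)

// ...and a separate, lower-priority session for tooling calls.
let toolingConfiguration = URLSessionConfiguration.default
toolingConfiguration.networkServiceType = .background
let toolingSession = URLSession(configuration: toolingConfiguration)

// Option 2, per request: useful when everything goes through one session.
// Hypothetical tooling endpoint.
var request = URLRequest(url: URL(string: "https://example.com/events")!)
request.networkServiceType = .background
```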

In our case, since we have a different shared session for each service, we applied the first approach. This change reduced latency for time-sensitive services by around 10%, which is amazing, while increasing tooling latency by more than 30% in our internal tests, which didn't affect the project or the business.

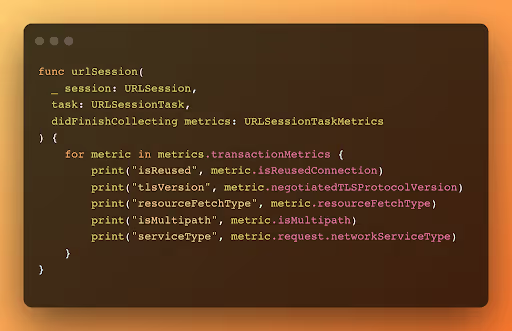

Connection reusability, TLS, caching, MPTCP, and service type can all be validated with the URLSession delegate method urlSession(_:task:didFinishCollecting:):
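A sketch of such a delegate, assuming we only want to print the values; `MetricsLogger` and `stepDuration` are hypothetical names, and the code targets Apple platforms. The properties read here (`isReusedConnection`, `negotiatedTLSProtocolVersion`, `resourceFetchType`, `isMultipath`, and the request's `networkServiceType`) correspond to the five checks listed above. Note that the connect interval reported by URLSession includes the TLS handshake.

```swift
import Foundation
import Security // for tls_protocol_version_t (Apple platforms only)

/// Seconds between two optional timestamps; nil when a step did not happen
/// (for example, no DNS lookup on a reused connection).
func stepDuration(from start: Date?, to end: Date?) -> TimeInterval? {
    guard let start = start, let end = end else { return nil }
    return end.timeIntervalSince(start)
}

/// Prints what URLSession reports for each finished task.
final class MetricsLogger: NSObject, URLSessionTaskDelegate {
    func urlSession(_ session: URLSession,
                    task: URLSessionTask,
                    didFinishCollecting metrics: URLSessionTaskMetrics) {
        print("Task took \(metrics.taskInterval.duration) s")
        for transaction in metrics.transactionMetrics {
            let dns = stepDuration(from: transaction.domainLookupStartDate,
                                   to: transaction.domainLookupEndDate)
            let connect = stepDuration(from: transaction.connectStartDate,
                                       to: transaction.connectEndDate)
            let tls = stepDuration(from: transaction.secureConnectionStartDate,
                                   to: transaction.secureConnectionEndDate)
            print("""
                protocol: \(transaction.networkProtocolName ?? "n/a")
                reused connection: \(transaction.isReusedConnection)
                from local cache: \(transaction.resourceFetchType == .localCache)
                service type: \(transaction.request.networkServiceType.rawValue)
                DNS: \(dns.map { "\($0) s" } ?? "skipped")
                connect (TCP + TLS): \(connect.map { "\($0) s" } ?? "skipped")
                TLS handshake: \(tls.map { "\($0) s" } ?? "skipped")
                """)
            if #available(iOS 13.0, macOS 10.15, *) {
                print("TLS 1.3: \(transaction.negotiatedTLSProtocolVersion == .TLSv13)",
                      "multipath: \(transaction.isMultipath)")
            }
        }
    }
}

// The session keeps a strong reference to its delegate.
let session = URLSession(configuration: .default,
                         delegate: MetricsLogger(),
                         delegateQueue: nil)
```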

We can use it to monitor and validate the behavior we implemented and to spot further improvements if needed. The metrics also provide performance and quality data for each task, which is worth monitoring.

As this discussion of networking shows, a few changes in the app code, along with adjusting how we manage the connection between client and service, can drastically decrease networking latency and, consequently, improve the user experience.

It's important to note that we mostly covered HTTP/1.1 and HTTP/2, but the internet, and by extension the way clients and services communicate, is quickly moving towards HTTP/3 and QUIC. This new protocol version will probably carry the majority of connections in the next few years, which can improve the user experience even further. So, while we improve the status quo, it's wise to start looking into it.

Conclusion and future work:

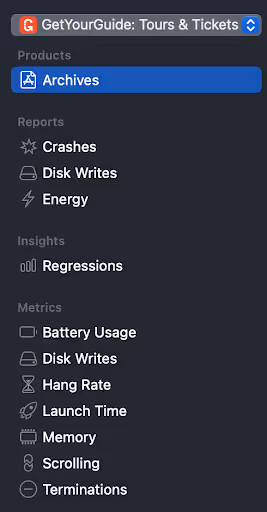

All the work shown in this series contributed to creating a more fluid and seamless user experience, not only on the Search Results screen but across the whole GetYourGuide app. Now we need to make sure these improvements don't regress. That's why we have metrics and monitors in place to follow the improvements through tools like Crashlytics and Datadog. We can also use the data Apple already provides through the Xcode Organizer, where we can check, for example, the Hang Rate, Memory, and Scrolling metrics, all of which improved with these changes.

For a more detailed and professional approach, we are starting new work to monitor other important metrics, such as Time To Interactive. After all, as mentioned in the first installment of this series, it's essential to keep latency deltas below 100 ms. This work will be followed by more client-side improvements, which we may explore in a future post.
