Scaling the Inventory Parts of our System Using Kafka Streams

Comprising millions of ‘tickets’ for attractions all over the world, inventory services are at the core of GetYourGuide’s business.

Key takeaways:

Sladjana Pajic, Engineering Manager in the Inventory team describes how the downtime around Covid-19 was an opportunity to scale up the inventory platform. Here’s how introducing Kafka Streams technology changed our architecture and leveled up platforms and teams.

Comprising millions of ‘tickets’ for attractions all over the world, inventory services are at the core of GetYourGuide’s business. After a record-breaking season revealed serious gaps in what had become an overloaded and outdated system the team started to look to alternative approaches incorporating new technologies. Shortly after, the onset of the Covid-19 pandemic had the immediate effect of stopping travel, bringing GetYourGuide’s bookings to a halt. The Inventory team used this time to scale up the platform, and update one of our oldest and most complex architectures to prepare for the big travel rebound.

{{divider}}

The Challenge: System Constraints

At the end of 2019, GetYourGuide was hitting record after record. The uptick in reservations led to the current inventory technical system starting to show its limitations. These included:

- Potential system failure due to huge growth and a load of inventory data tables in the main database;

- Poor performance of inventory endpoints preventing teams from innovating and getting maximum availability and pricing information;

- Booking errors due to outdated availability and pricing data;

- Missing capability for monitoring availability and price changes per every ‘ticket.’

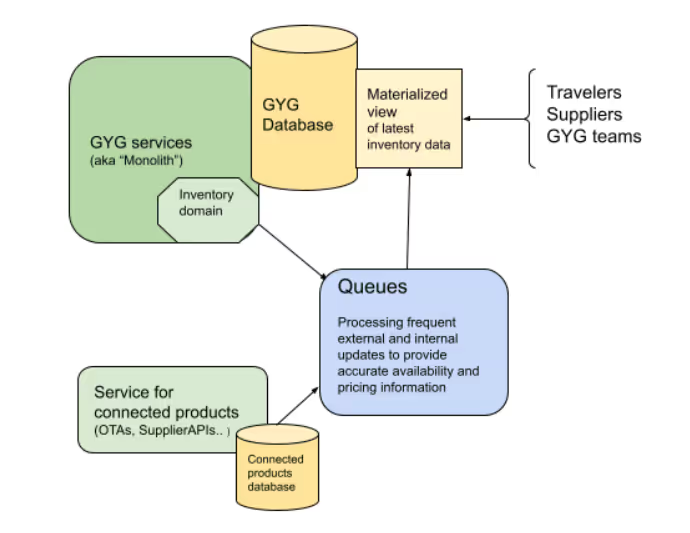

As a first step, the Inventory team analyzed the existing technical system and identified the main architecture bottlenecks:

- Having inventory services and its database as a part of the big monolith was preventing us from scaling just the inventory part of the system.

- The latest inventory data is constantly calculated and written in a single database table with hundreds of millions of records.

- The same table is also used as the main source for inventory data for all the teams and systems at GetYourGuide.

- Regular updates take hours because data is coming from multiple databases and there is a significant calculation time needed to get the final data format.

The team discussed many ideas including:

- Introducing a new microservice with an inventory domain;

- Applying CQRS (Command Query Responsibility Segregation) to separate read and write operations;

- Replacing databases’ tables with Kafka topics and streaming/transforming data.

But what would be the most efficient way to achieve the desired scale?

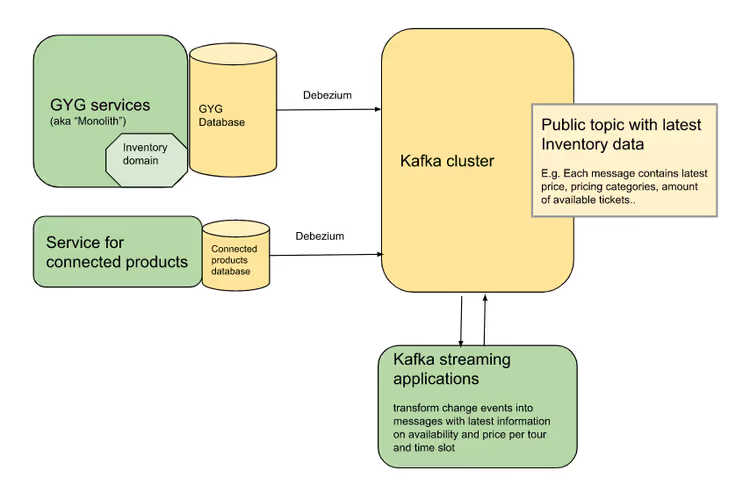

In the first phase, the idea was to focus on minimizing read operations on the ‘materialized view’ table. As the team was already familiar with Debezium and Kafka, the decision was to source both database tables into Kafka, and use Kafka Streams to transform those topics into output topics similar to the initial ‘view table.’ This topic would become a new source of truth and a single point where all the teams would get ‘read-only’ data. For write transactions, all teams would still continue to update the view table.

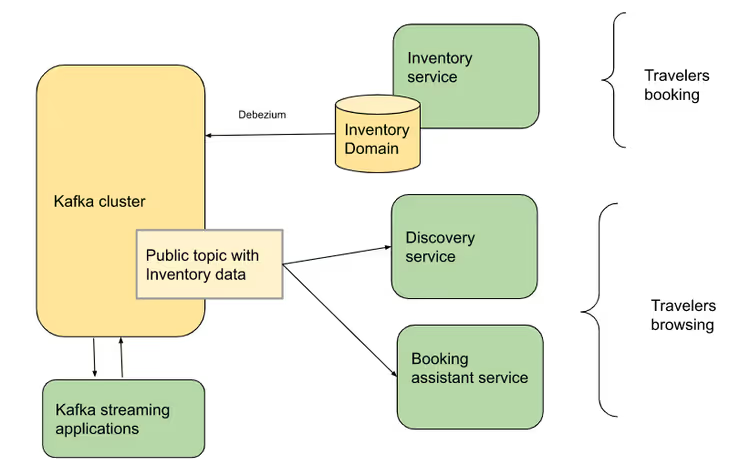

As a second phase, inventory domain data would be migrated into a new microservice with its own database. At GetYourGuide we have a proven five-step method for those migrations using Debezium change stream and Kafka (Read here more).

Evolving Architecture Step 1: Build the Stream of Inventory Data

A simplified visualization of the system, highlighting core components.

Choosing a solution with Kafka Streams meant that the team would work with a technology that was new to them, and also relatively new to GetYourGuide.

One of the very first learnings was that there would be a very steep learning curve — some engineers loved it, and some hated it because of its complexity.

For example: when using a company-established tech stack, teams already have set up environments and tools to build and ship with ease. Also, they have a good community of technology experts and collected ‘best practices.’

Introducing Kafka Streams as a new stack required the team to start from scratch, and count on its experiences with similar technologies, and ability to learn on the fly.

The main challenge in this phase was how to skill up the team. In normal circumstances, the easiest and fastest way would be to hire external experts who can help to lead the way and teach the team how to work with this new tech stack.

However, since GetYourGuide was heavily impacted by the Covid-19 pandemic and hiring was frozen, the main strategy was instead to invest time in people's growth, and place trust in their learning capabilities. One of my favorite pieces of feedback from the lead engineer on the project was this: “Not having external help made me learn so much, and I felt like GetYourGuide trusted in me.”

Lots of learnings came out of this period:

Use frameworks dedicated to streaming applications

The team evaluated a few Java frameworks for Kafka Streams. Azkarra Framework offered much better tooling compared to, for example, Springboot. Azkarra offers UI support for visualization of topologies and troubleshooting (e.g,. querying its data directly in state stores). Also, it provides a wider range of built-in metrics for excellent monitoring of streaming applications.

When unsure, rely on proof of concept (POC)

When you are uncertain about performance/scale/approach on how to implement a feature, remember to ‘divide and conquer.’ Meaning, instead of building the full feature it is better to break it up into unknowns and focus on building the most complex or risky parts first, and then evaluate the approach.

Over time we always used this approach to choose the best solution from a pool of ideas. Those that were successful were used to build the full feature, and those that got rejected helped us save time and re-evaluate other ideas.

Don't be scared to change the approach and start over

In the initial implementation, the team used a low-level Kafka Streams API called ProcessorAPI which is very powerful. After several months, the team realized that the solution was too complicated and wanted to re-evaluate whether a high-level Kafka DSL API would be a better choice. Here’s how they reached their conclusion:

- The team wrote tests showing that the current implementation with ProcessorAPI is incomplete and incorrect.

- The team built POC with Kafka DSL to compare the same transformation with high-level API and realized Kafka DSL was easier to implement and test.

- Since it is possible to combine those APIs, the team decided to rewrite the entire project into Kafka DSL and reintroduce Processor API only in cases where it is truly needed.

As a result of that change, the team managed to deliver the stream of Inventory data in a few months.

Make both team and system ‘Production-ready’

At GetYourGuide, all teams are focused on operational excellence and invest a lot to ensure that each team has enough tools and skills to support complex systems in production. For a project of this complexity, it took several months to go live. Specific challenges included:

- Monitoring of streaming apps is more complex than the monitoring of API endpoint;

- Building the tools for troubleshooting, as well as disaster recovery and building the knowledge in the team to be able to handle production issues;

- Defining a rollout strategy, e.g., recommended way is topics versioning vs full/partial reprocessing of the data in the same output topic.

Evolving Architecture Step 2: Adoption and Migration to Event-Driven Microservices Landscape

After the team delivered a new ‘Stream of data,’ everybody felt very proud that the new solution was much faster than the old one, and more accurate. At the same time, that also made a comparison between the systems pretty challenging — because the systems are significantly different at every point in time and the absolute truth is out there in the external systems.

To foster adoption, the team worked closely with consumers to give them the confidence to migrate to a new platform. Using new technology made a lot of teams hesitant to move.

The following steps were key drivers to adoption success:

- Providing full transparency as to why systems seem so different, and introducing an automated way to compare the old and new systems' output.

- Together with consuming teams, we defined SLA and it was a huge help in prioritizing bugs/improvements/investigations.

- Dedicating team members to work closely with consuming teams to support integration and quickly remove any issues.

- ‘Shift and lift’ principle: this helped the team to protect the scope of the project and avoid the trap of trying to fix ‘long-known’ bugs of old systems.

- Readjusting initial migration strategy: Initially, the plan was to migrate all teams to read from the new system and then to write into the new system. But to foster adoption, we decided to focus only on four teams critical to the user journey, rather than the all teams.

This change enabled the Inventory team to complete the new platform faster and start shipping features with the new platform.

For example, the recent release of the big Price Over API project was enabled and delivered by this platform. The feature allows suppliers to set their pricing in their system and share it with us, saving considerable time. For more, check out LinkedIn.

Evolving Architecture: How Successful Was It?

- Travelers APIs improved 50X. In practice, that meant, the slowest APIs went down from 5 seconds to 100 milliseconds.

- External availability and price updates in nearly real-time. Now, processing takes 20 seconds compared with a few hours in the past.

- Scale to the moon: At its peak, the platform processed a million messages per second. The new platform was used during the recent peak seasons (Easter and summer) and scored GetYourGuide’s all-time booking record.

What’s more, all of this was achieved using just 25% of the system’s current capacity. Furthermore, using Kafka Streams enables us to scale up by four in just one hour by simply adding more processors. If needed, within 24 hours we could also increase the number of partitions and scale it 10 times. Impressive!

Key Learnings and Takeaways

- Efficient Innovation

Introducing new technology (like Kafka Streams) required a longer discovery phase for the team to experiment and learn new concepts. So, in similar projects you should plan for:

- Trying out various POCs and sharing knowledge within the team. For example, we found that pairing and mob sessions helped combine domain and technology experts.

- Getting the right tooling is critical to team productivity, development satisfaction, and even the adoption of other teams. That could be debugging topic content with databricks’s notebook, or synchronizing the topic into the databricks.

- Collecting metrics from the start to have unbiased evaluation if the project is still on a good path. This is especially important in long-running projects because there might be doubts or even ‘sink-costs’ fear.

2. Executing

New technologies and concepts can lead to some surprises and unexpected behaviors.. To minimize those delayed ‘aha moments,‘ it is best to invest in end-to-end testing and cross-team collaboration (e.g., inserting a subject matter expert into integrating teams boosted productivity all round).

3. Outcomes

You won’t make it perfect on the first try — but keep moving, ship, collect metrics, and improve. Every new version of streams brought huge improvements that kept us going:

- V2 vs V1: The first version of streams took 48 hours to process all the data. But in the next version, it was 6 hours.

- V6 vs V5: Version 6 produced on average 70% fewer messages, and peaks were reduced by 90%.

- V6 vs V10: The whole Inventory team is super excited to see what version 10 will bring.

Stay tuned!

.JPG)

.JPG)