Understanding Data Products and their 4 Levels of Ownership

Data products have become an essential part of today's product landscape. The rise of the internet has shifted the focus from physical products to digital ones—the exponentially growing usage of internet enabled companies to build data products. According to Data Jujitsu author, DJ Patel, a data product is a product that facilitates an end goal through the use of data.

Key takeaways:

For anyone who’s struggling with the velocity of data product development, Dr. Alexander Weiss, engineering manager, offers a helpful guide to clarifying ownership. Alex has worked on data products in all domains of GetYourGuide. Currently, he and his team are in charge of the recommender systems and relevance ranking solutions from both a product and an engineering perspective.

{{divider}}

Understanding the hybrid nature of data products

Data products have become an essential part of today's product landscape. The rise of the internet has shifted the focus from physical products to digital ones—the exponentially growing usage of internet enabled companies to build data products. According to Data Jujitsu author, DJ Patel, a data product is a product that facilitates an end goal through the use of data.

From an engineering point of view, a data product can be anything from a simple dashboard that visualizes pre-cleaned data to a complex algorithm contained in a micro-service that consumes data from multiple sources and needs to serve requests with consistently low latency.

Recommender engines or dynamic pricing modules are standard examples for the latter, more engineering-heavy data products. They exhibit a hybrid nature. At their core, they belong to the data science domain: A customer problem must be turned into a data problem that is solved by building and optimizing an algorithm with respect to a target metric.

You might also be interested in: 15 data science principles we live by

This algorithm is often a machine learning (ML) model. The trained model becomes the heart and brain of a standard software engineering component. In modern software architecture, this component is frequently a microservice or at least an independent container.

Building a service comes with its own challenges:

- defining the interfaces

- fighting latency

- creating backups in the case of crashes

- dealing with the shortcomings of other services or streams that are required as input for the ML model

This work can even involve setting up additional infrastructure to collect more data (e.g., real-time counters of events). In most cases, these services have the same resiliency and latency requirements as other more traditional services.

The ownership challenge

Building a data product requires expertise in both data science (DS) and backend engineering (BE). Besides a few unicorns out there, the majority of people working in this area have a deep expertise in one domain and gained moderate knowledge in the other one. Products requiring expertise from different domains is a common challenge for today's companies.

One way to tackle this challenge is by organizing the product teams in a cross-functional manner so that all skills for owning a product end-to-end are involved. It would be quite strange to separate backend engineers or designers from frontend engineers working on the same product.

Surprisingly, data scientists are often a less obvious ingredient to the mixture. This might be caused by the blurry definition of the DS role itself. For many non-domain experts, the data scientist lives somewhere between an engineer and an analyst. Consequently, they are sometimes considered a replacement for an engineer or as an optional "on-demand" team member. Along with the unclear role of a data scientist within a cross-functional team comes the unclear ownership of data products.

You might also be interested in: 10 tips on effective communication in business intelligence

Levels of ownership

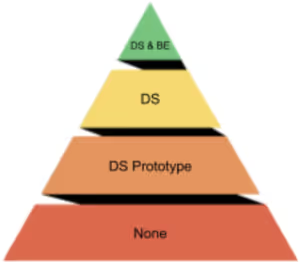

In practice, we've seen four levels of ownership for data products from a DS perspective.

Level 0: The DS consultant - no ownership

The data scientist acts as a consultant for the data product's algorithmic components. Their contribution is generally time-boxed. After they’re done, their ideas are implemented and maintained by the product owning team. This approach can only work if the DS part is simple enough to be understood by non-domain experts (heuristic approaches) and if there are no further iterations on the model needed.

Level 1: Throw it over the fence - owning the DS prototype

The data scientist owns the algorithm underlying the data product. They are free to consume different data sources, test different models, and optimize the logic in any way they see fit. Their work lives in notebooks. Once the data scientist has achieved satisfying results, they hand over their work to an engineering team that rebuilds the data scientist's work in production.. The challenge with this approach is that the production system might be very different from the data scientist's environment.

The programming language (and its libraries) might differ, the way the data is consumed might differ, and the requirements for performance might differ, too.

In addition, the data scientist might not even be aware of these differences. In summary, the implementation of the DS idea in production might deviate significantly from the original idea itself.

Furthermore, this process introduces long improvement cycles as the reimplementation of the prototype is a bottleneck.

Level 2: Algorithm in a box - owning the DS part

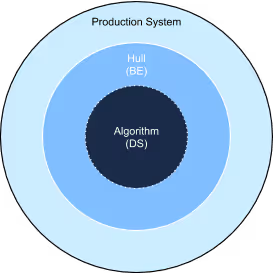

The data scientist has full ownership of the algorithm. Their implementation lives in a container in the production system—the container abstracts all the pure backend engineering work. The data scientist can rely on well-defined input and output interfaces without caring too much about what happens outside of her domain of responsibility.

This approach avoids the reimplementation of code at the cost of abstracting the production system to a digestible embedding for the algorithmic part. This setup's challenges are an increased responsibility for the data scientist to build performant algorithms and a dependency on engineers if the interfaces must be changed. Furthermore, important components of the data product, such as tracking or monitoring, still have unclear ownership.

Level 3: Empowerment - owning the data product (DS & BE)

The data scientist has full ownership of the algorithm and full control over the data product's engineering hull. In theory, this can be accomplished by one unicorn. In practice, it is usually done by having a data scientist and a backend engineer as two full members with a shared set of milestones in one team. In this setup, the data scientist can tune the algorithm, and the engineer can adapt the surrounding backend in any manner.

The one constant we see between the different levels is the split between ownership of the algorithm and ownership of the backend framework. Even in Level 3, we see that they require separate people to own the separate parts of the data product. Thus, the challenge to be solved is not how to unify ownership to one person but to minimize the number of different teams owning different parts of the data product.

Conclusion: Check your ownership, increase velocity

There is a good reason that companies establish teams being capable of owning products end-to-end: As other teams cannot block them, they can move faster. The same also applies to data products. If building and iterating on your data products feels clumsy, you might have a problem with your ownership structure. Sit down and determine for each data product your ownership level.

You should strive for Level 3; Level 2 might be acceptable if the data product is running stable, and further iterations will only affect the DS core and not require changes to the hull or the interfaces in between. It might also be an option to iterate quickly on a data product in a new domain (i.e., the domain owning team wants to build a data product MVP without making a data scientist a full member of the team).

Though, make sure that Level 2 is your choice, and you do not restrict your ideas due to this level's limitations. Level 0 and 1 are unbearable for any data product with any sort of algorithmic complexity. If you find yourself in these levels, make sure that you change your team structure accordingly. You or your data scientist should become a member of the data product owning team.

Act accordingly to your findings, it will simplify your life as a data product developer.

If you are interested in data science, check out our open positions

.JPG)

.JPG)