Laying the Foundation of our Open Source ML Platform with a Modern CI/CD Pipeline

Theodore shares how he and backend engineer Jean Carlo Machado built a modern Continuous Integration / Continuous Deployment (CI/CD) pipeline to use in all our machine learning services. They used Jinja for the templating, pre-commit for the automatic checks, Drone CI for the continuous integration, and Databricks jobs for the training. The data scientist also explains how we manage our models using MLflow and how we can orchestrate the workflow using Apache Airflow.

Key takeaways:

Theodore Meynard is a senior data scientist for the Recommendation and Relevance team. Our recommender system helps customers find the best activities for their needs and complement the ones they’ve already booked.

Theodore shares how he and backend engineer Jean Carlo Machado built a modern Continuous Integration / Continuous Deployment (CI/CD) pipeline to use in all our machine learning services. They used Jinja for the templating, pre-commit for the automatic checks, Drone CI for the continuous integration, and Databricks jobs for the training. The data scientist also explains how we manage our models using MLflow and how we can orchestrate the workflow using Apache Airflow.

To start, I’ll explain how we laid the foundation for our machine learning platform using open-source software to manage our services powered by data. Then, I’ll focus on training and batch interface and cover these points of the project:

- Our infrastructure before the project

- Principles and motivation for the platform

- The design of the solution: laying the foundation of our ML platform

We also presented our work at the Pydata Berlin meetup, a gathering of users and developers of the Python ecosystem in Berlin. Check out the full video here:

Pydata Berlin Meetup February 2021: The Foundation of our Machine Learning Platform at GetYourGuide

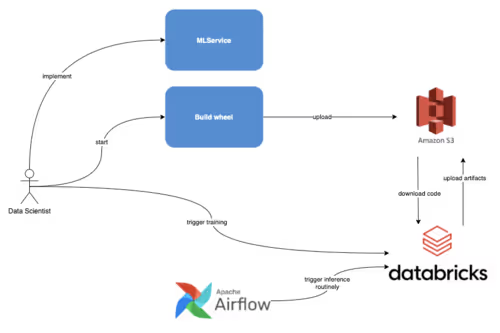

Infrastructure before the project

The Data Products team has come a long way since its roots in 2016. While our models’ complexity and reach grew, our deployment process lagged. The orchestration of the training and inference of our models is done using Apache Airflow. The computation is executed on Databricks, where we used jobs to generate the dataset, train our models and do our batch predictions. However, the code of our models in production was on Github but it needs to be imported to Databricks. Before we built our platform, the best practice was to wrap the code into a Python package, build a wheel, then send it to S3. Finally, we installed the package on the cluster before running the training job and then saved the models or the predictions to S3.

Here is the high-level diagram:

Learn more about how we now manage our ETL pipeline in Databricks in How we built our new modern ETL pipeline part 1 and part 2.

Nonetheless, this workflow did not allow us to have a straightforward link between the code used for training and the output model and predictions. We could not find the right information about the training metrics unless we looked into the logs of the job. It slowed us down each time we wanted to iterate on an existing model. When we wanted to start a new project, we had to copy and adapt this step from other repositories and refactored it for our new needs, which took time and was prone to error.

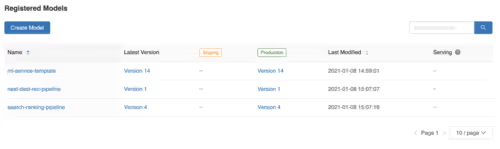

Moreover, we did not have a dashboard showing all the models used in production. As we are always experimenting with new models or iterating, it was hard to keep track of all the models being used at a given time.

Now that I’ve described our previous productionalization workflow and highlighted its limitations, I will now present the principles we based the platform architecture on.

Principles and motivation for the platform

We aspire to reach our customers faster through innovations. Many ideas need to be assessed, and multiple iterations are necessary before we nail it. We wanted to improve our workflow by automatically recording information. A lot of information about a training run is diluted in the logs. Extracting the relevant information and presenting it intelligently helps the documentation and onboarding of new members. Finally, we needed better engineering and experimentation standards.

Learn more about how we approach data science as a company in 15 data science principles we live by.

Even if the use cases and frameworks are very different at GetYourGuide, there are many shared components between the different services using machine learning.

In all our projects, we aim to:

- Follow the best practices of software engineering, such as code reviews, automatic tests, and consistent style.

- Train with a full production dataset. In machine learning, the output model is a combination of code and data. Therefore, we need to train our model in a system where the production data is accessible.

- Reproduce past runs. The exact environment and settings used for the training should be readily available to reproduce the run.

- Save and access the metrics generated during the run and output models.

- Quickly deploy a new model version and roll back in case of an incident.

If you want to learn more about how we structure our teams using data, check out Understanding data products and their four levels of ownership

So there’s the motivation behind the project and the requirements. Now let’s dive into the design of our platform foundation, leveraging open source software and integrating it into our infrastructure.

The design: laying the foundation of our ML platform

The foundation can be explained in two stages:

- Software engineering principles - Gathering all the engineering best practices in a template

- Machine learning specific refinement - Showing how we leveraged MLflow and docker containers to make our run reproducible, easy to understand, and to deploy.

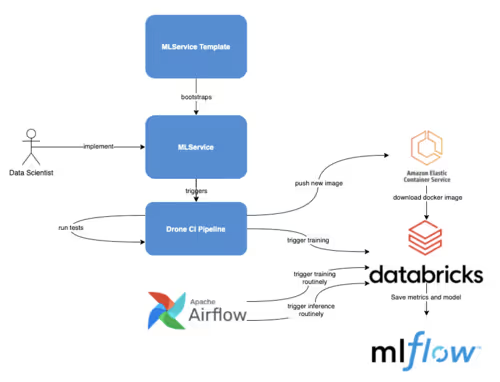

Here is a high-level diagram:

a) Software engineering principles

We created a template with all the best engineering practices for the Python project with the help of our Developer Enablement team.

This bootstrapping step automatically creates the repositories in Github, sets up the testing pipeline in Drone CI, and creates necessary infrastructure in Amazon Web Service.

The template also includes a pre-commit configuration to verify that the code is up to standard before doing a commit by running git hooks checks.

When a commit is pushed to GitHub, the template also sets up a pipeline to ensure that we are not committing credentials by mistake and that the style check, type check, and unit tests run successfully.

All of these are good continuous-integration practices. For traditional software, it's where it can directly be deployed. However, for a machine learning project before deployment, our model still needs to be trained for hours in powerful machines while accessing production data.

b) Machine learning specific refinement

The constraints on the machine's size, computing time, and access to production data to train our model make it impossible to do it in Drone CI. Instead, when we want to start a training run we add a special keyword in the commit message.

In this case, a new Drone step will tie all the dependencies together in a docker image. It gives us great flexibility in the machine learning frameworks while making sure the runs are reproducible. Then, Drone will trigger a job in Databricks to train our model. The Databricks job can use powerful instances, access production data, and run for multiple hours. To manage our models and log the metrics, we use MLflow. Let's run through some of the features we used for our platform:

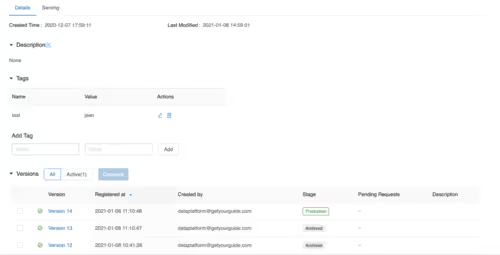

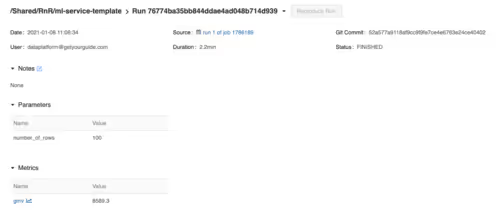

Here we dive into one of the models in production to see different model versions.

We have the metrics and parameters gathered during training for each version, a link to the logs, and the git hash of the code. It also saved the output model and all relevant artifacts generated during the training, which can be downloaded locally for inspection or used for inference.

The newly created docker image and the model logged in MLflow can be used to predict regularly using Airflow. We can also schedule regular re-training of the model with Airflow and predict using the latest model.

Conclusion

By now you’ll have learned how we started to build the foundation for our machine learning platform using open-source tools. Instead of reinventing the wheel each time, we wanted to deploy a new service: we created a template. The template allows us to quickly create a new service with a CI/CD pipeline, making sure we can reproduce our training and record the results in a dashboard. We have a working pipeline that can run production, and we can focus on our core work: Building data products to help people find incredible experiences.

If you are interested in joining our engineering team, check out our open positions.

.JPG)

.JPG)