Machine Learning Best Practices at GetYourGuide

Discover the essential machine learning best practices at GetYourGuide. Learn how we manage and optimize our ML projects using tools like Databricks, Mlflow, and Arize. Explore our innovative scorecard system to ensure quality and efficiency in data science engineering. Perfect for data professionals seeking to enhance their ML workflows.

Key takeaways:

At GetYourGuide, several teams work with machine learning systems to solve a variety of problems. Ranking activities on a landing page, predicting which experiences are likely to sell out, making sure the locations that activities are tagged to are accurate – all of these tasks rely on AI-driven solutions and services dedicated to building and serving machine learning models.

On top of that, we're always experimenting with new ML models and approaches, and so we're frequently introducing new services and new pipelines that need to be managed. With multiple teams building data science products and managing over 50 projects, we face a unique challenge:

How do we ensure high-quality machine learning best practices while maintaining a fast pace of innovation? How does GetYourGuide ensure that these machine learning projects meet the highest standards?

{{divider}}

Best Practices for Machine Learning Development

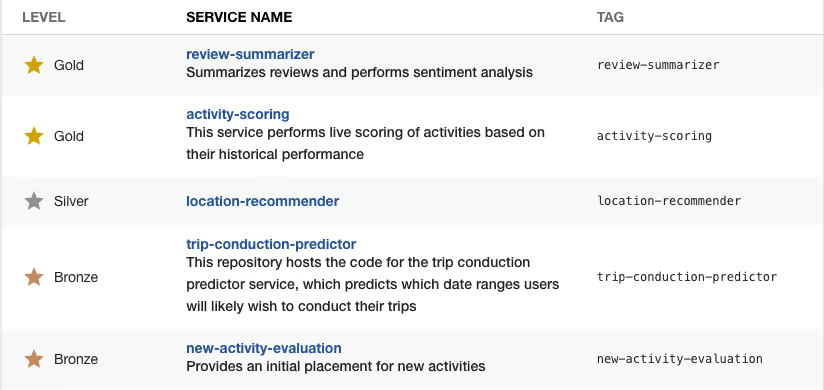

One answer is to establish a set of machine learning best practices for each project, alongside a “scorecard” system to evaluate their adherence to these standards. This approach provides each team with clear insights into whether their projects meet the best practices.

At GetYourGuide, we use a tool called Cortex to enforce these. We give it a configuration file with all the different "rules" we want to judge services on, plus some logic to determine which projects the rules should apply to (since we're focused on ML projects) and it periodically checks those projects against those rules, recording a "pass" or "fail" to each one.

Of course, not all rules are equally important, so some contribute more or less to a project’s rating than others, and whether a given best practice is useful or applicable to a given project can vary. Ultimately, the scorecard is a tool to be used by the project developers, not something to be blindly complied with. While we expect a solid justification for going against the rules we define (especially our most basic ones), we allow for the possibility.

Basic ML Rules

So, what are the best practices we’ve defined so far? The most basic rules concern linting and versioning.

Python Versions

Nearly all of the machine learning projects at GetYourGuide use Python. We ensure that projects adhere to a minimum Python version to avoid using versions that are no longer supported, which could lead to security risks or performance issues. You can look at the python release cycle for more details.

Databricks Runtime

Since we heavily rely on Databricks for machine learning, we enforce the use of supported Databricks runtime versions to ensure stability and performance in the ML pipelines we run – it's through running "jobs" on Databricks that we train our models, build the datasets said models are trained on, etc. Similar to the python version rule, we want to ensure that our projects are using a supported Databricks runtime version, ideally one labeled as “long term support”.

Pre-Commit and Mypy

We also ensure all projects use Pre-commit and Mypy. The former runs through various configurable checks prior to allowing a commit to be made in git. This means performing linting, which detects both syntax and style issues, as well as more basic checks, like ensuring large files aren't committed (since that's likely to be a mistake).

As for mypy, it's a type-checker. Because Python is a dynamically typed language, a conflict between the type that's expected and the one that's received might only result in an error once the code actually runs. However, if we use mypy (and type hints), we can catch these errors much earlier, without having to run the code.

Optional Rules

The remaining rules all involve the use of tooling that, while not strictly necessary, are often very helpful in streamlining the development and maintenance of ML systems at GetYourGuide.

Poetry

We encourage the use of Poetry for package and dependency management. The idea here is that we expect our projects to have some concrete list of their dependencies and the versions of those dependencies when they're being built and deployed for use in production, and poetry is the tool we recommend for achieving this. It may sound like a simple problem, but managing a project's dependencies without a tool can very quickly become intractable. Our dependencies can have their own dependencies, and those dependencies can have their own dependencies, and so on – to completely list out the libraries a project uses, you need to follow that chain all the way to its end, and sort out any conflicting dependencies that pop up along the way. If you skip managing versions and just list the libraries you need, leaving it to the installer to handle the rest, you risk running into hidden errors caused by new versions of packages being automatically used.

Mlflow

We also recommend using MLflow for managing machine learning models. You can deploy models without this tool, but using it along with our shared model registry makes the process easier and more reliable. Features like automatic versioning and the ability to tag models with extra details have been very useful for us.

Arize

Arize, an AI-driven solution for model observability, is another recommended tool. We've previously written about Arize, but to summarize: this tool allows us to detect issues with models in production, such as distribution shifts in features or predictions. Being able to quickly respond to these changes helps ensure that the models we use in production continue to make accurate predictions.

DDataflow

We also use internally-developed tools like DDataflow, which assists with testing PySpark code in ML pipelines. It allows you to sample data sources when ingesting them, so you can test your pipeline with smaller data before releasing changes to production. This is much easier than creating mock data manually. You can use DDataflow offline to download sampled data, or as part of your continuous integration pipeline within your test suite.

Db-rocket

Databricks-Rocket – or db-rocket for short – is another internally developed open source tool that we check for in the scorecard (and we've written about it too, here and here). Its purpose is essentially to bridge the gap between our local development environments and the Databricks notebooks that we often use, allowing us to have the best of both worlds: being able to quickly and easily make changes (and track them with version control) in your normal environment paired with the interactivity and access to live data of a notebook.

Devcontainers

Finally, we recommend using devcontainers to provide consistent development environments for new contributors, which speeds up onboarding and ensures a standardized setup for each project. And because devcontainers integrate well with Github Codespaces, which provides a remotely hosted development environment, getting started on a project can be as straightforward as finding the repository and clicking a couple of buttons. This is especially helpful in cases where you need to make a one-off contribution to some project rather than developing it long-term.

Raising the Bar

At GetYourGuide, we're committed to advancing machine learning (ML) and artificial intelligence (AI)-driven solutions that power our platform. From utilizing machine learning best practices to managing complex ML systems, we’re always pushing the boundaries of innovation. Here’s how we approach it:

The flexibility in our scorecard's rules is an area we're particularly keen on improving. At the moment we use Cortex's Github integrations to check for the existence of certain files and validate their contents. These offer a quick way to write simple rules, like checking a project's python version or whether it uses poetry, but aren't practical for more complicated ones. If we wanted to, say, check whether a project has at least 30 tests defined, that wouldn't be feasible.

To address this, we’re exploring custom REST API integrations that will allow us to send tailored data and build more flexible scorecards. This solution will empower us to write code that checks each project’s specific attributes, such as the number of tests, giving us the flexibility we need without sacrificing control.

We aim to make scorecards easier to integrate into daily workflows. At the moment, teams need to proactively check the scorecard to be aware of their projects' standing. Having automated alerts that notify teams when their projects fail to meet basic criteria would make this less necessary.

This would not only save time but also promote greater accountability. Having our best practices reflected in our service templates – which new projects are built from – helps too.

Looking ahead, we plan to enhance the rigor of our scorecard checks and introduce new ones. For instance, support for Devcontainers may move from a recommendation to a requirement. We plan to make our existing checks more rigorous, and to add several new ones down the road. Just as we continue to refine the way we build data products, the bar for our best practices is sure to gradually rise.

We’re excited about the challenges ahead and are committed to elevating the standard for AI development and machine learning model management at GetYourGuide.

Shoutout

Special thanks to Steven Mi, Mihail Douhaniaris, Cyrill Burgener as well as the wider Data Products group for their collaboration, feedback and support in standardizing our best practices through the scorecard and continuing to raise our standards around machine learning systems.

.JPG)

.jpg)