Transitioning from Apache Thrift to JSON and OpenAPI

At GetYourGuide, we recently made a change in our main serialization framework from Apache Thrift to JSON. Dang shares his team’s experience in that journey, the pitfalls, and what the future holds for the team with the new serialization framework.

Key takeaways:

Dang Nguyen is a data engineer in the Data Infrastructure team. His team’s mission is to provide reliable scheduling, messaging, and data processing platforms for non-interactive workloads. A few technologies in their tool kit include, but aren’t limited to, Kafka, Airflow, and Elasticsearch.

At GetYourGuide, we recently made a change in our main serialization framework from Apache Thrift to JSON. Dang shares his team’s experience in that journey, the pitfalls, and what the future holds for the team with the new serialization framework.

{{divider}}

Our use-case for serialization framework

The serialization framework plays a big part in how our services communicate with each other, which are:

- Synchronously: server-client (or request-response). Clients need to know which endpoints the server exposes, the expected request format, and the respective expected response format.

- Asynchronously: Two services (producer and consumer) communicate by exchanging messages via an intermediate medium such as a message broker. To consume a message, consumers need to know its format, which is determined by the producers.

For services to communicate with each other efficiently, we had been using Apache Thrift IDL to define the “contract.” However, to keep up with the new and growing tech stack, we decide to make the transition to JSON for a couple of advantages:

- It is human-readable, which makes on-the-fly debugging easier.

- It is the industry standard for RESTful API.

- It is supported by and plays well with modern technologies and tooling (e.g., Kafka).

With that, we also adopt OpenAPI and AsyncAPI specifications as the contract language for service communication.

You might also be interested in Inside our recommender system: Data pipeline execution and monitoring.

With new serialization comes new tooling

The new serialization framework requires a whole new set of tooling and support to enable our developers to achieve their best productivity. Given the company’s strong support and belief in open-source principal, we use the existing open-source projects while simultaneously contributing back to our own tooling and improvements.

Specification

As mentioned above, OpenAPI and AsyncAPI specifications define the schemas and the endpoints (sync) or the channels (async) which the services expose and can be talked to.

Registry

Once a service’s specification is defined, it needs to be made available to the public and other services. For that purpose, we have a centralized registry to host all the specifications. We adopt the open-source Apicurio Registry, which is in active development and has fantastic support for various specifications and backends.

CI/CD

The specification publication step is well integrated into our CI/CD pipeline. Every time a service’s new build is deployed, the latest version of that service’s OpenAPI and/or AsyncAPI specifications are also published to the registry. This ensures the contract and the service implementation are always in sync.

Furthermore, we require each new specification to be backward compatible with previous versions. This protects the dependency between services.

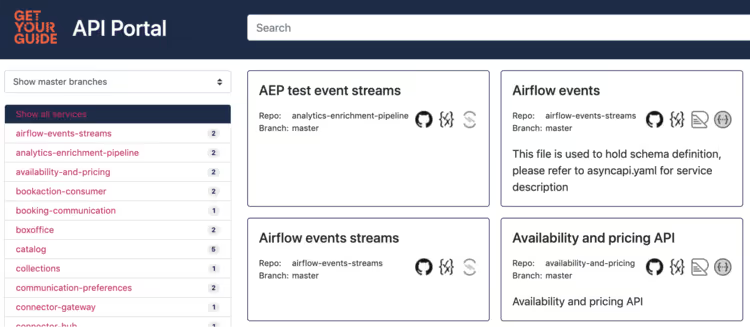

API Portal

API Portal is designed to be a one-stop-shop for developers to discover all available APIs from all services. It uses the registry and allows developers to explore APIs in visualized documentation templates such as ReDoc or Swagger.

Schema Validation

In the use-case of asynchronous communication, it is crucial for the producers to write the correct messages and adhere to the schema they “promise” to consumers. To help with that, we developed a library in-house that intercepts every message going to our broker, validates the messages against their respective schemas, injects special headers into the messages, then fails or passes the messages depending on their validation status. This way, the consumers can trust the messages they read from the broker; they even have an option to re-validate themselves, should they wish to do so.

You might also be interested in How we use Typescript and Apache Thrift to ensure type safety

Development Workflow

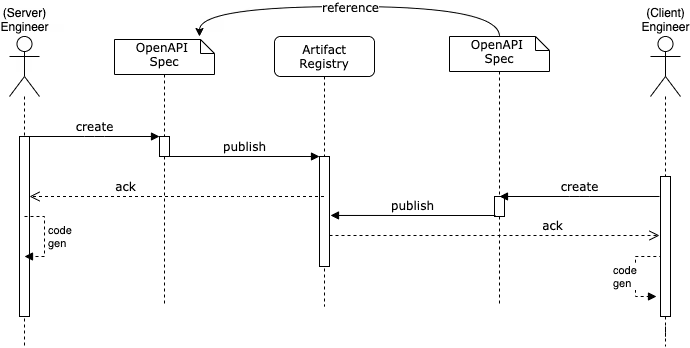

With all the tooling in place, let’s see how developers can use them in practice. Below is a typical flow for services in synchronous communication:

Server:

- Write the OpenAPI spec

- Publish the OpenAPI to the registry

- Use code generation based on the OpenAPI spec

- Implement the application logic based on the generated interfaces

Client:

- Write the OpenAPI spec, including references to the server’s public OpenAPI spec when need to (such as schema)

- Publish the OpenAPI to the registry

- Use code generation based on the OpenAPI spec. Because the referenced schemas are the same between server and client, they are consistent.

- Implement the application logic based on the generated interfaces

The pitfalls of OpenAPI

Inconsistency between open-source tooling

OpenAPI benefits from an active open-source community resulting in a big collection of available tooling. That should help adopters to get started quickly and avoid reinventing the wheel. Unfortunately, these toolings do not provide the same consistency in how they interpret the OpenAPI specification, meaning that for a given rule, tool "A" may allow the rule, whereas tool "B" does not allow it. Therefore, it is essential to test the tooling end-to-end when chaining them together.

Get ready to get your hands dirty

Depending on your requirements, merely adopting the open-source tooling may only show 50% of the story. In our case, we often find ourselves in a position to modify and extend the open-source projects to better fit our needs: in one case, it is due to specific business requirements. In another case, it's due to an old long-running bug that has not been fixed yet.

Provide best-practice early and fast

OpenAPI gives a lot of leeway for how one wants to use it. You can structure the folders in different ways and bundle them together later. You can name your endpoints, your schemas, your headers in whichever way you want. However, as an organization, that approach can easily lead to disaster and create technical debt along the road. Instead, providing a standard best-practice set on the convention, the template, what can be used and cannot be used (hint: ENUM and anyOf are evil) will benefit a long way.

You might also be interested in: How we built our new modern ETL pipeline

Where to go from here

As with any technology shift, there is a migration period between the legacy and the new tool. For us, that is migrating all the Thrift IDL to OpenAPI. Because we cannot find any existing open-source solution, we develop an in-house solution to help us just that.

We have a use-case in which we need to convert our data from JSON directly to parquet. The trick here is JSON being a schemaless format, whereas parquet requires a schema, so we need to bake the schema from the registry into the JSON data to generate parquet data. We open-source the solution here in the hope of helping others with a similar use-case.

Once the migration is complete, we wish to extend the current framework further to enable our developers' productivity. Some ideas are:

- Real-time validation using production traffic

- API mocking

- Contract testing

.JPG)

.JPG)