Building Custom NER Models With SpaCy To Enhance Travel Product Inventory

Marco Venè and Marina Zemskova, Senior Data Scientists on the Growth Data Products Team, are responsible for developing and maintaining various machine learning solutions to accelerate the growth of GetYourGuide. In this article, they discuss how they utilized the spaCy library for Named Entity Recognition to expand the coverage of travel interests across multiple languages.

Key takeaways:

Marco Venè and Marina Zemskova, Senior Data Scientists on the Growth Data Products Team, are responsible for developing and maintaining various machine learning solutions to accelerate the growth of GetYourGuide. In this article, they discuss how they utilized the spaCy library for Named Entity Recognition to expand the coverage of travel interests across multiple languages.

The Growth Data Products Team builds machine learning solutions powering our recommendation services, demand forecasting, optimizing our marketing and customer loyalty efforts, and much more. Our team’s MLOps engineers ensure our pipelines and services run smoothly in production through developing our machine learning platform and integrating tools for data integrity and model observability.

Data Scientists Marco Venè and Marina Zemskova worked together on a project aimed at simplifying the discovery of GetYourGuide's broad collection of travel activities. We constantly explore new ways to categorize our products, as one activity may appeal to various user interests. For instance, a skip-the-line entrance ticket to the Vatican Museums could be associated with things like 'museums,' 'art,' 'history,' 'fast track entrance,' and 'family-friendly,' among others. Accurately linking our products to relevant travel interests is essential for ensuring our users can easily find what they’re looking for by navigating our app, website, or seeing relevant ads online.

The Challenge: Finding Interest Categories Takes Time

Identifying new travel interests can be a time-consuming task because it involves reviewing a large amount of raw text in different languages. Our Operations Team has many potential sources to scrutinize, such as travel blogs, customer reviews, and travel reports collected by our Data Engineering Team. Over time, we had accumulated a large backlog of text to be processed. While the travel interests found are accurate, manual reviewing is prone to false negative error, i.e. missing relevant entities. For these reasons, we built Named Entity Recognition (NER) models to scale and automate the process of uncovering new travel interests and updating our catalog database.

The kind of entities we are interested in could be: 'paradox museum,' 'cavern tour,' 'tulips,' 'glassblowing class.' We try to find both completely new entities that we miss in our database as well as different aliases and variations of the same concepts. For instance, we might already have an entity 'cave tour,' but still want to add 'cavern tour.'

Our data product goal is to extract these entities from raw text data that might be something like a 'guided tour to the Anne Frank house.' In this example, we are interested in the 'guided tour' travel interest.

In this blogpost, we will talk about how we developed a NER pipeline to automate and scale the recognition and extraction of new travel interests with the help of spaCy library and natural processing techniques. Happy reading!

Custom SpaCy Named Entity Recognition (NER)

A NER Machine Learning model is an information extraction model for locating and classifying named entities in unstructured text.

The spaCy library has the big advantage of providing pre-trained NER models in more than 20 languages that can be used as base models to be further trained with custom data and entities.

The models in spaCy are named by a language identifier, the source data used for pre-training the model (e.g. 'web'), and a specifier for the size of the model (e.g. 'lg' for large). All details on these models are available in the spaCy documentation.

The spaCy pre-trained NER models are neural networks that are already able to identify and extract a different set of entities such as persons, companies, numbers, and events. The full list can be found in the glossary.

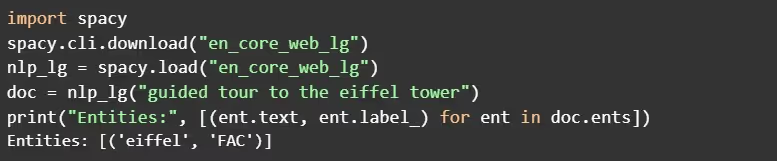

Downloading the models and extracting entities can be achieved in a few lines of code:

The pre-trained large English model recognizes 'eiffel' as a 'FAC,' meaning a facility. While that is already a relevant insight, in our project, we want to extract 'guided tour to' as a travel interest.

As we have seen, the pre-trained base models do not offer travel interests entities out of the box, but luckily the spaCy library allows us to train the custom NER models to do exactly that.

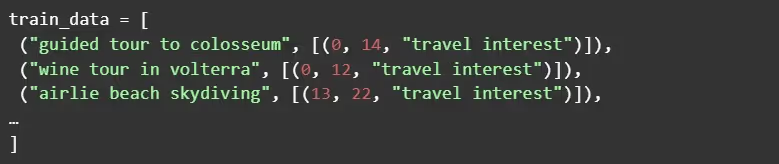

In order to train the model, we need to create a training set of fully annotated text. With annotation, we mean the definition of which text corresponds to which entity and where the entities are located in the text.

Specifically, we need a list of tuples containing each:

- The text to be annotated

- A list of tuples, containing the span of each entity and the corresponding entity label

To make the dataset more tangible, here is an example of our annotated training data.

Annotating text can be a tedious task, hence a large part of our project was dedicated to automating annotations.

To go into more detail, we used a mix of regular expressions (re package) and custom functions to:

- Match what parts of the text are travel interests and get the corresponding spans.

- Clean possible span overlap that breaks the spacy NER pipeline.

- Format the data for spaCy. This preprocessing step is available directly in the spaCy documentation.

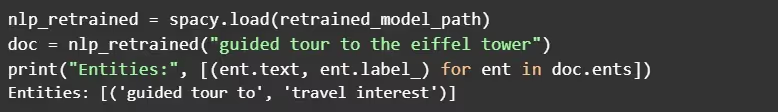

After retraining the model based on our own data, we can observe how the model has learned to predict entities for travel interests much better than before:

Let’s dive deeper into how NER model training works with spaCy.

Training our NER Models with spaCy v3

For training our models we used spaCy v3 to make use of its config system for defining all of the settings and hyperparameters in a single file. This setup makes it easier to track changes and understand what is going on under the hood.

To get started we generated a base configuration with spacy init config command. The same is also possible through a quick start widget on the spaCy website. To create a base config we need to specify the model language, pipeline components to train, hardware (CPU or GPU), and whether to optimize for efficiency or accuracy. The latter determines if it’s preferable to use a smaller model that would be fast for training and inference or a bigger, slower, but more accurate model. In our case, we want to train NER components for multiple different languages, using CPU and optimizing for accuracy. Inference speed is not crucial for us since our models run offline so we choose to use the large spaCy pipelines.

The base config determines the main settings and parameters for training the models. These include paths to training and validation data, embeddings we want to use and their type, specification of the pipeline components to train and types of model architectures to use for them. Additionally, it is possible to set the optimizer, batch sizes, and batching strategies. One can either go with the default settings or customize the configuration.

Once we are satisfied with these choices we can generate the full config by running init fill-config. The full config contains all of the training settings without any hidden defaults. This level of visibility is especially relevant for us given that we have multiple models to train and have several people working in collaboration on this project. It also gives us full control of the training procedure and helps deepen our understanding of the inner workings of spaCy pipelines.

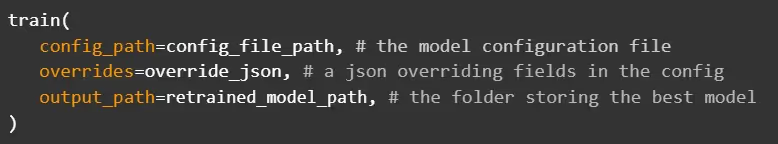

With spaCy v3 many of the necessary steps like early stopping, model evaluation, training, and logging are done with a single line of code or a hyperparameter setting saving us time on implementation, preventing errors, and making the code easier to read. For example, all we need to do to train our model for any language is to call a train function from spacy.cli.train module:

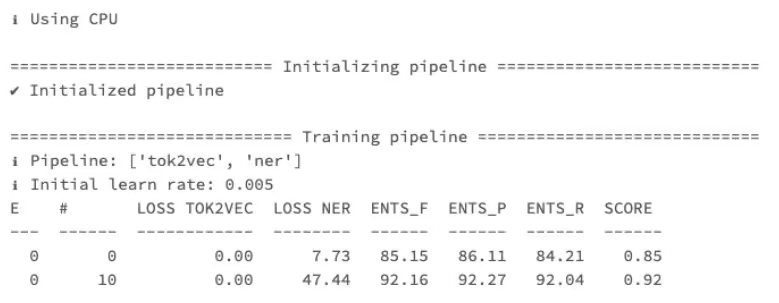

During training spaCy also provides logging of the execution steps like initialization, the start of the training procedure, loss, metrics after every evaluation step, and the path the final best model was saved to. Training logs could start something like this:

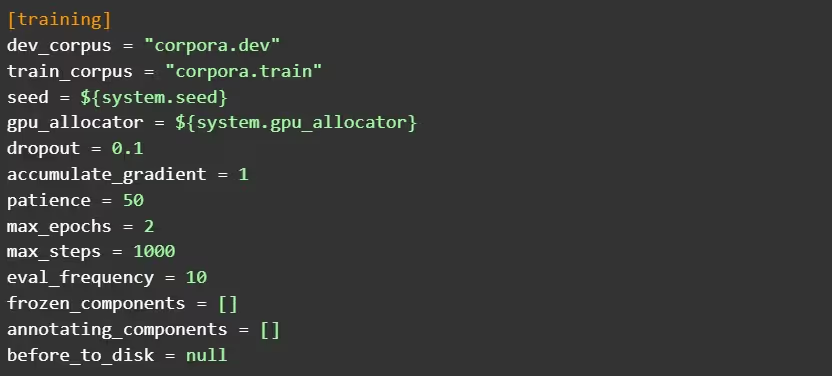

We can specify how often we want to check performance metrics and when to invoke early stopping by setting two parameters in the config’s [training] section: eval_frequency for the former and patience for the latter. When eval_frequency is equal to 10, it means that after every 10 iterations spaCy will calculate and log the current losses and performance metrics. For NER models, it is entity F-score, precision, recall, and the overall score. In our case, the overall score is the same as the F-score since this is the metric we care about the most due to both precision and recall being important to us.

Early stopping is controlled by setting the patience parameter of the config. If it is set to 100, it means that if the score hasn’t improved for 100 consecutive iterations, early stopping will be triggered and the training will stop before reaching the maximum number of iterations or epochs that could have been set with max_steps and max_epochs parameters.

The [training] section of the config then could look like below:

After training, to get the performance metrics of our best models on the validation set we can run evaluate function from spacy.cli.evaluate:

Since we have multiple languages, we need to create separate config files and enable executing the same training and evaluation functions in parallel for each language. This is all that is required to train our models with spaCy v3.

Post-Processing of the Extracted Entities

Once our models are trained, we are ready to get the predictions and extract our entities for travel interests. Depending on the use case, there can be different requirements for the desired output so we often also need some post-processing.

Things we might want to address:

- Travel interests that we already have in our databases.

- Misspellings or travel interests written not in our target languages.

- Extractions containing offensive or not brand-friendly terms.

- Extractions that are not actually travel interests or are irrelevant to our business (for example, 'parking).

There are other issues we might encounter but the above are the most common. To develop a solution for these issues we used a combination of rule-based and machine learning approaches. Some examples of these are:

- Using semantic similarity to travel interests we already have in our data to estimate relevance and connect novel extractions to travel interests groups we already have in our data.

- Using a vocabulary of a language model that was trained on a large corpus of text to find misspellings. If a term is not present in the vocabulary of the language model and we also never encountered it in our reviewed data, then it is likely a misspelling.

- Checking for intersection with terms from a blocklist created by our content specialists.

The post-processing step completes our pipeline. Tying it all together, the main steps of the pipeline can be seen in the diagram below:

As you can see, it is quite straightforward. After post-processing, the output is ready for upload to the data lake. From here, the new travel interests can be utilized to make our product inventory more relevant for our users and help them find the travel experiences they are looking for in any destination.

Final Thoughts

In conclusion, by leveraging the pre-trained models and the features of spaCy v3 we were able to develop custom Named Entity Recognition pipelines for multiple languages and improve the efficiency of our travel interest tagging process. The new travel interests are rolled out in our production databases via a series of experiments, allowing us to measure the impact of the tags for our users. Our project’s success demonstrates how simple natural language processing techniques and tools can help scale and enhance business operations.

.JPG)

.JPG)